Revision as of 16:50, 9 January 2003 by Heron (edit summary: velocity -> speed). Revision as of 23:57, 16 January 2003 by Ap (minor edit, no edit summary).


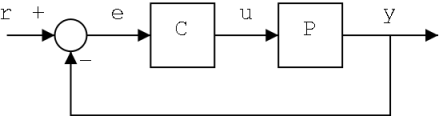


Revision as of 23:57, 16 January 2003

In engineering, control theory deals with the behaviour of dynamical systems over time. The desired output of a system is called the reference. When one or more output variables of a system need to follow a certain behaviour over time, a controller manipulates the inputs of the system to obtain that behaviour at the output of the system.

Take cruise control as an example. In this case, the system is a car. The goal of the cruise control is to keep the car at a constant speed. So, the output variable of the system is the speed of the car, and the primary input used to control that speed is the position of the gas pedal.

A simple way to implement cruise control is to lock the position of the gas pedal the moment the driver engages cruise control. This is fine if the car is driving on perfectly flat terrain. On hilly terrain, however, the car will slow down when going uphill and accelerate when going downhill, which its driver may find highly undesirable.

This type of controller is called an open-loop controller because there is no direct connection between the output of the system and its input. One of the main disadvantages of this type of controller is its sensitivity to the dynamics of the system under control: it cannot react to anything, such as the slope of the road, that makes the output deviate from the desired value.
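The locked-pedal behaviour can be reproduced with a very small simulation. The sketch below is only an illustration under assumed numbers: the first-order car model and the constants `GAIN`, `DRAG` and `G` are hypothetical and do not come from the text.

```python
# Hypothetical first-order car model (an assumption, not from the article):
#   dv/dt = GAIN * throttle - DRAG * v - G * slope
GAIN = 5.0   # acceleration per unit of throttle, m/s^2 (assumed)
DRAG = 0.1   # speed-proportional drag coefficient, 1/s (assumed)
G = 9.81     # gravitational acceleration, m/s^2


def simulate_open_loop(slope, throttle, v0=25.0, dt=0.1, steps=3000):
    """Integrate the model with forward Euler while the throttle stays locked.

    slope is the road grade (positive uphill, negative downhill).
    Returns the speed in m/s after steps * dt seconds.
    """
    v = v0
    for _ in range(steps):
        v += dt * (GAIN * throttle - DRAG * v - G * slope)
    return v


# Throttle that holds 25 m/s on flat ground under this model.
LOCKED_THROTTLE = DRAG * 25.0 / GAIN

for grade in (0.0, 0.05, -0.05):
    print(grade, round(simulate_open_loop(grade, LOCKED_THROTTLE), 2))
```

Under these assumptions the speed stays at the set value only on flat ground. Any nonzero grade moves it to a different steady speed, and nothing in the controller notices.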

To avoid the problems of the open-loop controller, control theory introduces feedback. The output of the system <math>y</math> is fed back to the reference value <math>r</math>. The controller <math>C</math> then takes the difference between the reference and the output, the error <math>e</math>, to change the inputs <math>u</math> to the system under control <math>P</math>. This is shown in the figure. This kind of controller is a closed-loop controller or feedback controller.

A simple feedback control loop
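As a rough illustration of the loop in the figure, the sketch below closes the loop around a car model with a purely proportional controller, so that the throttle is `kp` times the error. The model, its constants and the gain values are assumptions chosen for the example, and throttle limits are ignored.

```python
# Hypothetical first-order car model (an assumption, not from the article):
#   dv/dt = GAIN * u - DRAG * v - G * slope
GAIN = 5.0   # acceleration per unit of throttle, m/s^2 (assumed)
DRAG = 0.1   # speed-proportional drag coefficient, 1/s (assumed)
G = 9.81     # gravitational acceleration, m/s^2


def simulate_closed_loop(slope, kp, reference=25.0, v0=25.0, dt=0.01, steps=5000):
    """Proportional feedback: the error e = r - y drives the input u = kp * e.

    Returns the speed in m/s after steps * dt seconds of forward-Euler
    integration. Throttle saturation is deliberately not modelled.
    """
    v = v0
    for _ in range(steps):
        error = reference - v          # e = r - y
        u = kp * error                 # controller C: a plain gain
        v += dt * (GAIN * u - DRAG * v - G * slope)   # plant P
    return v


for kp in (2.0, 20.0):
    flat = simulate_closed_loop(0.0, kp)
    downhill = simulate_closed_loop(-0.05, kp)
    print(kp, round(flat, 3), round(downhill, 3))
```

In this sketch the speed on the slope stays close to the speed on flat ground, and a larger gain brings both closer to the reference. A small steady offset remains because the controller is only proportional.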

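If we assume the controller <math>C</math> and the plant <math>P</math> are linear, time-invariant and single input, single output, we can analyse the system above by using the Laplace transform on the variables. This gives us the following relations:

<math>Y(s) = P(s)U(s)</math>

<math>U(s) = C(s)E(s)</math>

<math>E(s) = R(s) - Y(s)</math>

Solving for <math>Y(s)</math> in terms of <math>R(s)</math>, we obtain:

<math>Y(s) = \frac{P(s)C(s)}{1 + P(s)C(s)} R(s)</math>

So, if we can ensure <math>|P(s)C(s)| \gg 1</math>, then <math>Y(s) \approx R(s)</math>.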
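For a unity-feedback loop in which the error is the reference minus the output, the ratio of output to reference at a given value of s is PC / (1 + PC). The sketch below evaluates that ratio numerically to show it approaching 1 as the loop gain grows. The plant `1 / (s + 1)`, the constant-gain controller and the evaluation point are hypothetical choices made only for illustration.

```python
def closed_loop_gain(p, c):
    """Return Y/R = PC / (1 + PC) for complex values of P and C at one s."""
    loop = p * c
    return loop / (1 + loop)


def plant(s):
    """Hypothetical first-order plant P(s) = 1 / (s + 1) (an assumption)."""
    return 1 / (s + 1)


s = 1j  # evaluate on the imaginary axis at 1 rad/s
for k in (1, 10, 1000):
    t = closed_loop_gain(plant(s), k)
    # abs(t - 1) measures how far the output is from the reference.
    print(k, abs(t - 1))
```

The deviation from 1 equals 1 / |1 + PC|, so it shrinks as the magnitude of PC increases.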

Controllability is a measure of the ability to use a certain input to control an output of a system. In the cruise control example, an additional input to control the speed of the car is the clutch pedal. By varying the amount of power transferred from the engine to the wheels, you could control the speed of the car. However, most drivers will use this method only at very low speeds. At high speeds, the car is more controllable through the gas pedal.

Observability is a measure of how well the internal states of a system can be inferred from its external outputs. The observability and controllability of a system are mathematical duals.
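One common way to make these two notions concrete, which the text above does not itself introduce, is the rank test on a linear state-space model with matrices A, B and C. The sketch below assumes a hypothetical two-state system and plain Python lists. It builds the controllability matrix [B, AB] and the observability matrix [C; CA], and checks that the observability matrix of (A, C) is the transpose of the controllability matrix of the transposed pair.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(row) for row in zip(*m)]


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def controllability_matrix(a, b):
    """[B, AB] for a two-state system; b is a 2x1 column."""
    ab = matmul(a, b)
    return [[b[0][0], ab[0][0]], [b[1][0], ab[1][0]]]


def observability_matrix(a, c):
    """[C; CA] for a two-state system; c is a 1x2 row."""
    ca = matmul(c, a)
    return [list(c[0]), list(ca[0])]


# Hypothetical example system (an assumption, not from the article).
A = [[0, 1], [-2, -3]]
B = [[0], [1]]
C = [[1, 0]]

ctrb = controllability_matrix(A, B)
obsv = observability_matrix(A, C)
print("controllable:", det2(ctrb) != 0)
print("observable:", det2(obsv) != 0)
# Duality: observing (A, C) is the same test as controlling (A^T, C^T).
print(obsv == transpose(controllability_matrix(transpose(A), transpose(C))))
```

For a two-state system, a nonzero determinant means the matrix has full rank, which is the usual criterion for the system being controllable or observable.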
