An Internet bot, web robot, robot, or simply bot is a software application that runs automated tasks (scripts) on the Internet, usually with the intent to imitate human activity, such as messaging, on a large scale. An Internet bot plays the client role in a client–server model, whereas the server role is usually played by web servers. Internet bots can perform simple and repetitive tasks much faster than a person could. The most extensive use of bots is for web crawling, in which an automated script fetches, analyzes and files information from web servers. More than half of all web traffic is generated by bots.
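The fetch, analyze and file cycle of a web crawler can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a description of how any particular search engine works: the `crawl` function, the `LinkExtractor` class, the `FAKE_WEB` dictionary and the example.com URLs are all hypothetical, and an in-memory dictionary stands in for real web servers so that the sketch runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href targets of <a> tags (the "analyze" step)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl; returns {url: [absolute outgoing links]}.

    `fetch` is any callable mapping a URL to HTML text, or to None if the
    page is unavailable. A real bot would issue HTTP requests here.
    """
    index = {}                       # the "file" step: what was found where
    queue = deque([start_url])
    while queue and len(index) < max_pages:
        url = queue.popleft()
        if url in index:
            continue                 # already visited
        html = fetch(url)            # the "fetch" step
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        links = [urljoin(url, link) for link in parser.links]
        index[url] = links
        queue.extend(links)
    return index


# Hypothetical stand-in for web servers: URL -> HTML body.
FAKE_WEB = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/">home</a>',
    "http://example.com/b": "<p>no links here</p>",
}

site_index = crawl("http://example.com/", FAKE_WEB.get)
```

Here the bot is the client: it decides which pages to request and in what order, while the servers (simulated by `FAKE_WEB`) only answer requests.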

Efforts by web servers to restrict bots vary. Some servers have a robots.txt file that contains the rules governing bot behavior on that server. Any bot that does not follow the rules could, in theory, be denied access to or removed from the affected website. However, unless the server runs software that enforces the rules in the file, adherence is entirely voluntary: there is no way to ensure that a bot's creator or operator reads or acknowledges the robots.txt file. Some bots are "good", such as search engine spiders, while others are used to launch malicious attacks, for example on political campaigns.
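As a rough illustration of how a well-behaved bot might consult such rules, the sketch below uses Python's standard `urllib.robotparser` module. The rules in `ROBOTS_TXT`, the bot names `ExampleBot` and `BadBot`, the `may_fetch` helper and the example.com URLs are assumptions made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules, as a site might publish them at /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_fetch(user_agent, url):
    """Return True if the parsed rules allow `user_agent` to request `url`."""
    return parser.can_fetch(user_agent, url)


allowed_public = may_fetch("ExampleBot", "http://example.com/index.html")
allowed_private = may_fetch("ExampleBot", "http://example.com/private/data.html")
allowed_badbot = may_fetch("BadBot", "http://example.com/index.html")
```

The check runs inside the bot's own code, which is why compliance is voluntary: a bot that never calls something like `may_fetch` is not stopped by the file itself.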

IM and IRC

Some bots communicate with users of Internet-based services, via instant messaging (IM), Internet Relay Chat (IRC), or other web interfaces such as Facebook bots and Twitter bots. These chatbots may allow people to ask questions in plain English and then formulate a response. Such bots can often handle reporting weather, zip code information, sports scores, currency or other unit conversions, etc. Others are used for entertainment, such as SmarterChild on AOL Instant Messenger and MSN Messenger.

Additional roles of an IRC bot may be to listen on a conversation channel, and to comment on certain phrases uttered by the participants (based on pattern matching). This is sometimes used as a help service for new users or to censor profanity.
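The pattern-matching behavior described above might look like the following sketch. It is not tied to any real IRC library and does not connect to a server; the `RULES` table, the `BANNED_WORDS` list and the `respond` and `censor` functions are hypothetical names chosen for illustration.

```python
import re

# Hypothetical trigger patterns and canned replies for a help channel.
RULES = [
    (re.compile(r"\bhow do i (register|join)\b", re.IGNORECASE),
     "See the channel topic for instructions on how to {0}."),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE),
     "Hello! Type !help for a list of commands."),
]

# Hypothetical word list for the profanity filter.
BANNED_WORDS = re.compile(r"\b(darn|heck)\b", re.IGNORECASE)


def respond(message):
    """Return the bot's reply to a channel message, or None to stay silent."""
    for pattern, reply in RULES:
        match = pattern.search(message)
        if match:
            # Captured text (if any) is substituted into the reply template.
            return reply.format(*match.groups())
    return None


def censor(message):
    """Replace each banned word with asterisks of the same length."""
    return BANNED_WORDS.sub(lambda m: "*" * len(m.group(0)), message)
```

A bot built this way would call `respond` on every line it hears in the channel and post the result whenever it is not `None`.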

Social bots

Main article: Social bot

Social bots are sets of algorithms that carry out repetitive sets of instructions in order to establish a service or connection among social networking users. Among the various designs of networking bots, the most common are chat bots, algorithms designed to converse with a human user, and social bots, algorithms designed to mimic human behavior and converse in patterns similar to those of a human user. The history of social botting can be traced back to Alan Turing in the 1950s and his vision of designing sets of instructional code that could pass the Turing test. In the 1960s, Joseph Weizenbaum created ELIZA, a natural language processing computer program considered an early indicator of artificial intelligence algorithms. ELIZA inspired computer programmers to design tasked programs that can match behavior patterns to their sets of instructions. As a result, natural language processing has become an influential factor in the development of artificial intelligence and social bots. As information and ideas spread ever more widely on social media websites, technological advances follow the same pattern.

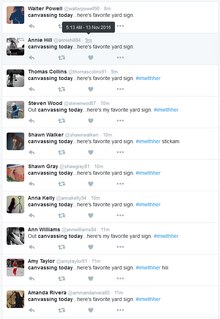

Reports of political interference in recent elections, including the 2016 US and 2017 UK general elections, have fed the notion that bots are becoming more prevalent, and have raised ethical questions about the relationship between a bot's design and its designer. Emilio Ferrara, a computer scientist from the University of Southern California writing in Communications of the ACM, said that the lack of resources available for fact-checking and information verification results in large volumes of false reports and claims made about these bots on social media platforms. In the case of Twitter, most of these bots are programmed with search filter capabilities that target keywords and phrases favoring political agendas and then retweet them. Because such bots are programmed to spread unverified information across social media platforms, they pose a challenge for programmers in a hostile political climate. What Ferrara called the Bot Effect is the socialization of bots and human users, which creates a vulnerability to leaks of personal information and to polarizing influences outside the ethics of the bot's code. It was confirmed by Guillory Kramer in his study, in which he observed the behavior of emotionally volatile users and the impact bots have on them, altering their perception of reality.

Commercial bots

There has been a great deal of controversy about the use of bots in automated trading. Auction website eBay took legal action in an attempt to stop a third-party company from using bots to look for bargains on its site; this approach backfired on eBay and attracted the attention of further bots. The United Kingdom-based betting exchange Betfair saw such a large amount of traffic coming from bots that it launched a WebService API aimed at bot programmers, through which it can actively manage bot interactions.

Bot farms are known to be used in online app stores, like the Apple App Store and Google Play, to manipulate positions or increase positive ratings/reviews.

A rapidly growing, benign form of internet bot is the chatbot. Since 2016, when Facebook Messenger allowed developers to place chatbots on its platform, their use on that app alone has grown rapidly: 30,000 bots were created for Messenger in the first six months, rising to 100,000 by September 2017. Avi Ben Ezra, CTO of SnatchBot, told Forbes that evidence from the use of the company's chatbot-building platform pointed to a near-future saving of millions of hours of human labor as "live chat" on websites was replaced with bots.

Companies use internet bots to increase online engagement and streamline communication, and often to cut costs: instead of employing people to communicate with consumers, they deploy chatbots to answer customers' questions. For example, Domino's developed a chatbot that can take orders via Facebook Messenger. Chatbots allow companies to allocate their employees' time to other tasks.

Malicious bots

One example of the malicious use of bots is the coordination and operation of an automated attack on networked computers, such as a denial-of-service attack by a botnet. Internet bots or web bots can also be used to commit click fraud, and more recently have appeared in MMORPGs as computer game bots. Another category is represented by spambots, internet bots that attempt to spam large amounts of content on the Internet, usually adding advertising links. More than 94.2% of websites have experienced a bot attack.

There are malicious bots (and botnets) of the following types:

- Spambots that harvest email addresses from contact or guestbook pages

- Downloaded programs that suck bandwidth by downloading entire websites

- Website scrapers that grab the content of websites and re-use it without permission on automatically generated doorway pages

- Registration bots that sign up a specific email address to numerous services in order to have the confirmation messages flood the email inbox and distract from important messages indicating a security breach.

- Viruses and worms

- DDoS attacks

- Botnets, zombie computers, etc.

- Spambots that try to redirect people onto a malicious website, sometimes found in comment sections or forums of various websites

- Viewbots that create fake views

- Bots that buy up higher-demand seats for concerts, used particularly by ticket brokers who resell the tickets. These bots run through the purchase process of entertainment event-ticketing sites and obtain better seats by pulling back as many seats as they can.

- Bots that are used in massively multiplayer online role-playing games to farm for resources that would otherwise take significant time or effort to obtain, which can be a concern for online in-game economies.

- Bots that increase traffic counts on analytics reporting to extract money from advertisers. A study by Comscore found that over half of ads shown across thousands of campaigns between May 2012 and February 2013 were not served to human users.

- Bots used on internet forums to automatically post inflammatory or nonsensical posts to disrupt the forum and anger users.

In 2012, journalist Percy von Lipinski reported that he had discovered millions of bot-generated ("botted" or "pinged") views at CNN iReport. CNN iReport quietly removed millions of views from the account of iReporter Chris Morrow. It is not known whether the ad revenue received by CNN from the fake views was ever returned to the advertisers.

The most widely used anti-bot technique is CAPTCHA. Examples of providers include reCAPTCHA, Minteye, Solve Media and NuCaptcha. However, CAPTCHAs are not foolproof in preventing bots, as they can often be circumvented by computer character recognition, by exploiting security holes, or by outsourcing CAPTCHA solving to cheap labor.

Protection against bots

In the case of academic surveys, protection against automated test-taking bots is essential for maintaining accuracy and consistency in the results of the survey. Without proper precautions against these bots, the results of a survey can become skewed or inaccurate. Researchers indicate that the best way to keep bots out of surveys is not to allow them to enter in the first place. The survey should draw its participants from a reliable source, such as an existing department or group at work. This way, malicious bots do not have the opportunity to infiltrate the study.

Another form of protection against bots is the CAPTCHA test mentioned in a previous section. The name stands for "Completely Automated Public Turing test to tell Computers and Humans Apart". The test is used to quickly distinguish a real user from a bot by posing a challenge that a human can complete easily but a bot cannot, such as recognizing distorted letters or numbers, or picking out specific parts of an image, such as traffic lights on a busy street. CAPTCHAs are a popular form of protection because they are quick to complete, require little effort, and are easy to implement.
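The server-side bookkeeping behind such a challenge can be sketched as follows. This is only an outline under stated assumptions: the `new_challenge` and `verify` functions and the in-memory `_pending` store are hypothetical, and the part that actually makes a CAPTCHA hard for bots (rendering the text as a distorted image or audio clip) is deliberately left out.

```python
import hmac
import secrets
import string

# Hypothetical in-memory store: challenge token -> expected answer.
_pending = {}


def new_challenge(length=6):
    """Create a challenge; returns (token, text).

    A real CAPTCHA would render `text` as a distorted image or audio clip
    before showing it to the visitor; that step is omitted here.
    """
    alphabet = string.ascii_uppercase + string.digits
    text = "".join(secrets.choice(alphabet) for _ in range(length))
    token = secrets.token_urlsafe(16)
    _pending[token] = text
    return token, text


def verify(token, answer):
    """Check an answer. Each token is single-use, so replayed answers fail."""
    expected = _pending.pop(token, None)
    if expected is None:
        return False
    # Constant-time comparison; answers are treated as case-insensitive.
    return hmac.compare_digest(expected.encode(), answer.strip().upper().encode())
```

Removing the token on the first attempt means a solved challenge cannot be reused, so each form submission requires a fresh solution.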

There are also companies that specialize in protection against bots, including DataDome, Akamai, BrandSSL and Imperva. These companies offer their clients defense systems against DDoS attacks and infrastructure attacks, as well as general cybersecurity services. Although these services can be expensive, they can be crucial for both large corporations and small businesses.

Human interaction with social bots

There are two main concerns with bots: clarity and face-to-face support. The cultural background of human beings affects the way they communicate with social bots. Some users recognize that online bots have the ability to "masquerade" as humans and have become highly aware of their presence. As a result, some users are unsure whether they are interacting with a social bot.

Many people believe that bots are vastly less intelligent than humans and are therefore not worthy of respect.

Min-Sun Kim proposed five concerns or issues that may arise when communicating with a social robot: avoiding damage to people's feelings, minimizing impositions, disapproval from others, clarity, and how effectively messages come across.

People who oppose social robots argue that they detract from the genuine creation of human relationships. Opponents also note that the use of social bots adds a new, unnecessary layer to privacy protection. Many users call for stricter legislation on social bots to ensure that private information remains protected. The discussion of what to do with social bots and how far they should go remains ongoing.

Social bots and political discussions

In recent years, political discussion platforms and politics on social media have become highly unstable and volatile. With the introduction of social bots on the political discussion scene, many users worry about their effect on the discussion and on election outcomes. The biggest offender on the social media side is X (previously Twitter), where heated political discussions are started both by bots and by real users. The result is a misuse of political discussion on these platforms and a general mistrust among users of what they see.

See also

- Agent-based model (for bot's theory)

- Botnet

- Chatbot

- Comparison of Internet Relay Chat bots

- Dead Internet theory

- Facebook Bots

- IRC bot

- Online algorithm

- Social bot

- Software agent

- Software bot

- Spambot

- Twitterbot

- UBot Studio

- Votebots

- Web brigades

- Misplaced Pages:Bots – bots on Misplaced Pages

References

- "bot". Etymology, origin and meaning of bot by etymonline. October 9, 1922. Retrieved September 21, 2023.

- Dunham, Ken; Melnick, Jim (2009). Malicious Bots: An outside look of the Internet. CRC Press. ISBN 978-1420069068.

- Zeifman, Igal (January 24, 2017). "Bot Traffic Report 2016". Incapsula. Retrieved February 1, 2017.

- "What is a bot: types and functions". IONOS Digitalguide. November 16, 2021. Retrieved January 28, 2022.

- Howard, Philip N (October 18, 2018). "How Political Campaigns Weaponize Social Media Bots". IEEE Spectrum.

- Ferrara, Emilio; Varol, Onur; Davis, Clayton; Menczer, Filippo; Flammini, Alessandro (2016). "The Rise of Social Bots". Communications of the ACM. 59 (7): 96–104. arXiv:1407.5225. doi:10.1145/2818717. S2CID 1914124.

- Bessi, Alessandro; Ferrara, Emilio (November 7, 2016). "Social Bots Distort the 2016 US Presidential Election Online Discussion". First Monday. SSRN 2982233.

- "Biggest FRAUD in the Top 25 Free Ranking". TouchArcade – iPhone, iPad, Android Games Forum.

- "App Store fake reviews: Here's how they encourage your favourite developers to cheat". Electricpig. Archived from the original on October 18, 2017. Retrieved June 11, 2014.

- "Facebook Messenger Hits 100,000 bots". April 18, 2017. Retrieved September 22, 2017.

- Murray Newlands. "These Chatbot Usage Metrics Will Change Your Customer Service Strategy". Forbes. Retrieved March 8, 2018.

- "How companies are using chatbots for marketing: Use cases and inspiration". MarTech Today. January 22, 2018. Retrieved April 10, 2018.

- Bekerman, Dima (December 1, 2016). "How Registration Bots Concealed the Hacking of My Amazon Account". Application Security, Industry Perspective. www.Imperva.com/blog.

- Carr, Sam (July 15, 2019). "What Is Viewbotting: How Twitch Are Taking On The Ad Fraudsters". PPC Protect. Retrieved September 19, 2020.

- Lewis, Richard (March 17, 2015). "Leading StarCraft streamer embroiled in viewbot controversy". Dot Esports. Retrieved September 19, 2020.

- Safruti, Ido (June 19, 2017). "Why Detecting Bot Attacks Is Becoming More Difficult". DARKReading.

- Kang, Ah Reum; Jeong, Seong Hoon; Mohaisen, Aziz; Kim, Huy Kang (April 26, 2016). "Multimodal game bot detection using user behavioral characteristics". SpringerPlus. 5 (1): 523. arXiv:1606.01426. doi:10.1186/s40064-016-2122-8. ISSN 2193-1801. PMC 4844581. PMID 27186487.

- Holiday, Ryan (January 16, 2014). "Fake Traffic Means Real Paydays". BetaBeat. Archived from the original on January 3, 2015. Retrieved April 28, 2014.

- von Lipinski, Percy (May 28, 2013). "CNN's iReport hit hard by pay-per-view scandal". PulsePoint. Archived from the original on August 18, 2016. Retrieved July 21, 2016.

External links

Media related to Bots at Wikimedia Commons
