Bootstrapping is a procedure for estimating the distribution of an estimator by resampling (often with replacement) one's data or a model estimated from the data. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods.

Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distribution is the empirical distribution function of the observed data. In the case where a set of observations can be assumed to be from an independent and identically distributed population, this can be implemented by constructing a number of resamples, with replacement, of the observed data set, each of equal size to the observed data set. A key result in Efron's seminal paper that introduced the bootstrap is the favorable performance of bootstrap methods using sampling with replacement compared to prior methods like the jackknife that sample without replacement. However, since its introduction, numerous variants on the bootstrap have been proposed, including methods that sample without replacement or that create bootstrap samples larger or smaller than the original data.

The bootstrap may also be used for constructing hypothesis tests. It is often used as an alternative to statistical inference based on the assumption of a parametric model when that assumption is in doubt, or where parametric inference is impossible or requires complicated formulas for the calculation of standard errors.

History

The bootstrap was first described by Bradley Efron in "Bootstrap methods: another look at the jackknife" (1979), inspired by earlier work on the jackknife. Improved estimates of the variance were developed later. A Bayesian extension was developed in 1981. The bias-corrected and accelerated (BCa) bootstrap was developed by Efron in 1987, and the approximate bootstrap confidence interval (ABC, or approximate BCa) procedure in 1992.

Approach

The basic idea of bootstrapping is that inference about a population from sample data (sample → population) can be modeled by resampling the sample data and performing inference about a sample from resampled data (resampled → sample). As the population is unknown, the true error in a sample statistic against its population value is unknown. In bootstrap-resamples, the 'population' is in fact the sample, and this is known; hence the quality of inference of the 'true' sample from resampled data (resampled → sample) is measurable.

More formally, the bootstrap works by treating inference of the true probability distribution J, given the original data, as being analogous to an inference of the empirical distribution Ĵ, given the resampled data. The accuracy of inferences regarding Ĵ using the resampled data can be assessed because we know Ĵ. If Ĵ is a reasonable approximation to J, then the quality of inference on J can in turn be inferred.

As an example, assume we are interested in the average (or mean) height of people worldwide. We cannot measure all the people in the global population, so instead, we sample only a tiny part of it, and measure that. Assume the sample is of size N; that is, we measure the heights of N individuals. From that single sample, only one estimate of the mean can be obtained. In order to reason about the population, we need some sense of the variability of the mean that we have computed. The simplest bootstrap method involves taking the original data set of heights, and, using a computer, sampling from it to form a new sample (called a 'resample' or bootstrap sample) that is also of size N. The bootstrap sample is taken from the original by using sampling with replacement (e.g. we might 'resample' 5 times from [1,2,3,4,5] and get [2,5,4,4,1]), so, assuming N is sufficiently large, for all practical purposes there is virtually zero probability that it will be identical to the original "real" sample. This process is repeated a large number of times (typically 1,000 or 10,000 times), and for each of these bootstrap samples, we compute its mean (each of these is called a "bootstrap estimate"). We can now create a histogram of the bootstrap means. This histogram provides an estimate of the shape of the distribution of the sample mean, from which we can answer questions about how much the mean varies across samples. (The method here, described for the mean, can be applied to almost any other statistic or estimator.)
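The procedure just described can be sketched in a few lines of Python using only the standard library. This is a minimal illustration under stated assumptions, not a reference implementation. The height values are invented, and the helper name `bootstrap_means` is hypothetical. Instead of drawing a histogram, the sketch reports the standard deviation of the bootstrap means, which estimates the standard error of the sample mean.

```python
import random
import statistics

def bootstrap_means(data, n_resamples=10_000, seed=0):
    """Return the means of n_resamples bootstrap resamples of data."""
    rng = random.Random(seed)
    n = len(data)
    means = []
    for _ in range(n_resamples):
        # Draw n values with replacement from the observed data.
        resample = rng.choices(data, k=n)
        means.append(statistics.fmean(resample))
    return means

# Hypothetical heights in centimetres (illustrative only).
heights = [162.0, 175.5, 158.2, 181.3, 169.9, 172.4, 165.0, 177.8, 160.6, 183.1]
boot = bootstrap_means(heights)

# The spread of the bootstrap means estimates the standard error of the mean.
print("sample mean:", statistics.fmean(heights))
print("bootstrap SE:", statistics.stdev(boot))
```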

Discussion


Advantages

A great advantage of the bootstrap is its simplicity. It is a straightforward way to derive estimates of standard errors and confidence intervals for complex estimators of the distribution, such as percentile points, proportions, odds ratios, and correlation coefficients. Despite its simplicity, bootstrapping can be applied to complex sampling designs (e.g. for a population divided into s strata with $n_s$ observations per stratum, bootstrapping can be applied to each stratum). The bootstrap is also an appropriate way to control and check the stability of the results. Although for most problems it is impossible to know the true confidence interval, the bootstrap is asymptotically more accurate than the standard intervals obtained using the sample variance and assumptions of normality. Bootstrapping is also a convenient method that avoids the cost of repeating the experiment to obtain other samples of data.

Disadvantages

Bootstrapping depends heavily on the estimator used. Although it is simple, naive use of bootstrapping will not always yield asymptotically valid results and can lead to inconsistency. Bootstrapping is asymptotically consistent under some conditions, but it does not provide general finite-sample guarantees. The result may depend on how representative the sample is. The apparent simplicity may conceal the fact that important assumptions are made when undertaking the bootstrap analysis (e.g. independence of samples or a sufficiently large sample size), whereas other approaches would state these assumptions more formally. Bootstrapping can also be time-consuming. In addition, little software is available for bootstrapping, because it is difficult to automate using traditional statistical computer packages.

Recommendations

Scholars have recommended more bootstrap samples as available computing power has increased. If the results may have substantial real-world consequences, then one should use as many samples as is reasonable, given available computing power and time. Increasing the number of samples cannot increase the amount of information in the original data; it can only reduce the effects of random sampling errors which can arise from a bootstrap procedure itself. Moreover, there is evidence that numbers of samples greater than 100 lead to negligible improvements in the estimation of standard errors. In fact, according to the original developer of the bootstrapping method, even setting the number of samples at 50 is likely to lead to fairly good standard error estimates.
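The following sketch compares bootstrap standard-error estimates of the mean for several numbers of resamples B. It assumes simulated standard-normal data and a hypothetical helper `bootstrap_se`. For the sample mean, the ideal bootstrap standard error (its limit as B grows) equals the plug-in value $\hat{\sigma}/\sqrt{n}$, where $\hat{\sigma}$ is the population-style standard deviation of the data, so that value serves as a reference. A single simulated data set only illustrates the point. It is not evidence for any particular threshold.

```python
import random
import statistics

def bootstrap_se(data, n_resamples, rng):
    """Bootstrap estimate of the standard error of the sample mean."""
    n = len(data)
    means = [statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(means)

rng = random.Random(42)
# Simulated data: 100 draws from a standard normal distribution (illustrative only).
data = [rng.gauss(0.0, 1.0) for _ in range(100)]

# Ideal (B -> infinity) bootstrap SE of the mean: plug-in sd divided by sqrt(n).
reference = statistics.pstdev(data) / len(data) ** 0.5

estimates = {b: bootstrap_se(data, b, rng) for b in (50, 100, 1000, 5000)}
for b, se in estimates.items():
    print(f"B={b:5d}  bootstrap SE={se:.4f}  reference={reference:.4f}")
```

Increasing B only shrinks the Monte Carlo noise around the reference value. It cannot move the reference value itself.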

Adèr et al. recommend the bootstrap procedure for the following situations:

- When the theoretical distribution of a statistic of interest is complicated or unknown. Since the bootstrapping procedure is distribution-independent it provides an indirect method to assess the properties of the distribution underlying the sample and the parameters of interest that are derived from this distribution.

- When the sample size is insufficient for straightforward statistical inference. If the underlying distribution is well-known, bootstrapping provides a way to account for the distortions caused by the specific sample that may not be fully representative of the population.

- When power calculations have to be performed, and a small pilot sample is available. Most power and sample size calculations are heavily dependent on the standard deviation of the statistic of interest. If the estimate used is incorrect, the required sample size will also be wrong. One method to get an impression of the variation of the statistic is to perform bootstrapping on a small pilot sample to get an impression of the variance.

However, Athreya has shown that if one performs a naive bootstrap on the sample mean when the underlying population lacks a finite variance (for example, a power law distribution), then the bootstrap distribution will not converge to the same limit as the sample mean. As a result, confidence intervals on the basis of a Monte Carlo simulation of the bootstrap could be misleading. Athreya states that "Unless one is reasonably sure that the underlying distribution is not heavy tailed, one should hesitate to use the naive bootstrap".

Types of bootstrap scheme


In univariate problems, it is usually acceptable to resample the individual observations with replacement ("case resampling" below) unlike subsampling, in which resampling is without replacement and is valid under much weaker conditions compared to the bootstrap. In small samples, a parametric bootstrap approach might be preferred. For other problems, a smooth bootstrap will likely be preferred.

For regression problems, various other alternatives are available.

Case resampling

The bootstrap is generally useful for estimating the distribution of a statistic (e.g. mean, variance) without using normality assumptions (as required, e.g., for a z-statistic or a t-statistic). In particular, the bootstrap is useful when there is no analytical form or an asymptotic theory (e.g., an applicable central limit theorem) to help estimate the distribution of the statistics of interest. This is because bootstrap methods can apply to most random quantities, e.g., the ratio of variance and mean. There are at least two ways of performing case resampling.

- The Monte Carlo algorithm for case resampling is quite simple. First, we resample the data with replacement; the size of the resample must equal the size of the original data set. Then the statistic of interest is computed from this resample. We repeat this routine many times to get a more precise estimate of the bootstrap distribution of the statistic.

- The 'exact' version for case resampling is similar, but we exhaustively enumerate every possible resample of the data set. This can be computationally expensive, as there are a total of $\binom{2n-1}{n} = \frac{(2n-1)!}{n!\,(n-1)!}$ different resamples, where n is the size of the data set. Thus for n = 5, 10, 20, 30 there are 126, 92378, 6.89 × 10^10 and 5.91 × 10^16 different resamples respectively.
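Both variants can be sketched in Python. The function names below are hypothetical. The exact version enumerates each distinct resample as a multiset of indices and weights it by its multinomial probability $n!/(c_1!\cdots c_n!)\,n^{-n}$, where $c_i$ counts how often case $i$ appears. As the counts above show, exact enumeration is only feasible for very small n.

```python
import itertools
import math
import random
import statistics
from collections import Counter

def monte_carlo_bootstrap(data, statistic, n_resamples=2000, seed=0):
    """Monte Carlo case resampling: draw n cases with replacement, B times."""
    rng = random.Random(seed)
    n = len(data)
    return [statistic(rng.choices(data, k=n)) for _ in range(n_resamples)]

def exact_bootstrap(data, statistic):
    """Exact case resampling: every distinct resample (a multiset of indices)
    paired with its probability under uniform sampling with replacement."""
    n = len(data)
    results = []
    for idx in itertools.combinations_with_replacement(range(n), n):
        # Number of ordered draws that produce this multiset (multinomial coefficient).
        ways = math.factorial(n)
        for c in Counter(idx).values():
            ways //= math.factorial(c)
        results.append((statistic([data[i] for i in idx]), ways / n**n))
    return results

def n_distinct_resamples(n):
    """Number of distinct resamples of n cases: C(2n - 1, n)."""
    return math.comb(2 * n - 1, n)

data = [2.1, 3.4, 1.9, 5.6, 4.2]
exact = exact_bootstrap(data, statistics.fmean)
print(len(exact), n_distinct_resamples(5))   # 126 126
print(sum(p for _, p in exact))              # total probability, 1.0 up to rounding
mc = monte_carlo_bootstrap(data, statistics.fmean)
```

For the sample mean, the exact bootstrap distribution has mean $\bar{x}$ and variance equal to the plug-in variance divided by n. The Monte Carlo version only approximates these values.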

Estimating the distribution of sample mean

Consider a coin-flipping experiment. We flip the coin and record whether it lands heads or tails. Let X = x1, x2, …, x10 be 10 observations from the experiment, where xi = 1 if the ith flip lands heads and 0 otherwise. By invoking the assumption that the average of the coin flips is normally distributed, we can use the t-statistic to estimate the distribution of the sample mean,

$$\bar{x} = \frac{1}{10}(x_1 + x_2 + \cdots + x_{10}).$$

Such a normality assumption can be justified either as an approximation of the distribution of each individual coin flip or as an approximation of the distribution of the average of a large number of coin flips. The former is a poor approximation because the true distribution of the coin flips is Bernoulli instead of normal. The latter is a valid approximation in infinitely large samples due to the central limit theorem.

However, if we are not ready to make such a justification, then we can use the bootstrap instead. Using case resampling, we can derive the distribution of $\bar{x}$. We first resample the data to obtain a bootstrap resample. An example of the first resample might look like this: X1* = x2, x1, x10, x10, x3, x4, x6, x7, x1, x9. There are some duplicates, since a bootstrap resample comes from sampling with replacement from the data. Also, the number of data points in a bootstrap resample is equal to the number of data points in our original observations. Then we compute the mean of this resample and obtain the first bootstrap mean, μ1*. We repeat this process to obtain the second resample X2* and compute the second bootstrap mean μ2*. If we repeat this 100 times, then we have μ1*, μ2*, ..., μ100*. This represents an empirical bootstrap distribution of the sample mean. From this empirical distribution, one can derive a bootstrap confidence interval for the purpose of hypothesis testing.
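A minimal Python sketch of the coin-flip example follows. The ten outcomes are hypothetical, and the 90% interval is a crude percentile interval read directly off the sorted bootstrap means. It is one simple choice among the interval methods discussed later.

```python
import random
import statistics

# Hypothetical outcomes of 10 coin flips (1 = heads, 0 = tails).
flips = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]

rng = random.Random(1)
n_boot = 100  # as in the text; more resamples are usually advisable
boot_means = []
for _ in range(n_boot):
    resample = rng.choices(flips, k=len(flips))  # sample with replacement
    boot_means.append(statistics.fmean(resample))

# A crude 90% percentile interval from the sorted bootstrap means
# (the 6th and 95th of the 100 ordered values).
ordered = sorted(boot_means)
lower, upper = ordered[5], ordered[94]
print(f"observed mean = {statistics.fmean(flips)}, 90% interval ≈ ({lower}, {upper})")
```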

Regression

In regression problems, case resampling refers to the simple scheme of resampling individual cases – often rows of a data set. For regression problems, as long as the data set is fairly large, this simple scheme is often acceptable. However, the method is open to criticism.

In regression problems, the explanatory variables are often fixed, or at least observed with more control than the response variable. Also, the range of the explanatory variables defines the information available from them. Therefore, to resample cases means that each bootstrap sample will lose some information. As such, alternative bootstrap procedures should be considered.
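Case resampling for a straight-line regression can be sketched as follows. The data are simulated, and the helpers `ols_slope` and `case_resampling_slopes` are hypothetical names. Whole (x, y) rows are drawn with replacement and the slope is refitted each time. A resample in which every x is identical has no defined slope, so the sketch discards it.

```python
import random
import statistics

def ols_slope(xs, ys):
    """Least-squares slope for a single explanatory variable."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def case_resampling_slopes(xs, ys, n_resamples=2000, seed=0):
    """Case resampling: draw whole (x, y) rows with replacement and refit."""
    rng = random.Random(seed)
    rows = list(zip(xs, ys))
    slopes = []
    while len(slopes) < n_resamples:
        sample = rng.choices(rows, k=len(rows))
        bx = [x for x, _ in sample]
        if len(set(bx)) < 2:
            continue  # every x identical: slope undefined, draw again
        slopes.append(ols_slope(bx, [y for _, y in sample]))
    return slopes

# Simulated data (illustrative only): y = 2x + standard normal noise.
rng = random.Random(7)
xs = [float(i) for i in range(1, 21)]
ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
slopes = case_resampling_slopes(xs, ys)
print(ols_slope(xs, ys), statistics.stdev(slopes))
```

Each resample repeats some x values and omits others. This is the loss of design information criticized above.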

Bayesian bootstrap

Bootstrapping can be interpreted in a Bayesian framework using a scheme that creates new data sets through reweighting the initial data. Given a set of $N$ data points, the weighting assigned to data point $i$ in a new data set $D^J$ is $w_i^J = x_i^J - x_{i-1}^J$, where $x^J$ is a low-to-high ordered list of $N - 1$ uniformly distributed random numbers on $[0, 1]$, preceded by 0 and succeeded by 1. The distributions of a parameter inferred from considering many such data sets $D^J$ are then interpretable as posterior distributions on that parameter.
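The reweighting scheme can be sketched for the mean as follows. The data and the helper name `bayesian_bootstrap_means` are hypothetical. Each draw sorts N − 1 uniforms, pads them with 0 and 1, and uses the N gaps as weights. These gaps are equivalent to a flat Dirichlet(1, ..., 1) draw.

```python
import random
import statistics

def bayesian_bootstrap_means(data, n_draws=4000, seed=0):
    """Draws of the weighted mean under the reweighting scheme above.

    For each draw, N - 1 uniforms on [0, 1] are sorted and padded with 0 and 1;
    the N gaps between consecutive values are the weights (equivalently, a
    flat Dirichlet(1, ..., 1) draw)."""
    rng = random.Random(seed)
    n = len(data)
    draws = []
    for _ in range(n_draws):
        cuts = [0.0] + sorted(rng.random() for _ in range(n - 1)) + [1.0]
        weights = [cuts[i + 1] - cuts[i] for i in range(n)]
        draws.append(sum(w * x for w, x in zip(weights, data)))
    return draws

# Hypothetical observations (illustrative only).
data = [3.1, 4.7, 2.2, 5.9, 4.4, 3.8]
posterior = bayesian_bootstrap_means(data)
print(statistics.fmean(posterior), statistics.stdev(posterior))
```

Unlike case resampling, every observation receives a non-zero weight in every draw.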

Smooth bootstrap

Under this scheme, a small amount of (usually normally distributed) zero-centered random noise is added onto each resampled observation. This is equivalent to sampling from a kernel density estimate of the data. Assume K to be a symmetric kernel density function with unit variance. The standard kernel estimator $\hat{f}_h(x)$ of $f(x)$ is

$$\hat{f}_h(x) = \frac{1}{nh} \sum_{i=1}^{n} K\left(\frac{x - X_i}{h}\right),$$

where $h$ is the smoothing parameter. The corresponding distribution function estimator $\hat{F}_h(x)$ is

$$\hat{F}_h(x) = \int_{-\infty}^{x} \hat{f}_h(t)\,dt.$$
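A minimal sketch of the smooth bootstrap follows, assuming a Gaussian kernel K (standard normal, so unit variance). Under that assumption, sampling from $\hat{F}_h$ amounts to resampling and then adding $h \cdot N(0, 1)$ noise. The bandwidth rule $h = s\,n^{-1/5}$ and the data are illustrative assumptions, not choices made in the text. The comparison shows that the ordinary bootstrap median of an odd-sized sample can only take observed values, while the smoothed version has a continuous support.

```python
import random
import statistics

def smooth_bootstrap(data, statistic, h, n_resamples=2000, seed=0):
    """Resample with replacement, then add N(0, h^2) noise to every resampled
    value: equivalent to sampling from a Gaussian-kernel density estimate
    with bandwidth h."""
    rng = random.Random(seed)
    n = len(data)
    out = []
    for _ in range(n_resamples):
        resample = [x + h * rng.gauss(0.0, 1.0) for x in rng.choices(data, k=n)]
        out.append(statistic(resample))
    return out

# Hypothetical data; n = 9 so the ordinary bootstrap median is always a data value.
data = [24.0, 26.5, 27.1, 27.3, 27.8, 28.0, 28.4, 29.2, 31.0]
# Assumed bandwidth rule for illustration: h = s * n**(-1/5).
h = statistics.stdev(data) * len(data) ** -0.2
plain = smooth_bootstrap(data, statistics.median, h=0.0)
smooth = smooth_bootstrap(data, statistics.median, h=h)
print(len(set(plain)), len(set(smooth)))
```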

Parametric bootstrap

Based on the assumption that the original data set is a realization of a random sample from a distribution of a specific parametric type, a parametric model with parameter θ is fitted, often by maximum likelihood, and samples of random numbers are drawn from this fitted model. Usually the sample drawn has the same sample size as the original data. Then the estimate of the original function F can be written as $\hat{F} = F_{\hat{\theta}}$. This sampling process is repeated many times, as for other bootstrap methods. Considering the centered sample mean in this case, the original distribution function $F_{\theta}$ of the random sample is replaced by a bootstrap random sample with function $F_{\hat{\theta}}$, and the probability distribution of $\bar{X}_n - \mu_{\theta}$ is approximated by that of $\bar{X}^*_n - \mu^*$, where $\mu^* = \mu_{\hat{\theta}}$, which is the expectation corresponding to $F_{\hat{\theta}}$. The use of a parametric model at the sampling stage of the bootstrap methodology leads to procedures which are different from those obtained by applying basic statistical theory to inference for the same model.
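The centred-mean construction above can be sketched for one assumed parametric family, the exponential distribution, whose rate has the maximum-likelihood estimate $1/\bar{x}$. The family, the simulated data and the helper name are all assumptions for illustration. Under this model, the spread of $\bar{X}^*_n - \mu^*$ should be close to $\mu^*/\sqrt{n}$.

```python
import random
import statistics

def parametric_bootstrap_centred_means(data, n_resamples=2000, seed=0):
    """Parametric bootstrap under an assumed exponential model.

    The rate is fitted by maximum likelihood (1 / sample mean). Each replicate
    draws n values from the fitted model and returns mean(X*) - mu*, where
    mu* is the expectation of the fitted distribution."""
    rng = random.Random(seed)
    n = len(data)
    rate_hat = 1.0 / statistics.fmean(data)
    mu_star = 1.0 / rate_hat
    return [
        statistics.fmean([rng.expovariate(rate_hat) for _ in range(n)]) - mu_star
        for _ in range(n_resamples)
    ]

# Simulated data (illustrative only): 50 draws with true mean 2.
gen = random.Random(11)
data = [gen.expovariate(0.5) for _ in range(50)]
centred = parametric_bootstrap_centred_means(data)
mu_star = statistics.fmean(data)
print(statistics.stdev(centred), mu_star / len(data) ** 0.5)
```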

Resampling residuals

Another approach to bootstrapping in regression problems is to resample residuals. The method proceeds as follows.

- Fit the model and retain the fitted values $\hat{y}_i$ and the residuals $\hat{\varepsilon}_i = y_i - \hat{y}_i$, $(i = 1, \ldots, n)$.

- For each pair, (xi, yi), in which xi is the (possibly multivariate) explanatory variable, add a randomly resampled residual, $\hat{\varepsilon}_j$, to the fitted value $\hat{y}_i$. In other words, create synthetic response variables $y_i^* = \hat{y}_i + \hat{\varepsilon}_j$, where j is selected randomly from the list (1, ..., n) for every i.

- Refit the model using the fictitious response variables $y_i^*$, and retain the quantities of interest (often the parameters, $\hat{\mu}_i^*$, estimated from the synthetic $y_i^*$).

- Repeat steps 2 and 3 a large number of times.

This scheme has the advantage that it retains the information in the explanatory variables. However, a question arises as to which residuals to resample. Raw residuals are one option; another is studentized residuals (in linear regression). Although there are arguments in favor of using studentized residuals, in practice it often makes little difference, and it is easy to compare the results of both schemes.
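The four steps can be sketched for a straight-line model using raw residuals. The simulated data and the helper names `fit_line` and `residual_bootstrap_slopes` are hypothetical. Unlike case resampling, the x values are identical in every replicate.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def residual_bootstrap_slopes(xs, ys, n_resamples=2000, seed=0):
    """Resampling raw residuals: the x values are kept fixed throughout."""
    rng = random.Random(seed)
    a, b = fit_line(xs, ys)                               # step 1: fit
    fitted = [a + b * x for x in xs]
    resid = [y - f for y, f in zip(ys, fitted)]
    slopes = []
    for _ in range(n_resamples):                          # step 4: repeat
        y_star = [f + rng.choice(resid) for f in fitted]  # step 2: synthetic y*
        slopes.append(fit_line(xs, y_star)[1])            # step 3: refit
    return slopes

# Simulated data (illustrative only): y = 1 + 0.5x + standard normal noise.
gen = random.Random(5)
xs = [float(i) for i in range(1, 31)]
ys = [1.0 + 0.5 * x + gen.gauss(0.0, 1.0) for x in xs]
slopes = residual_bootstrap_slopes(xs, ys)
print(fit_line(xs, ys)[1], statistics.stdev(slopes))
```

Swapping in studentized residuals would only change how `resid` is computed.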

Gaussian process regression bootstrap

When data are temporally correlated, straightforward bootstrapping destroys the inherent correlations. This method uses Gaussian process regression (GPR) to fit a probabilistic model from which replicates may then be drawn. GPR is a Bayesian non-linear regression method. A Gaussian process (GP) is a collection of random variables, any finite number of which have a joint Gaussian (normal) distribution. A GP is defined by a mean function and a covariance function, which specify the mean vectors and covariance matrices for each finite collection of the random variables.

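Regression model:

$$y(x) = f(x) + \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, \sigma^2),$$

- $\varepsilon$ is a noise term.

Gaussian process prior:

For any finite collection of variables, x1, ..., xn, the function outputs $f(x_1), \ldots, f(x_n)$ are jointly distributed according to a multivariate Gaussian with mean $m = [m(x_1), \ldots, m(x_n)]^\top$ and covariance matrix $(K)_{ij} = k(x_i, x_j)$.

Assume $f(x) \sim \mathcal{GP}(m, k)$. Then $y(x) \sim \mathcal{GP}(m, l)$,

where $l(x_i, x_j) = k(x_i, x_j) + \sigma^2 \delta(x_i, x_j)$, and $\delta(x_i, x_j)$ is the standard Kronecker delta function.

Gaussian process posterior:

According to the GP prior, for observed inputs $x_1, \ldots, x_r$ we get

- $[y(x_1), \ldots, y(x_r)]^\top \sim \mathcal{N}(m_0, K_0 + \sigma^2 I_r)$,

where $m_0 = [m(x_1), \ldots, m(x_r)]^\top$ and $(K_0)_{ij} = k(x_i, x_j)$.

Let $x_1^*, \ldots, x_s^*$ be another finite collection of variables. Then

- $[y(x_1), \ldots, y(x_r), f(x_1^*), \ldots, f(x_s^*)]^\top \sim \mathcal{N}\left( \begin{bmatrix} m_0 \\ m_* \end{bmatrix}, \begin{bmatrix} K_0 + \sigma^2 I_r & K_* \\ K_*^\top & K_{**} \end{bmatrix} \right)$,

where $m_* = [m(x_1^*), \ldots, m(x_s^*)]^\top$, $(K_{**})_{ij} = k(x_i^*, x_j^*)$, and $(K_*)_{ij} = k(x_i, x_j^*)$.

According to the equations above, the outputs y are also jointly distributed according to a multivariate Gaussian. Thus,

$$[f(x_1^*), \ldots, f(x_s^*)]^\top \mid y \sim \mathcal{N}(m_{\text{post}}, K_{\text{post}}),$$

where $y = [y_1, \ldots, y_r]^\top$, $m_{\text{post}} = m_* + K_*^\top (K_0 + \sigma^2 I_r)^{-1} (y - m_0)$, $K_{\text{post}} = K_{**} - K_*^\top (K_0 + \sigma^2 I_r)^{-1} K_*$, and $I_r$ is the $r \times r$ identity matrix.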

Wild bootstrap

The wild bootstrap, proposed originally by Wu (1986), is suited to models that exhibit heteroskedasticity. As in the residual bootstrap, the idea is to leave the regressors at their sample values but to resample the response variable based on the residuals. That is, for each replicate, one computes a new $y_i^*$ based on

$$y_i^* = \hat{y}_i + \hat{\varepsilon}_i v_i,$$

so the residuals are randomly multiplied by a random variable $v_i$ with mean 0 and variance 1. For most distributions of $v_i$ (but not Mammen's), this method assumes that the 'true' residual distribution is symmetric, and it can offer advantages over simple residual sampling for smaller sample sizes. Different forms are used for the random variable $v_i$, such as

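- A distribution suggested by Mammen (1993):

$$v_i = \begin{cases} -(\sqrt{5} - 1)/2 & \text{with probability } (\sqrt{5} + 1)/(2\sqrt{5}), \\ (\sqrt{5} + 1)/2 & \text{with probability } (\sqrt{5} - 1)/(2\sqrt{5}). \end{cases}$$

- Approximately, Mammen's distribution is:

$$v_i = \begin{cases} -0.6180 & \text{with probability } 0.7236, \\ +1.6180 & \text{with probability } 0.2764. \end{cases}$$

- Or the simpler distribution, linked to the Rademacher distribution:

$$v_i = \begin{cases} -1 & \text{with probability } 1/2, \\ +1 & \text{with probability } 1/2. \end{cases}$$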
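The wild bootstrap for a straight-line model can be sketched with either Mammen's or the Rademacher multipliers. The heteroskedastic data are simulated, and the helper names are hypothetical. Each residual stays attached to its own observation, so the pattern of changing variance is preserved.

```python
import math
import random
import statistics

SQRT5 = math.sqrt(5.0)
# Mammen's two-point distribution as (value, probability) pairs, as listed above.
MAMMEN = ((-(SQRT5 - 1) / 2, (SQRT5 + 1) / (2 * SQRT5)),
          ((SQRT5 + 1) / 2, (SQRT5 - 1) / (2 * SQRT5)))

def mammen(rng):
    (v_low, p_low), (v_high, _) = MAMMEN
    return v_low if rng.random() < p_low else v_high

def rademacher(rng):
    return rng.choice((-1.0, 1.0))

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b

def wild_bootstrap_slopes(xs, ys, draw_v, n_resamples=2000, seed=0):
    """Each residual stays with its own observation and is multiplied by v_i."""
    rng = random.Random(seed)
    a, b = fit_line(xs, ys)
    fitted = [a + b * x for x in xs]
    resid = [y - f for y, f in zip(ys, fitted)]
    slopes = []
    for _ in range(n_resamples):
        y_star = [f + e * draw_v(rng) for f, e in zip(fitted, resid)]
        slopes.append(fit_line(xs, y_star)[1])
    return slopes

# Simulated heteroskedastic data (illustrative only): noise sd grows with x.
gen = random.Random(9)
xs = [float(i) for i in range(1, 41)]
ys = [1.0 + 0.5 * x + gen.gauss(0.0, 0.1 * x) for x in xs]
slopes_mammen = wild_bootstrap_slopes(xs, ys, mammen)
slopes_rademacher = wild_bootstrap_slopes(xs, ys, rademacher)
print(statistics.stdev(slopes_mammen), statistics.stdev(slopes_rademacher))
```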

Block bootstrap

The block bootstrap is used when the data, or the errors in a model, are correlated. In this case, a simple case or residual resampling will fail, as it is not able to replicate the correlation in the data. The block bootstrap tries to replicate the correlation by resampling inside blocks of data (see Blocking (statistics)). The block bootstrap has been used mainly with data correlated in time (i.e. time series) but can also be used with data correlated in space, or among groups (so-called cluster data).

Time series: Simple block bootstrap

In the (simple) block bootstrap, the variable of interest is split into non-overlapping blocks.

Time series: Moving block bootstrap

In the moving block bootstrap, introduced by Künsch (1989), data is split into n − b + 1 overlapping blocks of length b: Observation 1 to b will be block 1, observation 2 to b + 1 will be block 2, etc. Then from these n − b + 1 blocks, n/b blocks will be drawn at random with replacement. Then aligning these n/b blocks in the order they were picked, will give the bootstrap observations.
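One replicate of the moving block bootstrap, and of the simple non-overlapping variant described earlier, can be sketched as below. The function names are hypothetical. The sketch assumes that the block length b divides n. If it does not, the n // b drawn blocks produce a replicate slightly shorter than the original series.

```python
import random

def moving_block_bootstrap(series, b, seed=0):
    """One moving-block-bootstrap replicate: build the n - b + 1 overlapping
    blocks of length b, draw n // b of them with replacement, and concatenate
    them in the order drawn."""
    rng = random.Random(seed)
    n = len(series)
    blocks = [series[i:i + b] for i in range(n - b + 1)]
    out = []
    for _ in range(n // b):
        out.extend(rng.choice(blocks))
    return out

def simple_block_bootstrap(series, b, seed=0):
    """The same procedure using only the n // b non-overlapping blocks."""
    rng = random.Random(seed)
    blocks = [series[i:i + b] for i in range(0, len(series) - b + 1, b)]
    out = []
    for _ in range(len(series) // b):
        out.extend(rng.choice(blocks))
    return out

# Hypothetical series; the block length b = 3 divides n = 12 here.
series = list(range(12))
print(moving_block_bootstrap(series, b=3, seed=1))
print(simple_block_bootstrap(series, b=3, seed=1))
```

Within each block the original ordering, and therefore the short-range dependence, is preserved. The joins between blocks are where dependence is broken.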

This bootstrap works with dependent data; however, the bootstrapped observations will no longer be stationary by construction. It has been shown that varying the block length randomly can avoid this problem. This method is known as the stationary bootstrap. Other related modifications of the moving block bootstrap are the Markovian bootstrap and a stationary bootstrap method that matches subsequent blocks based on standard deviation matching.

Time series: Maximum entropy bootstrap

Vinod (2006) presents a method that bootstraps time series data using maximum entropy principles satisfying the ergodic theorem with mean-preserving and mass-preserving constraints. There is an R package, meboot, that implements the method, which has applications in econometrics and computer science.

Cluster data: block bootstrap

Cluster data describes data where many observations per unit are observed. This could be observing many firms in many states or observing students in many classes. In such cases, the correlation structure is simplified, and one usually assumes that data are correlated within a group/cluster but independent between groups/clusters. The structure of the block bootstrap is easily obtained (where the block just corresponds to the group), and usually only the groups are resampled, while the observations within the groups are left unchanged. Cameron et al. (2008) discuss this for clustered errors in linear regression.
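Resampling whole clusters can be sketched as follows. The class names and scores are hypothetical, and so is the helper `cluster_bootstrap`. Groups are drawn with replacement, and the observations inside each drawn group are kept together unchanged.

```python
import random
import statistics

def cluster_bootstrap(clusters, statistic, n_resamples=2000, seed=0):
    """Resample whole clusters with replacement; observations inside a
    cluster are kept together and unchanged."""
    rng = random.Random(seed)
    keys = list(clusters)
    out = []
    for _ in range(n_resamples):
        chosen = rng.choices(keys, k=len(keys))
        pooled = [obs for key in chosen for obs in clusters[key]]
        out.append(statistic(pooled))
    return out

# Hypothetical test scores grouped by class (illustrative only).
classes = {"A": [71, 74, 69], "B": [88, 85, 90, 87], "C": [62, 65], "D": [79, 81, 77]}
boot = cluster_bootstrap(classes, statistics.fmean)
print(statistics.stdev(boot))
```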

Methods for improving computational efficiency

The bootstrap is a powerful technique, although it may require substantial computing resources in both time and memory. Some techniques have been developed to reduce this burden. They can generally be combined with many of the different types of bootstrap schemes and various choices of statistics.

Parallel processing

Most bootstrap methods are embarrassingly parallel algorithms. That is, the statistic of interest for each bootstrap sample does not depend on other bootstrap samples. Such computations can therefore be performed on separate CPUs or compute nodes with the results from the separate nodes eventually aggregated for final analysis.

Poisson bootstrap

The nonparametric bootstrap samples items from a list of size n with counts drawn from a multinomial distribution. If $W_i$ denotes the number of times element i is included in a given bootstrap sample, then each $W_i$ is distributed as a binomial distribution with n trials and mean 1, but $W_i$ is not independent of $W_j$ for $j \neq i$.

The Poisson bootstrap instead draws samples assuming all $W_i$'s are independently and identically distributed as Poisson variables with mean 1. The rationale is that the limit of the binomial distribution is Poisson:

$$\lim_{n \to \infty} \operatorname{Binomial}(n, 1/n) = \operatorname{Poisson}(1).$$

The Poisson bootstrap had been proposed by Hanley and MacGibbon as potentially useful for non-statisticians using software like SAS and SPSS, which lacked the bootstrap packages of R and S-Plus programming languages. The same authors report that for large enough n, the results are relatively similar to the nonparametric bootstrap estimates but go on to note the Poisson bootstrap has seen minimal use in applications.

Another proposed advantage of the Poisson bootstrap is that the independence of the $W_i$ makes the method easier to apply for large datasets that must be processed as streams.
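The streaming advantage can be sketched as a single pass that keeps only running sums per replicate. The data are simulated, and the helper names are hypothetical. Poisson(1) counts are drawn with Knuth's multiplication method so that the sketch needs no external library. Because the weights are independent, the total count in a replicate is random rather than fixed at n.

```python
import math
import random
import statistics

def poisson1(rng):
    """Poisson(1) draw via Knuth's multiplication method."""
    limit = math.exp(-1.0)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k

def poisson_bootstrap_means(stream, n_replicates=200, seed=0):
    """One pass over a stream: each element gets an independent Poisson(1)
    count in every replicate, so only running sums are stored."""
    rng = random.Random(seed)
    sums = [0.0] * n_replicates
    counts = [0] * n_replicates
    for x in stream:
        for r in range(n_replicates):
            w = poisson1(rng)
            sums[r] += w * x
            counts[r] += w
    # A replicate with total count 0 has no defined mean and is dropped.
    return [s / c for s, c in zip(sums, counts) if c > 0]

# Simulated data (illustrative only), consumed as a one-pass iterator.
gen = random.Random(3)
data = [gen.gauss(10.0, 2.0) for _ in range(500)]
boot = poisson_bootstrap_means(iter(data))
print(statistics.fmean(boot), statistics.stdev(boot))
```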

A way to improve on the Poisson bootstrap, termed "sequential bootstrap", is by taking the first samples so that the proportion of unique values is ≈0.632 of the original sample size n. This provides a distribution with main empirical characteristics being within a distance of . Empirical investigation has shown this method can yield good results. This is related to the reduced bootstrap method.

Bag of Little Bootstraps

For massive data sets, it is often computationally prohibitive to hold all the sample data in memory and resample from the sample data. The Bag of Little Bootstraps (BLB) provides a method of pre-aggregating data before bootstrapping to reduce computational constraints. This works by partitioning the data set into equal-sized buckets and aggregating the data within each bucket. This pre-aggregated data set becomes the new sample data over which to draw samples with replacement. This method is similar to the Block Bootstrap, but the motivations and definitions of the blocks are very different. Under certain assumptions, the sample distribution should approximate the full bootstrapped scenario. One constraint is the number of buckets where and the authors recommend usage of as a general solution.

Choice of statistic

The bootstrap distribution of a point estimator of a population parameter has been used to produce a bootstrapped confidence interval for the parameter's true value if the parameter can be written as a function of the population's distribution.

Population parameters are estimated with many point estimators. Popular families of point-estimators include mean-unbiased minimum-variance estimators, median-unbiased estimators, Bayesian estimators (for example, the posterior distribution's mode, median, mean), and maximum-likelihood estimators.

A Bayesian point estimator and a maximum-likelihood estimator have good performance when the sample size is infinite, according to asymptotic theory. For practical problems with finite samples, other estimators may be preferable. Asymptotic theory suggests techniques that often improve the performance of bootstrapped estimators; the bootstrapping of a maximum-likelihood estimator may often be improved using transformations related to pivotal quantities.

Deriving confidence intervals from the bootstrap distribution

The bootstrap distribution of a parameter-estimator is often used to calculate confidence intervals for its population-parameter. A variety of methods for constructing the confidence intervals have been proposed, although there is disagreement about which method is the best.

Desirable properties

The survey of bootstrap confidence interval methods of DiCiccio and Efron and consequent discussion lists several desired properties of confidence intervals, which generally are not all simultaneously met.

- Transformation invariant - the confidence intervals from bootstrapping transformed data (e.g., by taking the logarithm) would ideally be the same as transforming the confidence intervals from bootstrapping the untransformed data.

- Confidence intervals should be valid or consistent, i.e., the probability that a parameter lies in a confidence interval with nominal level $1 - \alpha$ should be equal to, or at least converge in probability to, $1 - \alpha$. The latter criterion is both refined and expanded using the framework of Hall. The refinements distinguish between methods based on how fast the true coverage probability approaches the nominal value: a method is (using DiCiccio and Efron's terminology) first-order accurate if the error term in the approximation is $O(1/\sqrt{n})$ and second-order accurate if the error term is $O(1/n)$. In addition, methods are distinguished by the speed with which the estimated bootstrap critical point converges to the true (unknown) point, and a method is second-order correct when this rate is $O_p(n^{-3/2})$.

- Gleser, in the discussion of the paper, argues that a limitation of the asymptotic descriptions in the previous bullet is that the $O(\cdot)$ terms are not necessarily uniform in the parameters or the true distribution.

Bias, asymmetry, and confidence intervals

- Bias: The bootstrap distribution and the sample may disagree systematically, in which case bias may occur.

- If the bootstrap distribution of an estimator is symmetric, then percentile confidence intervals are often used; such intervals are appropriate especially for median-unbiased estimators of minimum risk (with respect to an absolute loss function). Bias in the bootstrap distribution will lead to bias in the confidence interval.

- Otherwise, if the bootstrap distribution is non-symmetric, then percentile confidence intervals are often inappropriate.

Methods for bootstrap confidence intervals

There are several methods for constructing confidence intervals from the bootstrap distribution of a real parameter:

- Basic bootstrap, also known as the reverse percentile interval. The basic bootstrap is a simple scheme to construct the confidence interval: one simply takes the empirical quantiles from the bootstrap distribution of the parameter (see Davison and Hinkley 1997, equ. 5.6 p. 194):

$$\left(2\hat{\theta} - \theta^*_{(1-\alpha/2)},\; 2\hat{\theta} - \theta^*_{(\alpha/2)}\right)$$

- where $\theta^*_{(1-\alpha/2)}$ denotes the $1 - \alpha/2$ percentile of the bootstrapped coefficients $\theta^*$.

- Percentile bootstrap. The percentile bootstrap proceeds in a similar way to the basic bootstrap, using percentiles of the bootstrap distribution, but with a different formula (note the inversion of the left and right quantiles):

$$\left(\theta^*_{(\alpha/2)},\; \theta^*_{(1-\alpha/2)}\right)$$

- where $\theta^*_{(1-\alpha/2)}$ denotes the $1 - \alpha/2$ percentile of the bootstrapped coefficients $\theta^*$.

- See Davison and Hinkley (1997, equ. 5.18 p. 203) and Efron and Tibshirani (1993, equ 13.5 p. 171).

- This method can be applied to any statistic. It will work well in cases where the bootstrap distribution is symmetrical and centered on the observed statistic, and where the sample statistic is median-unbiased and has maximum concentration (or minimum risk with respect to an absolute value loss function). With small sample sizes (i.e., fewer than 50), the basic / reverse percentile and percentile confidence intervals for the variance statistic, for instance, will be too narrow: with a sample of 20 points, a 90% confidence interval will include the true variance only 78% of the time. The basic / reverse percentile confidence intervals are easier to justify mathematically, but they are less accurate in general than percentile confidence intervals, and some authors discourage their use.

- Studentized bootstrap. The studentized bootstrap, also called bootstrap-t, is computed analogously to the standard confidence interval, but replaces the quantiles from the normal or Student approximation with the quantiles from the bootstrap distribution of the Student's t-test (see Davison and Hinkley 1997, equ. 5.7 p. 194 and Efron and Tibshirani 1993 equ 12.22, p. 160):

$$\left(\hat{\theta} - t^*_{(1-\alpha/2)} \cdot \widehat{\operatorname{se}}_{\theta},\; \hat{\theta} - t^*_{(\alpha/2)} \cdot \widehat{\operatorname{se}}_{\theta}\right)$$

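- where $t^*_{(1-\alpha/2)}$ denotes the $1 - \alpha/2$ percentile of the bootstrapped Student's t-test $t^* = (\hat{\theta}^* - \hat{\theta})/\widehat{\operatorname{se}}(\hat{\theta}^*)$, and $\widehat{\operatorname{se}}_{\theta}$ is the estimated standard error of the coefficient in the original model.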

- The studentized test enjoys optimal properties as the statistic that is bootstrapped is pivotal (i.e. it does not depend on nuisance parameters as the t-test follows asymptotically a N(0,1) distribution), unlike the percentile bootstrap.

- Bias-corrected bootstrap – adjusts for bias in the bootstrap distribution.

- Accelerated bootstrap – The bias-corrected and accelerated (BCa) bootstrap, by Efron (1987), adjusts for both bias and skewness in the bootstrap distribution. This approach is accurate in a wide variety of settings, has reasonable computation requirements, and produces reasonably narrow intervals.
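The basic, percentile and studentized intervals for a mean can be sketched together. The data are simulated and skewed. The helper names are hypothetical, and the linear-interpolation quantile rule is one assumed convention among several. BCa is not implemented here. For the mean, each resample's standard error is simply its sample standard deviation divided by $\sqrt{n}$.

```python
import random
import statistics

def quantile(sorted_vals, q):
    """Linear-interpolation quantile of an already sorted list."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])

def bootstrap_intervals(data, alpha=0.05, n_resamples=4000, seed=0):
    """Basic, percentile and studentized (bootstrap-t) intervals for the mean."""
    rng = random.Random(seed)
    n = len(data)
    theta_hat = statistics.fmean(data)
    se_hat = statistics.stdev(data) / n ** 0.5
    thetas, ts = [], []
    for _ in range(n_resamples):
        s = rng.choices(data, k=n)
        m = statistics.fmean(s)
        thetas.append(m)
        se_star = statistics.stdev(s) / n ** 0.5
        if se_star > 0:  # skip degenerate resamples with zero spread
            ts.append((m - theta_hat) / se_star)
    thetas.sort()
    ts.sort()
    lo_q, hi_q = quantile(thetas, alpha / 2), quantile(thetas, 1 - alpha / 2)
    return {
        "basic": (2 * theta_hat - hi_q, 2 * theta_hat - lo_q),
        "percentile": (lo_q, hi_q),
        "studentized": (theta_hat - quantile(ts, 1 - alpha / 2) * se_hat,
                        theta_hat - quantile(ts, alpha / 2) * se_hat),
    }

# Simulated skewed data (illustrative only).
gen = random.Random(3)
data = [gen.expovariate(1.0) for _ in range(30)]
intervals = bootstrap_intervals(data)
for name, (lo, hi) in intervals.items():
    print(f"{name:12s} ({lo:.3f}, {hi:.3f})")
```

The basic and percentile intervals always have the same width, because one is the reflection of the other about $\hat{\theta}$.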

Bootstrap hypothesis testing


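Efron and Tibshirani suggest the following algorithm for comparing the means of two independent samples: Let $x_1, \ldots, x_n$ be a random sample from distribution F with sample mean $\bar{x}$ and sample variance $\sigma_x^2$. Let $y_1, \ldots, y_m$ be another, independent random sample from distribution G with mean $\bar{y}$ and variance $\sigma_y^2$.

- Calculate the test statistic $t = \dfrac{\bar{x} - \bar{y}}{\sqrt{\sigma_x^2/n + \sigma_y^2/m}}$.

- Create two new data sets whose values are $x_i' = x_i - \bar{x} + \bar{z}$ and $y_i' = y_i - \bar{y} + \bar{z}$, where $\bar{z}$ is the mean of the combined sample.

- Draw a random sample ($x_i^*$) of size $n$ with replacement from $x_i'$ and another random sample ($y_i^*$) of size $m$ with replacement from $y_i'$.

- Calculate the test statistic $t^* = \dfrac{\bar{x}^* - \bar{y}^*}{\sqrt{\sigma_x^{*2}/n + \sigma_y^{*2}/m}}$.

- Repeat steps 3 and 4 $B$ times (e.g. $B = 1000$) to collect $B$ values of the test statistic.

- Estimate the p-value as $p = \frac{1}{B} \sum_{i=1}^{B} I\{t_i^* \geq t\}$, where $I(\text{condition}) = 1$ when the condition is true and 0 otherwise.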
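The algorithm can be sketched in Python. The measurements are hypothetical, and so are the helper names `t_stat` and `bootstrap_mean_test`. The p-value is one-sided, matching the indicator $I\{t_i^* \geq t\}$ above. Shifting both samples to the combined mean makes the null hypothesis of equal means true for the resampled data. The sketch does not guard against the extremely unlikely case where both resamples have zero variance.

```python
import random
import statistics

def t_stat(x, y):
    """(mean(x) - mean(y)) / sqrt(var_x / n + var_y / m), sample variances."""
    return (statistics.fmean(x) - statistics.fmean(y)) / (
        statistics.variance(x) / len(x) + statistics.variance(y) / len(y)
    ) ** 0.5

def bootstrap_mean_test(x, y, n_boot=1000, seed=0):
    """One-sided bootstrap p-value for mean(F) > mean(G), following the steps above."""
    rng = random.Random(seed)
    t_obs = t_stat(x, y)                                   # step 1
    x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
    z_bar = statistics.fmean(x + y)                        # combined-sample mean
    x_shift = [xi - x_bar + z_bar for xi in x]             # step 2: impose the null
    y_shift = [yi - y_bar + z_bar for yi in y]
    exceed = 0
    for _ in range(n_boot):                                # step 5: repeat B times
        x_star = rng.choices(x_shift, k=len(x))            # step 3
        y_star = rng.choices(y_shift, k=len(y))
        if t_stat(x_star, y_star) >= t_obs:                # step 4 and indicator
            exceed += 1
    return exceed / n_boot                                 # step 6

# Hypothetical measurements (illustrative only).
x = [5.1, 5.8, 6.3, 5.5, 6.0, 5.9, 6.4, 5.2, 5.7, 6.1]
y = [4.2, 4.8, 4.5, 5.0, 4.1, 4.6, 4.9, 4.3, 4.7, 4.4]
print(bootstrap_mean_test(x, y), bootstrap_mean_test(y, x))
```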

Example applications


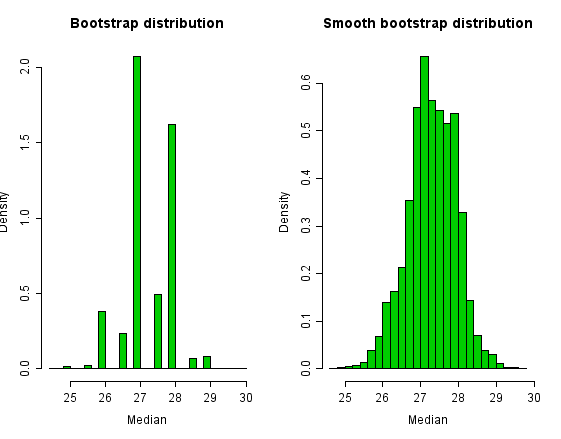

Smoothed bootstrap

In 1878, Simon Newcomb took observations on the speed of light. The data set contains two outliers, which greatly influence the sample mean. (The sample mean need not be a consistent estimator of any population mean, because a heavy-tailed distribution need not have a mean.) A well-defined and robust statistic for the central tendency is the sample median, which is consistent and median-unbiased for the population median.

The bootstrap distribution for Newcomb's data appears below. We can reduce the discreteness of the bootstrap distribution by adding a small amount of random noise to each bootstrap sample. A conventional choice is to add noise with a standard deviation of $\sigma/\sqrt{n}$ for a sample size n; this noise is often drawn from a Student's t-distribution with n − 1 degrees of freedom. This results in an approximately unbiased estimator for the variance of the sample mean: samples taken from the bootstrap distribution have, on average, a variance equal to the population variance.

Histograms of the bootstrap distribution and the smoothed bootstrap distribution appear below. The bootstrap distribution of the sample median takes only a small number of distinct values, whereas the smoothed bootstrap distribution has a richer support. However, whether the smoothed or the standard bootstrap is preferable must be decided case by case; it has been shown to depend on both the underlying distribution function and the quantity being estimated.

In this example, the bootstrapped 95% (percentile) confidence interval for the population median is (26, 28.5), which is close to the interval (25.98, 28.46) obtained with the smoothed bootstrap.
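The contrast between the standard and the smoothed bootstrap of the median can be reproduced with a short Python sketch. The data below are made-up illustrative numbers, not Newcomb's measurements. The noise is a Student-t variate with n − 1 degrees of freedom scaled by $\sigma/\sqrt{n}$, with $\sigma$ estimated by the sample standard deviation; this is one reading of the convention described above (a t variate scaled this way has a standard deviation slightly larger than $\sigma/\sqrt{n}$ for small n). All function names are hypothetical.

```python
import math
import random
import statistics


def student_t(rng, df):
    """Draw a Student-t variate as Z / sqrt(V / df), with V ~ chi-square(df)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) = Gamma(shape df/2, scale 2)
    return z / math.sqrt(v / df)


def bootstrap_medians(data, B=2000, smooth=False, seed=0):
    """Medians of B bootstrap resamples, optionally smoothed with t noise."""
    rng = random.Random(seed)
    n = len(data)
    scale = statistics.stdev(data) / math.sqrt(n)  # sigma / sqrt(n)
    medians = []
    for _ in range(B):
        resample = rng.choices(data, k=n)  # ordinary resample with replacement
        if smooth:
            # Jitter every resampled value with scaled t(n - 1) noise.
            resample = [v + scale * student_t(rng, n - 1) for v in resample]
        medians.append(statistics.median(resample))
    return medians


def percentile_interval(values, alpha=0.05):
    """Simple percentile interval from a list of bootstrap replicates."""
    s = sorted(values)
    m = len(s)
    return s[int(alpha / 2 * (m - 1))], s[math.ceil((1 - alpha / 2) * (m - 1))]


data = [24, 26, 27, 28, 28, 29, 30, 25, 27, 26, 31, 28, 27, -2, 29]  # made-up values
plain = bootstrap_medians(data)
smoothed = bootstrap_medians(data, smooth=True)
print(len(set(plain)), percentile_interval(plain))
print(len(set(smoothed)), percentile_interval(smoothed))
```

With an odd sample size every plain bootstrap median is one of the observed values, so the plain distribution has at most as many distinct values as the data, while the smoothed replicates are almost all distinct.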

Relation to other approaches to inference

Relationship to other resampling methods

The bootstrap is distinguished from:

- the jackknife procedure, used to estimate biases of sample statistics and to estimate variances, and

- cross-validation, in which the parameters (e.g., regression weights, factor loadings) that are estimated in one subsample are applied to another subsample.

For more details see resampling.

Bootstrap aggregating (bagging) is a meta-algorithm based on averaging model predictions obtained from models trained on multiple bootstrap samples.
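A minimal Python sketch of bagging for a single prediction, assuming a least-squares straight line as the base model; the base model and the function names `fit_line` and `bagged_predict` are illustrative assumptions, not a reference implementation.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def bagged_predict(xs, ys, x_new, B=50, seed=0):
    """Average the predictions at x_new of B models fit to bootstrap samples."""
    rng = random.Random(seed)
    pairs = list(zip(xs, ys))
    predictions = []
    while len(predictions) < B:
        sample = rng.choices(pairs, k=len(pairs))  # resample (x, y) pairs
        bx = [x for x, _ in sample]
        by = [y for _, y in sample]
        if len(set(bx)) < 2:  # slope undefined when all x coincide; redraw
            continue
        a, b = fit_line(bx, by)
        predictions.append(a + b * x_new)
    return statistics.fmean(predictions)  # aggregation step: plain average


xs = [float(i) for i in range(10)]
ys = [2 * x + 1 for x in xs]
print(bagged_predict(xs, ys, 10.0))
```

Bagging is usually applied to unstable learners such as decision trees, where averaging over bootstrap fits reduces variance; a straight line is used here only to keep the sketch short.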

U-statistics

Main article: U-statistic

In situations where an obvious statistic can be devised to measure a required characteristic using only a small number, r, of data items, a corresponding statistic based on the entire sample can be formulated. Given an r-sample statistic, one can create an n-sample statistic by something similar to bootstrapping: taking the average of the statistic over all subsamples of size r. The result is a U-statistic, which has certain good properties; for example, it is unbiased whenever the r-sample statistic is. The sample mean and sample variance are of this form, for r = 1 and r = 2 respectively.
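The construction can be checked numerically with a short Python sketch, assuming the kernels $h(a) = a$ for $r = 1$ and $h(a, b) = (a - b)^2/2$ for $r = 2$; the helper name `u_statistic` is hypothetical.

```python
import itertools
import statistics


def u_statistic(data, kernel, r):
    """Average an r-sample kernel over all size-r subsets of the data."""
    subsets = list(itertools.combinations(data, r))
    return sum(kernel(*s) for s in subsets) / len(subsets)


data = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]
u_mean = u_statistic(data, lambda a: a, 1)                    # r = 1: sample mean
u_var = u_statistic(data, lambda a, b: (a - b) ** 2 / 2, 2)   # r = 2: sample variance
print(u_mean, statistics.fmean(data))
print(u_var, statistics.variance(data))
```

Averaging $(x_i - x_j)^2/2$ over all pairs reproduces the usual unbiased sample variance with divisor $n - 1$, because $\sum_{i<j}(x_i - x_j)^2 = n\sum_i (x_i - \bar{x})^2$.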

Asymptotic theory

Under certain conditions, the bootstrap has desirable asymptotic properties. The asymptotic properties most often described are weak convergence (consistency) of the sample paths of the bootstrap empirical process and the validity of confidence intervals derived from the bootstrap. This section describes the convergence of the empirical bootstrap.

Stochastic convergence

This paragraph summarizes more complete descriptions of stochastic convergence in van der Vaart and Wellner and in Kosorok. The bootstrap defines a stochastic process, a collection of random variables indexed by some set $T$, where $T$ is typically the real line ($\mathbb{R}$) or a family of functions. Processes of interest are those with bounded sample paths, i.e., sample paths in L-infinity ($\ell^\infty(T)$), the set of all uniformly bounded functions from $T$ to $\mathbb{R}$. When equipped with the uniform distance, $\ell^\infty(T)$ is a metric space, and when $T = \mathbb{R}$, two subspaces of $\ell^\infty(T)$ are of particular interest: $C[0,1]$, the space of all continuous functions from $T$ to the unit interval $[0,1]$, and $D[0,1]$, the space of all càdlàg functions from $T$ to $[0,1]$. This is because $C[0,1]$ contains the distribution functions for all continuous random variables, and $D[0,1]$ contains the distribution functions for all random variables. Statements about the consistency of the bootstrap are statements about the convergence of the sample paths of the bootstrap process as random elements of the metric space $\ell^\infty(T)$ or some subspace thereof, especially $C[0,1]$ or $D[0,1]$.

Consistency

Horowitz, in a 2019 review, defines consistency as follows: the bootstrap estimator $G_n(\cdot) = G_n(\cdot, F_n)$ is consistent [for a statistic $T_n$] if, for each $F_0$, $\sup_{\tau} |G_n(\tau, F_n) - G_\infty(\tau, F_0)|$ converges in probability to 0 as $n \to \infty$, where $F_n$ is the distribution of the statistic of interest in the original sample, $F_0$ is the true but unknown distribution of the statistic, $G_\infty(\tau, F_0)$ is the asymptotic distribution function of $T_n$, and $\tau$ is the indexing variable in the distribution function, i.e., $P(T_n \leq \tau) = G_n(\tau, F_0)$. This is sometimes more specifically called consistency relative to the Kolmogorov–Smirnov distance.

Horowitz goes on to recommend using a theorem from Mammen that provides easier-to-check necessary and sufficient conditions for consistency for statistics of a certain common form. In particular, let $\{X_i : i = 1, \ldots, n\}$ be the random sample. If $T_n = \frac{\sum_{i=1}^n g_n(X_i) - t_n}{\sigma_n}$ for a sequence of numbers $t_n$ and $\sigma_n$, then the bootstrap estimate of the cumulative distribution function estimates the empirical cumulative distribution function if and only if $T_n$ converges in distribution to the standard normal distribution.

Strong consistency

Convergence in (outer) probability as described above is also called weak consistency. It can also be shown, under slightly stronger assumptions, that the bootstrap is strongly consistent, where convergence in (outer) probability is replaced by (outer) almost sure convergence. When only one type of consistency is described, it is typically weak consistency. This is adequate for most statistical applications, since it implies that confidence bands derived from the bootstrap are asymptotically valid.

Showing consistency using the central limit theorem

In simpler cases, it is possible to use the central limit theorem directly to show the consistency of the bootstrap procedure for estimating the distribution of the sample mean.

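Specifically, let us consider $X_{n1}, \ldots, X_{nn}$ independent identically distributed random variables with $\mathbb{E}[X_{n1}] = \mu$ and $\operatorname{Var}[X_{n1}] = \sigma^2 < \infty$ for each $n \geq 1$. Let $\bar{X}_n = n^{-1}(X_{n1} + \cdots + X_{nn})$. In addition, for each $n \geq 1$, conditional on $X_{n1}, \ldots, X_{nn}$, let $X_{n1}^*, \ldots, X_{nn}^*$ be independent random variables with distribution equal to the empirical distribution of $X_{n1}, \ldots, X_{nn}$. This is the sequence of bootstrap samples.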

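Then it can be shown that

$$\sup_{\tau \in \mathbb{R}} \left| P^*\left( \frac{\sqrt{n}(\bar{X}_n^* - \bar{X}_n)}{\hat{\sigma}_n} \leq \tau \right) - P\left( \frac{\sqrt{n}(\bar{X}_n - \mu)}{\sigma} \leq \tau \right) \right| \to 0 \quad \text{in probability as } n \to \infty,$$

where $P^*$ represents probability conditional on $X_{n1}, \ldots, X_{nn}$, $n \geq 1$, $\bar{X}_n^* = n^{-1}(X_{n1}^* + \cdots + X_{nn}^*)$, and $\hat{\sigma}_n^2 = n^{-1}\sum_{i=1}^n (X_{ni} - \bar{X}_n)^2$.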

To see this, note that $(X_{ni}^* - \bar{X}_n)/(\sqrt{n}\,\hat{\sigma}_n)$ satisfies the Lindeberg condition, so the CLT holds.
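Because $\sqrt{n}(\bar{X}_n - \mu)/\sigma$ converges in distribution to the standard normal, the result can be checked numerically by comparing the bootstrap distribution of $\sqrt{n}(\bar{X}_n^* - \bar{X}_n)/\hat{\sigma}_n$ with the standard normal CDF $\Phi$. The Python sketch below does this for one simulated data set. The exponential data, the $B = 2000$ resamples and the helper name are arbitrary assumptions, and $P^*$ is approximated by Monte Carlo, so the reported distance also contains simulation error of order $1/\sqrt{B}$.

```python
import math
import random
import statistics


def bootstrap_kolmogorov_distance(data, B=2000, seed=0):
    """Estimate sup_tau |P*(sqrt(n)(mean* - mean)/sigma_hat <= tau) - Phi(tau)|,
    approximating P* by B bootstrap resamples."""
    rng = random.Random(seed)
    n = len(data)
    x_bar = statistics.fmean(data)
    sigma_hat = statistics.pstdev(data)  # divisor n, as in sigma_hat_n
    roots = sorted(
        math.sqrt(n) * (statistics.fmean(rng.choices(data, k=n)) - x_bar) / sigma_hat
        for _ in range(B)
    )
    phi = statistics.NormalDist().cdf
    # The sup of |ECDF - Phi| is attained at (or just before) the jump points.
    return max(
        max(abs((i + 1) / B - phi(t)), abs(i / B - phi(t)))
        for i, t in enumerate(roots)
    )


gen = random.Random(1)
sample = [gen.expovariate(1.0) for _ in range(200)]  # skewed, finite variance
print(bootstrap_kolmogorov_distance(sample))
```

Increasing n (and B) should drive the reported distance toward 0, in line with the consistency result.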

The Glivenko–Cantelli theorem provides theoretical background for the bootstrap method.

See also

- Accuracy and precision

- Bootstrap aggregating

- Bootstrapping

- Empirical likelihood

- Imputation (statistics)

- Reliability (statistics)

- Reproducibility

- Resampling

References

- Horowitz JL (2019). "Bootstrap methods in econometrics". Annual Review of Economics. 11: 193–224. arXiv:1809.04016. doi:10.1146/annurev-economics-080218-025651.

- Efron B, Tibshirani R (1993). An Introduction to the Bootstrap. Boca Raton, FL: Chapman & Hall/CRC. ISBN 0-412-04231-2.

- Efron B (2003). "Second thoughts on the bootstrap" (PDF). Statistical Science. 18 (2): 135–140. doi:10.1214/ss/1063994968.

- Efron, B. (1979). "Bootstrap methods: Another look at the jackknife". The Annals of Statistics. 7 (1): 1–26. doi:10.1214/aos/1176344552.

- Lehmann E.L. (1992) "Introduction to Neyman and Pearson (1933) On the Problem of the Most Efficient Tests of Statistical Hypotheses". In: Breakthroughs in Statistics, Volume 1, (Eds Kotz, S., Johnson, N.L.), Springer-Verlag. ISBN 0-387-94037-5 (followed by reprinting of the paper).

- Quenouille MH (1949). "Approximate tests of correlation in time-series". Journal of the Royal Statistical Society, Series B. 11 (1): 68–84. doi:10.1111/j.2517-6161.1949.tb00023.x.

- Tukey JW. "Bias and confidence in not-quite large samples". Annals of Mathematical Statistics. 29: 614.

- Jaeckel L (1972) The infinitesimal jackknife. Memorandum MM72-1215-11, Bell Lab

- Bickel PJ, Freedman DA (1981). "Some asymptotic theory for the bootstrap". The Annals of Statistics. 9 (6): 1196–1217. doi:10.1214/aos/1176345637.

- Singh K (1981). "On the asymptotic accuracy of Efron's bootstrap". The Annals of Statistics. 9 (6): 1187–1195. doi:10.1214/aos/1176345636. JSTOR 2240409.

- Rubin DB (1981). "The Bayesian bootstrap". The Annals of Statistics. 9: 130–134. doi:10.1214/aos/1176345338.

- Efron, B. (1987). "Better Bootstrap Confidence Intervals". Journal of the American Statistical Association. 82 (397): 171–185. doi:10.2307/2289144. JSTOR 2289144.

- DiCiccio T, Efron B (1992). "More accurate confidence intervals in exponential families". Biometrika. 79 (2): 231–245. doi:10.2307/2336835. ISSN 0006-3444. JSTOR 2336835. OCLC 5545447518. Retrieved 2024-01-31.

- Good, P. (2006) Resampling Methods. 3rd Ed. Birkhauser.

- "21 Bootstrapping Regression Models" (PDF). Archived (PDF) from the original on 2015-07-24.

- DiCiccio TJ, Efron B (1996). "Bootstrap confidence intervals (with Discussion)". Statistical Science. 11 (3): 189–228. doi:10.1214/ss/1032280214.

- Hinkley D (1994). "[Bootstrap: More than a Stab in the Dark?]: Comment". Statistical Science. 9 (3): 400–403. doi:10.1214/ss/1177010387. ISSN 0883-4237.

- Goodhue DL, Lewis W, Thompson W (2012). "Does PLS have advantages for small sample size or non-normal data?". MIS Quarterly. 36 (3): 981–1001. doi:10.2307/41703490. JSTOR 41703490. Appendix.

- Efron, B., Rogosa, D., & Tibshirani, R. (2004). Resampling methods of estimation. In N.J. Smelser, & P.B. Baltes (Eds.). International Encyclopedia of the Social & Behavioral Sciences (pp. 13216–13220). New York, NY: Elsevier.

- Adèr, H. J., Mellenbergh G. J., & Hand, D. J. (2008). Advising on research methods: A consultant's companion. Huizen, The Netherlands: Johannes van Kessel Publishing. ISBN 978-90-79418-01-5.

- Athreya KB (1987). "Bootstrap of the mean in the infinite variance case". Annals of Statistics. 15 (2): 724–731. doi:10.1214/aos/1176350371.

- "How many different bootstrap samples are there? Statweb.stanford.edu". Archived from the original on 2019-09-14. Retrieved 2019-12-09.

- Rubin, D. B. (1981). "The Bayesian bootstrap". Annals of Statistics, 9, 130.

- Wang, Suojin (1995). "Optimizing the smoothed bootstrap". Ann. Inst. Statist. Math. 47: 65–80. doi:10.1007/BF00773412. S2CID 122041565.

- Dekking, Frederik Michel; Kraaikamp, Cornelis; Lopuhaä, Hendrik Paul; Meester, Ludolf Erwin (2005). A modern introduction to probability and statistics : understanding why and how. London: Springer. ISBN 978-1-85233-896-1. OCLC 262680588.

- Kirk, Paul (2009). "Gaussian process regression bootstrapping: exploring the effects of uncertainty in time course data". Bioinformatics. 25 (10): 1300–1306. doi:10.1093/bioinformatics/btp139. PMC 2677737. PMID 19289448.

- Wu, C.F.J. (1986). "Jackknife, bootstrap and other resampling methods in regression analysis (with discussions)" (PDF). Annals of Statistics. 14: 1261–1350. doi:10.1214/aos/1176350142.

- Mammen, E. (Mar 1993). "Bootstrap and wild bootstrap for high dimensional linear models". Annals of Statistics. 21 (1): 255–285. doi:10.1214/aos/1176349025.

- Künsch, H. R. (1989). "The Jackknife and the Bootstrap for General Stationary Observations". Annals of Statistics. 17 (3): 1217–1241. doi:10.1214/aos/1176347265.

- Politis, D. N.; Romano, J. P. (1994). "The Stationary Bootstrap". Journal of the American Statistical Association. 89 (428): 1303–1313. doi:10.1080/01621459.1994.10476870. hdl:10983/25607.

- Vinod, HD (2006). "Maximum entropy ensembles for time series inference in economics". Journal of Asian Economics. 17 (6): 955–978. doi:10.1016/j.asieco.2006.09.001.

- Vinod, Hrishikesh; López-de-Lacalle, Javier (2009). "Maximum entropy bootstrap for time series: The meboot R package". Journal of Statistical Software. 29 (5): 1–19. doi:10.18637/jss.v029.i05.

- Cameron, A. C.; Gelbach, J. B.; Miller, D. L. (2008). "Bootstrap-based improvements for inference with clustered errors" (PDF). Review of Economics and Statistics. 90 (3): 414–427. doi:10.1162/rest.90.3.414.

- Hanley JA, MacGibbon B (2006). "Creating non-parametric bootstrap samples using Poisson frequencies". Computer Methods and Programs in Biomedicine. 83 (1): 57–62. doi:10.1016/j.cmpb.2006.04.006. PMID 16730851.

- Chamandy N, Muralidharan O, Najmi A, Naidu S (2012). "Estimating Uncertainty for Massive Data Streams". Retrieved 2024-08-14.

- Babu, G. Jogesh; Pathak, P. K; Rao, C. R. (1999). "Second-order correctness of the Poisson bootstrap". The Annals of Statistics. 27 (5): 1666–1683. doi:10.1214/aos/1017939146.

- Shoemaker, Owen J.; Pathak, P. K. (2001). "The sequential bootstrap: a comparison with regular bootstrap". Communications in Statistics - Theory and Methods. 30 (8–9): 1661–1674. doi:10.1081/STA-100105691.

- Jiménez-Gamero, María Dolores; Muñoz-García, Joaquín; Pino-Mejías, Rafael (2004). "Reduced bootstrap for the median". Statistica Sinica. 14 (4): 1179–1198. JSTOR 24307226.

- Kleiner, A; Talwalkar, A; Sarkar, P; Jordan, M. I. (2014). "A scalable bootstrap for massive data". Journal of the Royal Statistical Society, Series B (Statistical Methodology). 76 (4): 795–816. arXiv:1112.5016. doi:10.1111/rssb.12050. ISSN 1369-7412. S2CID 3064206.

- Davison, A. C.; Hinkley, D. V. (1997). Bootstrap methods and their application. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press. ISBN 0-521-57391-2.

- Hall P (1988). "Theoretical comparison of bootstrap confidence intervals". The Annals of Statistics. 16 (3): 927–953. doi:10.1214/aos/1176350933. JSTOR 2241604.

- Hesterberg, Tim C (2014). "What Teachers Should Know about the Bootstrap: Resampling in the Undergraduate Statistics Curriculum". arXiv:1411.5279.

- Efron, B. (1982). The jackknife, the bootstrap, and other resampling plans. Vol. 38. Society of Industrial and Applied Mathematics CBMS-NSF Monographs. ISBN 0-89871-179-7.

- Scheiner, S. (1998). Design and Analysis of Ecological Experiments. CRC Press. ISBN 0412035618. Ch13, p300

- Rice, John. Mathematical Statistics and Data Analysis (2 ed.). p. 272. "Although this direct equation of quantiles of the bootstrap sampling distribution with confidence limits may seem initially appealing, it’s rationale is somewhat obscure."

- Data from examples in Bayesian Data Analysis

- Chihara, Laura; Hesterberg, Tim (3 August 2018). Mathematical Statistics with Resampling and R (2nd ed.). John Wiley & Sons, Inc. doi:10.1002/9781119505969. ISBN 9781119416548. S2CID 60138121.

- Voinov, Vassily; Nikulin, Mikhail (1993). Unbiased estimators and their applications. Vol. 1: Univariate case. Dordrecht: Kluwer Academic Publishers. ISBN 0-7923-2382-3.

- Young, G. A. (July 1990). "Alternative Smoothed Bootstraps". Journal of the Royal Statistical Society, Series B (Methodological). 52 (3): 477–484. doi:10.1111/j.2517-6161.1990.tb01801.x. ISSN 0035-9246.

- van der Vaart AW, Wellner JA (1996). Weak Convergence and Empirical Processes With Applications to Statistics. New York: Springer Science+Business Media. ISBN 978-1-4757-2547-6.

- Kosorok MR (2008). Introduction to Empirical Processes and Semiparametric Inference. New York: Springer Science+Business Media. ISBN 978-0-387-74977-8.

- van der Vaart AW (1998). Asymptotic Statistics. Cambridge Series in Statistical and Probabilistic Mathematics. New York: Cambridge University Press. p. 329. ISBN 978-0-521-78450-4.

- Mammen E (1992). When Does Bootstrap Work?: Asymptotic Results and Simulations. Lecture Notes in Statistics. Vol. 57. New York: Springer-Verlag. ISBN 978-0-387-97867-3.

- Gregory, Karl (29 Dec 2023). "Some results based on the Lindeberg central limit theorem" (PDF). Retrieved 29 Dec 2023.

Further reading

- Diaconis P, Efron B (May 1983). "Computer-intensive methods in statistics" (PDF). Scientific American. 248 (5): 116–130. Bibcode:1983SciAm.248e.116D. doi:10.1038/scientificamerican0583-116. Archived from the original (PDF) on 2016-03-13. Retrieved 2016-01-19. popular-science

- Efron B (1981). "Nonparametric estimates of standard error: The jackknife, the bootstrap and other methods". Biometrika. 68 (3): 589–599. doi:10.1093/biomet/68.3.589. JSTOR 2335441.

- Hesterberg T, Moore DS, Monaghan S, Clipson A, Epstein R (2005). "Bootstrap methods and permutation tests" (PDF). In David S. Moore, George McCabe (eds.). Introduction to the Practice of Statistics. Archived from the original (PDF) on 2006-02-15. Retrieved 2007-03-23.

- Efron B (1979). "Bootstrap methods: Another look at the jackknife". The Annals of Statistics. 7: 1–26. doi:10.1214/aos/1176344552.

- Efron B (1982). The Jackknife, the Bootstrap, and Other Resampling Plans. Society of Industrial and Applied Mathematics CBMS-NSF Monographs. Vol. 38. Philadelphia, US: Society for Industrial and Applied Mathematics.

- Efron B, Tibshirani RJ (1993). An Introduction to the Bootstrap. Monographs on Statistics and Applied Probability. Vol. 57. Boca Raton, US: Chapman & Hall.

- Davison AC, Hinkley DV (1997). Bootstrap Methods and their Application. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge: Cambridge University Press. ISBN 9780511802843.

- Mooney CZ, Duval RD (1993). Bootstrapping: A Nonparametric Approach to Statistical Inference. Sage University Paper Series on Quantitative Applications in the Social Sciences. Vol. 07–095. Newbury Park, US: Sage.

- Wright D, London K, Field AP (2011). "Using bootstrap estimation and the plug-in principle for clinical psychology data". Journal of Experimental Psychopathology. 2 (2): 252–270. doi:10.5127/jep.013611.

- Gong G (1986). "Cross-validation, the jackknife, and the bootstrap: Excess error estimation in forward logistic regression". Journal of the American Statistical Association. 81 (393): 108–113. doi:10.1080/01621459.1986.10478245.

- Other names that Efron's colleagues suggested for the "bootstrap" method were: Swiss Army Knife, Meat Axe, Swan-Dive, Jack-Rabbit, and Shotgun.
