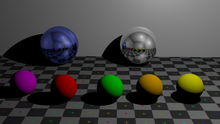

Computer-generated imagery (CGI) is a specific technology or application of computer graphics for creating or improving images in art, printed media, simulators, videos and video games. These images are either static (i.e. still images) or dynamic (i.e. moving images). CGI refers both to 2D computer graphics and (more frequently) to 3D computer graphics used to design characters, virtual worlds, or scenes and special effects (in films, television programs, commercials, etc.). The application of CGI to creating or improving animations is called computer animation, or CGI animation.

History


The first feature film to use CGI, and to combine live-action footage with CGI, was Vertigo (1958), which used abstract computer graphics by John Whitney in its opening credits. The first feature film to use CGI together with live action within the storyline itself was the 1973 film Westworld. Other early films that incorporated CGI include Star Wars: Episode IV (1977), Tron (1982), Star Trek II: The Wrath of Khan (1982), Golgo 13: The Professional (1983), The Last Starfighter (1984), Young Sherlock Holmes (1985), The Abyss (1989), Terminator 2: Judgment Day (1991), Jurassic Park (1993) and Toy Story (1995). The first music video to use CGI was Will Powers' Adventures in Success (1983).

Before CGI became prevalent in film, virtual reality, personal computing and gaming, one of its early practical applications was aviation and military training, namely the flight simulator. Visual systems developed for flight simulators were also an important precursor to today's three-dimensional computer graphics and CGI systems, because the object of flight simulation was to reproduce on the ground the behavior of an aircraft in flight, and much of that reproduction depended on believable visual synthesis that mimicked reality. The Link Digital Image Generator (DIG) by the Singer Company (Singer-Link) was considered one of the world's first-generation CGI systems. It was a real-time, 3D-capable, day/dusk/night system used in simulators for NASA's Space Shuttle, the F-111, the Black Hawk and the B-52. The DIG's architecture was designed to provide a visual system that realistically corresponded with the pilot's view. The basic architecture of the DIG and its subsequent improvements consisted of a scene manager followed by a geometric processor and a video processor feeding the display, with the goal of a visual system that rendered realistic texture, shading and translucency, free of aliasing.

Because flight simulation had to pair visual synthesis with military-level training requirements, the CGI technologies it used were often years ahead of what was available in commercial computing or even in high-budget film. Early CGI systems could depict only objects made of planar polygons. Advances in algorithms and electronics in flight-simulator visual systems and CGI in the 1970s and 1980s influenced many technologies still used in modern CGI, adding the ability to superimpose texture over surfaces and to transition smoothly from one level of detail to the next.

The evolution of CGI led to the emergence of virtual cinematography in the 1990s, in which the simulated camera's view is not constrained by the laws of physics. The availability of CGI software and increased computer speeds have allowed individual artists and small companies to produce professional-grade films, games, and fine art on their home computers.

Static images and landscapes

See also: Fractal landscape and Scenery generator

Computer-generated imagery includes not only animated images; natural-looking landscapes (such as fractal landscapes) are also generated by computer algorithms. A simple way to generate fractal surfaces is to use an extension of the triangular mesh method, relying on the construction of a special case of a de Rham curve, e.g., midpoint displacement. For instance, the algorithm may start with a large triangle, then recursively zoom in by dividing it into four smaller Sierpinski triangles, then interpolate the height of each point from its nearest neighbors. A Brownian surface can be created not only by adding noise as new nodes are created but also by adding further noise at multiple levels of the mesh. Thus a topographical map with varying heights can be created using relatively straightforward fractal algorithms. Typical easy-to-program fractals used in CGI are the plasma fractal and the more dramatic fault fractal.
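The midpoint-displacement idea can be sketched in one dimension, producing a height profile (a terrain cross-section) rather than a full triangulated surface. Each pass interpolates new points from their two nearest neighbours and then adds random noise whose amplitude shrinks by a `roughness` factor at every finer level, so noise is added at multiple levels of the mesh. The function name, the `roughness` and `initial_scale` parameters, and the use of uniform noise are illustrative assumptions; the 2D triangle and square-grid (diamond-square) variants follow the same pattern.

```python
import random
from typing import List, Optional


def midpoint_displacement(levels: int, roughness: float = 0.5,
                          initial_scale: float = 1.0,
                          seed: Optional[int] = None) -> List[float]:
    """Return a 1D fractal height profile with 2**levels + 1 samples.

    roughness: assumed factor by which the noise amplitude shrinks at each
    finer level (values near 1 give jagged terrain, values near 0 smooth).
    """
    rng = random.Random(seed)
    n = 2 ** levels
    heights = [0.0] * (n + 1)
    # Random heights for the two end points of the initial segment.
    heights[0] = rng.uniform(-initial_scale, initial_scale)
    heights[n] = rng.uniform(-initial_scale, initial_scale)
    step, scale = n, initial_scale
    while step > 1:
        half = step // 2
        for i in range(half, n, step):
            # Interpolate from the two nearest neighbours at the current level...
            midpoint = (heights[i - half] + heights[i + half]) / 2.0
            # ...then add fresh noise, so every level of the mesh gets its own detail.
            heights[i] = midpoint + rng.uniform(-scale, scale)
        step, scale = half, scale * roughness
    return heights
```

Passing a fixed `seed` makes the terrain reproducible, which is convenient when the same landscape must be regenerated on demand instead of stored.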

Many specialized techniques have been researched and developed to produce highly focused computer-generated effects, e.g., specific models of the chemical weathering of stones that simulate erosion and give a stone-based surface an "aged appearance".

Architectural scenes

Modern architects use services from computer graphics firms to create three-dimensional models for both customers and builders. These computer-generated models can be more accurate than traditional drawings. Architectural animation (which provides animated movies of buildings, rather than interactive images) can also be used to show how a building may relate to its environment and surrounding buildings. Designing architectural spaces without paper-and-pencil tools is now widely accepted practice, supported by a number of computer-assisted architectural design systems.

Architectural modeling tools allow an architect to visualize a space and perform "walk-throughs" interactively, thus providing "interactive environments" at both the urban and building levels. Specific applications in architecture include not only the specification of building structures (such as walls and windows) and walk-throughs, but also the effects of light, such as how sunlight will affect a specific design at different times of day.

Architectural modeling tools are increasingly internet-based. However, the quality of internet-based systems still lags behind that of sophisticated in-house modeling systems.

In some applications, computer-generated images are used to "reverse engineer" historical buildings. For instance, a computer-generated reconstruction of the monastery at Georgenthal in Germany was derived from the monastery's ruins, yet gives the viewer a "look and feel" of how the building would have appeared in its day.

Anatomical models

See also: Medical imaging, Visible Human Project, Google Body, and Living Human Project

Computer-generated models used in skeletal animation are not always anatomically correct. However, organizations such as the Scientific Computing and Imaging Institute have developed anatomically correct computer-based models. Computer-generated anatomical models can be used for both instructional and operational purposes. To date, a large body of artist-produced medical images, such as those by Frank H. Netter (e.g. cardiac images), continues to be used by medical students. However, a number of online anatomical models are becoming available.

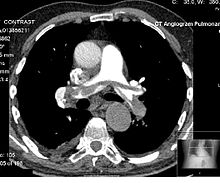

A single patient X-ray is not a computer-generated image, even if digitized. However, in applications involving CT scans, a three-dimensional model is automatically produced from many single-slice X-rays, producing a "computer-generated image". Applications involving magnetic resonance imaging likewise combine a number of "snapshots" (in this case acquired with magnetic fields and radio-frequency pulses) to produce a composite internal image.
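In CT, each cross-sectional slice is itself computed from many X-ray projections (for example by filtered back-projection); once the slices exist, building a 3D model amounts to stacking them into a voxel volume and segmenting the tissue of interest. The plain-Python sketch below covers only that last stage. The function names, the intensity threshold and the voxel size are hypothetical, and clinical software typically goes further, for example extracting a surface mesh with marching cubes.

```python
from typing import List, Tuple

Slice = List[List[float]]  # one reconstructed cross-section, indexed [y][x]


def stack_slices(slices: List[Slice]) -> List[Slice]:
    """Stack equally spaced 2D slices into a volume indexed [z][y][x]."""
    if not slices:
        raise ValueError("need at least one slice")
    rows, cols = len(slices[0]), len(slices[0][0])
    for s in slices:
        if len(s) != rows or any(len(r) != cols for r in s):
            raise ValueError("all slices must share the same dimensions")
    return [[list(row) for row in s] for s in slices]


def segment(volume: List[Slice], threshold: float) -> List[List[List[bool]]]:
    """Mark voxels at or above `threshold` (e.g. dense bone) as part of the model."""
    return [[[v >= threshold for v in row] for row in s] for s in volume]


def model_volume_mm3(mask: List[List[List[bool]]],
                     voxel_size_mm: Tuple[float, float, float]) -> float:
    """Estimate the segmented object's volume as voxel count times voxel volume."""
    dx, dy, dz = voxel_size_mm
    count = sum(v for s in mask for row in s for v in row)
    return count * dx * dy * dz
```

A single global threshold is the simplest possible segmentation; it works reasonably for high-contrast structures such as bone but not for soft tissue with overlapping intensities.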

In modern medical applications, patient-specific models are constructed for computer-assisted surgery. For instance, in total knee replacement, a detailed patient-specific model can be used to plan the surgery carefully. These three-dimensional models are usually extracted from multiple CT scans of the relevant parts of the patient's own anatomy. Such models can also be used to plan aortic valve implantations, a common procedure for treating heart disease. Because the shape, diameter, and position of the coronary openings can vary greatly from patient to patient, extracting from CT scans a model that closely resembles the patient's valve anatomy can be highly beneficial in planning the procedure.

Cloth and skin images

Models of cloth generally fall into three groups:

- The geometric-mechanical structure at yarn crossings

- The mechanics of continuous elastic sheets

- The geometric macroscopic features of cloth.

To date, making the clothing of a digital character automatically fold in a natural way remains a challenge for many animators.

In addition to their use in film, advertising and other modes of public display, computer generated images of clothing are now routinely used by top fashion design firms.

The challenge in rendering human skin images involves three levels of realism:

- Photo realism in resembling real skin at the static level

- Physical realism in resembling its movements

- Functional realism in resembling its response to actions.

The finest visible features, such as fine wrinkles and skin pores, are about 100 μm (0.1 mm) in size. Skin can be modeled as a 7-dimensional bidirectional texture function (BTF) or as a collection of bidirectional scattering distribution functions (BSDFs) over the target's surfaces.

Interactive simulation and visualization

Main article: Interactive visualization

Interactive visualization is the rendering of data that may vary dynamically, allowing a user to view the data from multiple perspectives. Application areas vary widely, from visualizing flow patterns in fluid dynamics to specific computer-aided design applications. The rendered data may correspond to specific visual scenes that change as the user interacts with the system; for example, simulators such as flight simulators make extensive use of CGI techniques to represent the world.

At the abstract level, an interactive visualization process involves a "data pipeline" in which the raw data is managed and filtered to a form that makes it suitable for rendering. This is often called the "visualization data". The visualization data is then mapped to a "visualization representation" that can be fed to a rendering system. This is usually called a "renderable representation". This representation is then rendered as a displayable image. As the user interacts with the system (e.g. by using joystick controls to change their position within the virtual world) the raw data is fed through the pipeline to create a new rendered image, often making real-time computational efficiency a key consideration in such applications.
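The pipeline can be sketched as one small function per stage. In this Python sketch the raw data is a hypothetical list of `(x, y, value)` samples with gaps, the "renderable representation" is a list of screen positions with text glyphs, and the "displayable image" is a few rows of text; a real system would hand geometry to a GPU instead. All names and the glyph palette are illustrative assumptions. Moving the viewpoint simply re-runs every stage.

```python
SHADES = " .:-=+*#%@"  # glyphs from empty to dense; an arbitrary choice


def filter_data(raw, vmin=0.0, vmax=1.0):
    """Raw data -> visualization data: drop missing samples, clamp and normalise to 0..1."""
    span = vmax - vmin
    return [
        (x, y, (min(max(v, vmin), vmax) - vmin) / span)
        for x, y, v in raw
        if v is not None
    ]


def map_to_renderable(vis_data, view_x, view_y):
    """Visualization data -> renderable representation: screen positions plus a glyph."""
    last = len(SHADES) - 1
    return [
        (x - view_x, y - view_y, SHADES[min(int(v * len(SHADES)), last)])
        for x, y, v in vis_data
    ]


def render(renderable, width, height):
    """Renderable representation -> displayable image (rows of text)."""
    frame = [[" "] * width for _ in range(height)]
    for sx, sy, glyph in renderable:
        if 0 <= sx < width and 0 <= sy < height:  # clip to the viewport
            frame[sy][sx] = glyph
    return ["".join(row) for row in frame]


def run_pipeline(raw, view_x, view_y, width=4, height=2):
    """Re-run every stage; called again whenever the user moves the viewpoint."""
    vis = filter_data(raw)
    return render(map_to_renderable(vis, view_x, view_y), width, height)
```

Because each user interaction triggers the whole chain, real systems cache the output of stages whose inputs did not change (here, `filter_data` does not depend on the viewpoint) to keep frame times low.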

Computer animation

Main article: Computer animation

See also: History of computer animation

Whereas computer-generated images of landscapes may be static, the term computer animation applies only to dynamic images that resemble a movie. In general, it refers to dynamic images that do not allow user interaction, while the term virtual world is used for interactive animated environments.

Computer animation is essentially a digital successor to the art of stop-motion animation of 3D models and frame-by-frame animation of 2D illustrations. Computer-generated animation is more controllable than more physically based processes, such as constructing miniatures for effects shots or hiring extras for crowd scenes, and it allows the creation of images that would not be feasible with any other technology. It can also allow a single graphic artist to produce such content without actors, expensive set pieces, or props.

To create the illusion of movement, an image is displayed on the computer screen and repeatedly replaced by a new image that is similar to the previous one but advanced slightly in time (usually at a rate of 24 or 30 frames per second). This is the same technique by which television and motion pictures create the illusion of movement.
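At 24 frames per second each image stays on screen for 1/24 s, about 41.7 ms. A minimal Python sketch of producing those successive images assumes the animator has set two keyframes and the computer fills in the in-between positions by linear interpolation; this is one common approach among several (production tools usually interpolate with splines), and the function names are illustrative.

```python
from typing import List, Tuple

Key = Tuple[float, Tuple[float, float]]  # (time in seconds, (x, y) position)


def frame_times(fps: int, duration_s: float) -> List[float]:
    """Display time (in seconds) of each frame in a clip of the given length."""
    count = round(fps * duration_s)
    return [i / fps for i in range(count)]


def interpolate(key_a: Key, key_b: Key, t: float) -> Tuple[float, float]:
    """Linearly interpolate a 2D position between two keyframes.

    t is clamped to the interval between the two keyframe times.
    """
    (ta, (xa, ya)), (tb, (xb, yb)) = key_a, key_b
    u = min(1.0, max(0.0, (t - ta) / (tb - ta)))
    return (xa + (xb - xa) * u, ya + (yb - ya) * u)


# One second at 24 frames/second: each image shows the object slightly
# further along its path than the previous image did.
frames = [interpolate((0.0, (0.0, 0.0)), (1.0, (240.0, 0.0)), t)
          for t in frame_times(24, 1.0)]
```

Here the object moves 240 units in one second, so consecutive frames differ by about 10 units: small enough steps that the eye reads them as continuous motion.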

Text-to-image models

This section is an excerpt from Text-to-image model.

An image of "an astronaut riding a horse, by Hiroshige", generated by Stable Diffusion 3.5; Stable Diffusion is a large-scale text-to-image model first released in 2022.

A text-to-image model is a machine learning model that takes a natural-language description as input and produces an image matching that description.

Text-to-image models began to be developed in the mid-2010s, at the beginning of the AI boom, as a result of advances in deep neural networks. In 2022, the output of state-of-the-art text-to-image models, such as OpenAI's DALL-E 2, Google Brain's Imagen, Stability AI's Stable Diffusion, and Midjourney, came to be regarded as approaching the quality of real photographs and human-drawn art.

Text-to-image models are generally latent diffusion models, which combine a language model, which transforms the input text into a latent representation, and a generative image model, which produces an image conditioned on that representation. The most effective models have generally been trained on massive amounts of image and text data scraped from the web.

Virtual worlds

Main article: Virtual world

A virtual world is an agent-based, simulated environment that allows users to interact with artificially animated characters (e.g. software agents) or with other physical users through avatars. Virtual worlds are intended for their users to inhabit and interact in, and the term today has become largely synonymous with interactive 3D virtual environments in which users take the form of avatars visible to others graphically. These avatars are usually depicted as textual, two-dimensional, or three-dimensional graphical representations, although other forms are possible (auditory and touch sensations, for example). Some, but not all, virtual worlds allow multiple users.

In courtrooms

Computer-generated imagery has been used in courtrooms, primarily since the early 2000s, to help judges or juries better visualize the sequence of events, evidence or hypotheses. However, some experts have argued that such exhibits are prejudicial: a 1997 study showed that people are poor intuitive physicists and are easily influenced by computer-generated images. It is therefore important that jurors and other legal decision-makers be made aware that such exhibits merely represent one potential sequence of events.

Broadcast and live events

Weather visualizations were the first application of CGI in television. One of the first companies to offer computer systems for generating weather graphics was ColorGraphics Weather Systems in 1979 with the "LiveLine", based around an Apple II computer, with later models from ColorGraphics using Cromemco computers fitted with their Dazzler video graphics card.

It has now become common in weather casting to display full motion video of images captured in real-time from multiple cameras and other imaging devices. Coupled with 3D graphics symbols and mapped to a common virtual geospatial model, these animated visualizations constitute the first true application of CGI to TV.

CGI has become common in sports telecasting. Sports and entertainment venues are provided with see-through and overlay content through tracked camera feeds for enhanced viewing by the audience. Examples include the yellow "first down" line seen in television broadcasts of American football games, which shows the line the offensive team must cross to earn a first down. CGI is also used in football and other sporting events to overlay commercial advertisements onto the view of the playing area. Sections of rugby fields and cricket pitches also display sponsored images. Swimming telecasts often add a line across the lanes to indicate the position of the current record holder as a race proceeds, allowing viewers to compare the current race with the best performance. Other examples include hockey puck tracking and annotations of racing-car performance and snooker ball trajectories. CGI on TV that is correctly aligned with the real world is sometimes referred to as augmented reality.
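Overlays such as the first-down line stay aligned with the field because each point of the virtual line is projected through a model of the tracked broadcast camera. The Python sketch below assumes a simplified pinhole camera that only pans, with zoom represented by a focal length in pixels; real systems also track tilt, zoom changes and lens distortion, and keep players in front of the graphic. The function name and all numbers are hypothetical.

```python
import math


def project_to_image(point, cam_pos, pan_rad, focal_px, cx, cy):
    """Project a world point (x, y, z) to pixel coordinates (u, v).

    Assumed camera model: pinhole, y axis up, pan (rotation about the vertical
    axis) only, no tilt, roll or lens distortion. pan_rad = 0 means the camera
    looks along +z. Returns None for points behind the camera.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    # Rotate the offset into camera coordinates (undo the camera's pan).
    xc = dx * math.cos(pan_rad) - dz * math.sin(pan_rad)
    zc = dx * math.sin(pan_rad) + dz * math.cos(pan_rad)
    if zc <= 0:
        return None
    u = cx + focal_px * xc / zc
    v = cy - focal_px * dy / zc  # image rows grow downward
    return (u, v)


# Endpoints of a line across the field at ground level (y = 0), 50 m in front
# of a camera mounted 10 m up, for a 1920x1080 image centred at (960, 540).
left = project_to_image((-5.0, 0.0, 50.0), (0.0, 10.0, 0.0), 0.0, 1000.0, 960.0, 540.0)
right = project_to_image((5.0, 0.0, 50.0), (0.0, 10.0, 0.0), 0.0, 1000.0, 960.0, 540.0)
```

Recomputing the projection for every video frame from the camera's tracked pan and zoom is what keeps the overlay fixed to the field as the camera moves.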

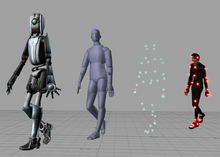

Motion capture

Main article: Motion capture

Computer-generated imagery is often used in conjunction with motion capture to compensate for the shortcomings of CGI and animation. The practical application of CGI is limited by how realistic it can look: unrealistic or poorly executed CGI can produce the uncanny valley effect, which refers to the human ability to recognize things that look eerily like humans but are slightly off. This is a weakness of conventional CGI, which, because of the complex anatomy of the human body, often fails to replicate it convincingly. Artists can use motion capture to record a human performing an action and then reproduce that movement with CGI so that it looks natural.
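Captured performances are commonly stored as joint rotations over time, which are then applied to a digital character's skeleton. A minimal Python sketch of that last step assumes a planar chain of bones (for example shoulder, elbow, wrist) whose captured angles are relative to the parent bone, and computes where each joint ends up by forward kinematics. The joint layout, bone lengths and angles are hypothetical; production rigs work in 3D with rotation matrices or quaternions.

```python
import math
from typing import List, Sequence, Tuple


def forward_kinematics_2d(joint_angles: Sequence[float],
                          bone_lengths: Sequence[float],
                          root: Tuple[float, float] = (0.0, 0.0)
                          ) -> List[Tuple[float, float]]:
    """Return the position of every joint in a planar bone chain.

    joint_angles[i] is the captured rotation of bone i relative to its parent
    (radians); bone_lengths[i] is that bone's length in the character's units.
    """
    if len(joint_angles) != len(bone_lengths):
        raise ValueError("one angle per bone is required")
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for angle, length in zip(joint_angles, bone_lengths):
        heading += angle  # rotations accumulate down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


# One captured frame: upper arm raised 90 degrees, forearm bent back 90 degrees.
frame = forward_kinematics_2d([math.pi / 2, -math.pi / 2], [0.30, 0.25])
```

Because the captured data are angles rather than absolute positions, the same performance can drive characters with different bone lengths, which is one reason rotations are the usual storage format.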

The lack of anatomically correct digital models contributes to the need for motion capture alongside CGI. Because CGI typically reflects only the outside, or skin, of the object being rendered, it fails to capture the very fine interactions between interlocking muscle groups used in fine motor skills such as speaking. The constant motion of the face as it forms sounds with the lips and tongue, along with the facial expressions that accompany speech, is difficult to replicate by hand. Motion capture can record the underlying movement of the facial muscles and better reproduce the visuals that go with the audio.

See also

- 3D modeling

- Cinema Research Corporation

- Cel shading

- Anime Studio

- Animation database

- List of computer-animated films

- Digital image

- Parallel rendering

- Photoshop, the industry-standard commercial digital photo editing tool.

- GIMP, a FOSS digital photo editing application.

- Poser, DIY CGI software optimized for soft models

- Ray tracing (graphics)

- Real-time computer graphics

- Shader

- Virtual human

- Virtual studio

- Virtual Physiological Human

References

Citations

- Ozturk, Selen (March 15, 2023). "Vicious Circle: John Whitney and the Military Origins of Early CGI". Bright Lights Film Journal. Retrieved May 11, 2023.

- "14 groundbreaking movies that took special effects to new levels". Insider.com.

- Halverson, Dave (December 2005). "Anime Reviews: The Professional Golgo 13". Play. No. 48. United States of America. p. 92.

- "Last Starfighter sequel is super close to happening, says Gary Whitta". Archived from the original on 2021-08-29. Retrieved 2021-08-29.

- Jankel, A.; Morton, R. (Nov 15, 1984). Creative Computer Graphics. Cambridge University Press. p. 134. ISBN 0-521-26251-8.

- Rolfe, J. M.; Staples, K. J. (May 27, 1988). Flight Simulation (Cambridge Aerospace Series, Series Number 1). New York: Cambridge University Press. ISBN 978-0521357517.

- Carlson, Wayne (20 June 2017). "Computer Graphics and Animation: A Retrospective Overview". Ohio State University. p. 13.2.

- Suminski, Leonard; Hulin, Paul (September 26, 1980). "Computer Generated Imagery Current Technology". U.S. Army Project Manager for Training Devices: 14–18.

- Yan, Johnson K.; Florence, Judit K. "MODULAR DIGITAL IMAGE GENERATOR". United States Patent Office.

- Yan, Johnson (August 1985). "Advances in Computer-Generated Imagery for Flight Simulation". IEEE. 5 (8): 37–51. doi:10.1109/MCG.1985.276213. S2CID 15309937.

- Peitgen 2004, pp. 462–466.

- Game programming gems 2 by Mark A. DeLoura 2001 ISBN 1-58450-054-9 page 240

- Digital modeling of material appearance by Julie Dorsey, Holly Rushmeier, François X. Sillion 2007 ISBN 0-12-221181-2 page 217

- Sondermann 2008, pp. 8–15.

- Interactive environments with open-source software: 3D walkthroughs by Wolfgang Höhl, 2008, ISBN 3-211-79169-8, pp. 24–29.

- "Light: The art of exposure". GarageFarm. 2020-11-12. Retrieved 2020-11-12.

- Advances in Computer and Information Sciences and Engineering by Tarek Sobh 2008 ISBN 1-4020-8740-3 pages 136-139

- Encyclopedia of Multimedia Technology and Networking, Volume 1 by Margherita Pagani 2005 ISBN 1-59140-561-0 page 1027

- Interactive storytelling: First Joint International Conference by Ulrike Spierling, Nicolas Szilas 2008 ISBN 3-540-89424-1 pages 114-118

- Total Knee Arthroplasty by Johan Bellemans, Michael D. Ries, Jan M.K. Victor 2005 ISBN 3-540-20242-0 pages 241-245

- I. Waechter et al. Patient Specific Models for Minimally Invasive Aortic Valve Implantation in Medical Image Computing and Computer-Assisted Intervention -- MICCAI 2010 edited by Tianzi Jiang, 2010 ISBN 3-642-15704-1 pages 526-560

- Cloth modeling and animation by Donald House, David E. Breen 2000 ISBN 1-56881-090-3 page 20

- Film and photography by Ian Graham 2003 ISBN 0-237-52626-3 page 21

- Designing clothes: culture and organization of the fashion industry by Veronica Manlow 2007 ISBN 0-7658-0398-4 page 213

- Handbook of Virtual Humans by Nadia Magnenat-Thalmann and Daniel Thalmann, 2004 ISBN 0-470-02316-3 pages 353-370

- Mathematical optimization in computer graphics and vision by Luiz Velho, Paulo Cezar Pinto Carvalho 2008 ISBN 0-12-715951-7 page 177

- GPU-based interactive visualization techniques by Daniel Weiskopf 2006 ISBN 3-540-33262-6 pages 1-8

- Trends in interactive visualization by Elena van Zudilova-Seinstra, Tony Adriaansen, Robert Liere 2008 ISBN 1-84800-268-8 pages 1-7

- Vincent, James (May 24, 2022). "All these images were generated by Google's latest text-to-image AI". The Verge. Vox Media. Retrieved May 28, 2022.

- Cook, A.D. (2009). A case study of the manifestations and significance of social presence in a multi-user virtual environment. MEd Thesis.

- Biocca & Levy 1995, pp. 40–44.

- Begault 1994, p. 212.

- Computer-generated images influence trial results The Conversation, 31 October 2013

- Kassin, S. M. (1997). "Computer-animated Display and the Jury: Facilitative and Prejudicial Effects". Law and Human Behavior. 21 (3): 269–281. doi:10.1023/a:1024838715221. S2CID 145311101.

- Archived at Ghostarchive and the Wayback Machine: Arti AR highlights at SRX -- the first sports augmented reality live from a moving car!, 14 July 2021, retrieved 2021-07-14

- Azuma, Ronald; Baillot, Yohan; Behringer, Reinhold; Feiner, Steven; Julier, Simon; MacIntyre, Blair. Recent Advances in Augmented Reality Computers & Graphics, November 2001.

- Marlow, Chris. Hey, hockey puck! NHL PrePlay adds a second-screen experience to live games, digitalmediawire 27 April 2012.

- Palomäki, Jussi; Kunnari, Anton; Drosinou, Marianna; Koverola, Mika; Lehtonen, Noora; Halonen, Juho; Repo, Marko; Laakasuo, Michael (2018-11-01). "Evaluating the replicability of the uncanny valley effect". Heliyon. 4 (11): e00939. Bibcode:2018Heliy...400939P. doi:10.1016/j.heliyon.2018.e00939. ISSN 2405-8440. PMC 6260244. PMID 30519654.

- Pelachaud, Catherine; Steedman, Mark; Badler, Norman (1991-06-01). "Linguistic Issues in Facial Animation". Center for Human Modeling and Simulation (69).

Sources

- Begault, Durand R. (1994). 3-D Sound for Virtual Reality and Multimedia. AP Professional. ISBN 978-0-1208-4735-8.

- Biocca, Frank; Levy, Mark R. (1995). Communication in the Age of Virtual Reality. Lawrence Erlbaum Associates. ISBN 978-0-8058-1549-8.

- Peitgen, Heinz-Otto; Jürgens, Hartmut; Saupe, Dietmar (2004). Chaos and Fractals: New Frontiers of Science. Springer Science & Business Media. ISBN 978-0-387-20229-7.

- Sondermann, Horst (2008). Light Shadow Space: Architectural Rendering with Cinema 4D. Vienna: Springer. ISBN 978-3-211-48761-7.

External links


- A Critical History of Computer Graphics and Animation – a course page at Ohio State University that includes all the course materials and extensive supplementary materials (videos, articles, links).

- CG101: A Computer Graphics Industry Reference ISBN 073570046X Unique and personal histories of early computer graphics production, plus a comprehensive foundation of the industry for all reading levels.

- F/X Gods, by Anne Thompson, Wired, February 2005.

- "History Gets A Computer Graphics Make-Over" Tayfun King, Click, BBC World News (2004-11-19)

- NIH Visible Human Gallery
