
In vector calculus, the divergence theorem, also known as Gauss's theorem or Ostrogradsky's theorem, is a theorem relating the flux of a vector field through a closed surface to the divergence of the field in the volume enclosed.

More precisely, the divergence theorem states that the surface integral of a vector field over a closed surface, which is called the "flux" through the surface, is equal to the volume integral of the divergence over the region enclosed by the surface. Intuitively, it states that "the sum of all sources of the field in a region (with sinks regarded as negative sources) gives the net flux out of the region".

The divergence theorem is an important result for the mathematics of physics and engineering, particularly in electrostatics and fluid dynamics. In these fields, it is usually applied in three dimensions. However, it generalizes to any number of dimensions. In one dimension, it is equivalent to the fundamental theorem of calculus. In two dimensions, it is equivalent to Green's theorem.

Explanation using liquid flow

See also: Sources and sinks

Vector fields are often illustrated using the example of the velocity field of a fluid, such as a gas or liquid. A moving liquid has a velocity (a speed and a direction) at each point, which can be represented by a vector, so that the velocity of the liquid at any moment forms a vector field. Consider an imaginary closed surface S inside a body of liquid, enclosing a volume of liquid. The flux of liquid out of the volume at any time is equal to the volume rate of fluid crossing this surface, i.e., the surface integral of the velocity over the surface.

Since liquids are, to a good approximation, incompressible, the amount of liquid inside a closed volume is constant; if there are no sources or sinks inside the volume, then the flux of liquid out of S is zero. If the liquid is moving, it may flow into the volume at some points on the surface S and out of the volume at other points, but the amounts flowing in and out at any moment are equal, so the net flux of liquid out of the volume is zero.

However, if a source of liquid is inside the closed surface, such as a pipe through which liquid is introduced, the additional liquid will exert pressure on the surrounding liquid, causing an outward flow in all directions. This will cause a net outward flow through the surface S. The flux outward through S equals the volume rate of flow of fluid into S from the pipe. Similarly, if there is a sink or drain inside S, such as a pipe which drains the liquid off, the external pressure of the liquid will cause a velocity throughout the liquid directed inward toward the location of the drain. The volume rate of flow of liquid inward through the surface S equals the rate of liquid removed by the sink.

If there are multiple sources and sinks of liquid inside S, the flux through the surface can be calculated by adding up the volume rate of liquid added by the sources and subtracting the rate of liquid drained off by the sinks. The volume rate of flow of liquid through a source or sink (with the flow through a sink given a negative sign) is equal to the integral of the divergence of the velocity field over a small region around the pipe mouth, so adding up (integrating) the divergence of the velocity field throughout the volume enclosed by S gives the volume rate of flux through S. This is the divergence theorem.

The divergence theorem is employed in any conservation law which states that the total strength of all sources and sinks in a volume, that is, the volume integral of the divergence, is equal to the net flow across the volume's boundary.

Mathematical statement

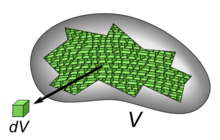

Suppose V is a subset of $\mathbb{R}^n$ (in the case of n = 3, V represents a volume in three-dimensional space) which is compact and has a piecewise smooth boundary S (also indicated with $\partial V = S$). If F is a continuously differentiable vector field defined on a neighborhood of V, then:

$$\iiint_V \left(\nabla \cdot \mathbf{F}\right) dV = \iint_S \left(\mathbf{F} \cdot \hat{\mathbf{n}}\right) dS.$$

The left side is a volume integral over the volume V, and the right side is the surface integral over the boundary of the volume V. The closed, measurable set $\partial V$ is oriented by outward-pointing normals, and $\hat{\mathbf{n}}$ is the outward-pointing unit normal at almost every point on the boundary $\partial V$. ($d\mathbf{S}$ may be used as a shorthand for $\hat{\mathbf{n}}\,dS$.) In terms of the intuitive description above, the left-hand side of the equation represents the total of the sources in the volume V, and the right-hand side represents the total flow across the boundary S.
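As a quick numerical illustration of the statement, the following Python sketch compares both sides of the theorem on the unit cube $[0,1]^3$. Several choices here are assumptions made only for the illustration and are not part of the theorem: the field $\mathbf{F} = (x^2 y,\; yz,\; x + z^2)$, the helper names, and the 3-point Gauss-Legendre rule, which is exact for polynomials of degree at most 5 in each variable.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_1d(a, b):
    """(node, weight) pairs of the 3-point rule mapped from [-1, 1] to [a, b]."""
    half, mid = (b - a) / 2, (a + b) / 2
    return [(mid + half * t, half * w) for t, w in zip(GL_NODES, GL_WEIGHTS)]


def field(x, y, z):
    # Illustrative field (assumption): F = (x^2 y, y z, x + z^2)
    return (x * x * y, y * z, x + z * z)


def divergence(x, y, z):
    # d(x^2 y)/dx + d(y z)/dy + d(x + z^2)/dz, computed by hand
    return 2 * x * y + z + 2 * z


def volume_integral(f, lo=0.0, hi=1.0):
    """Integrate the scalar function f over the cube [lo, hi]^3."""
    pts = gauss_1d(lo, hi)
    return sum(wx * wy * wz * f(x, y, z)
               for x, wx in pts for y, wy in pts for z, wz in pts)


def flux_through_cube(F, lo=0.0, hi=1.0):
    """Sum of F . n dS over the six faces of [lo, hi]^3, with n pointing outward."""
    pts = gauss_1d(lo, hi)
    total = 0.0
    for axis in range(3):                       # each face is normal to this axis
        for value, sign in ((hi, 1.0), (lo, -1.0)):
            for u, wu in pts:
                for v, wv in pts:
                    p = [u, v]
                    p.insert(axis, value)       # fix the coordinate along the normal
                    total += sign * wu * wv * F(*p)[axis]
    return total


lhs = volume_integral(divergence)   # left side: integral of div F over V
rhs = flux_through_cube(field)      # right side: outward flux through S
print(lhs, rhs)
```

Up to floating-point rounding, both sides equal 2. This matches the hand computation $\iiint (2xy + 3z)\,dV = \tfrac12 + \tfrac32$.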

Informal derivation

The divergence theorem follows from the fact that if a volume V is partitioned into separate parts, the flux out of the original volume is equal to the algebraic sum of the flux out of each component volume. This is true despite the fact that the new subvolumes have surfaces that were not part of the original volume's surface, because these surfaces are just partitions between two of the subvolumes and the flux through them just passes from one volume to the other and so cancels out when the flux out of the subvolumes is summed.

See the diagram. A closed, bounded volume V is divided into two volumes V1 and V2 by a surface S3 (green). The flux Φ(Vi) out of each component region Vi is equal to the sum of the flux through its two faces, so the sum of the flux out of the two parts is

$$\Phi(V_1) + \Phi(V_2) = \Phi_1 + \Phi_{31} + \Phi_2 + \Phi_{32},$$

where Φ1 and Φ2 are the flux out of surfaces S1 and S2, Φ31 is the flux through S3 out of volume 1, and Φ32 is the flux through S3 out of volume 2. The point is that surface S3 is part of the surface of both volumes. The "outward" direction of the normal vector is opposite for each volume, so the flux out of one through S3 is equal to the negative of the flux out of the other, $\Phi_{31} = -\Phi_{32}$, and these two fluxes cancel in the sum.

Therefore:

$$\Phi(V_1) + \Phi(V_2) = \Phi_1 + \Phi_2.$$

Since the union of surfaces S1 and S2 is S,

$$\Phi(V_1) + \Phi(V_2) = \Phi(V).$$

This principle applies to a volume divided into any number of parts, as shown in the diagram. Since the integral over each internal partition (green surfaces) appears with opposite signs in the flux of the two adjacent volumes, they cancel out, and the only contribution to the flux is the integral over the external surfaces (grey). Since the external surfaces of all the component volumes together make up the original surface,

$$\Phi(V) = \sum_{V_i \subset V} \Phi(V_i).$$
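The cancellation on internal faces can be checked numerically. The sketch below splits the unit cube at x = 1/2 into two boxes, so that the face x = 1/2 plays the role of S3. As before, the field and the helper names (`face_fluxes`, `total_flux`) are illustrative assumptions.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_1d(a, b):
    half, mid = (b - a) / 2, (a + b) / 2
    return [(mid + half * t, half * w) for t, w in zip(GL_NODES, GL_WEIGHTS)]


def field(x, y, z):
    # Illustrative field (assumption): F = (x^2 y, y z, x + z^2)
    return (x * x * y, y * z, x + z * z)


def face_fluxes(F, box):
    """Outward flux through each face of an axis-aligned box.

    box = ((x0, x1), (y0, y1), (z0, z1)); keys are (axis, "lo" or "hi").
    """
    out = {}
    for axis in range(3):
        (a0, a1), (b0, b1) = [box[k] for k in range(3) if k != axis]
        pu, pv = gauss_1d(a0, a1), gauss_1d(b0, b1)
        for side, value, sign in (("lo", box[axis][0], -1.0), ("hi", box[axis][1], 1.0)):
            s = 0.0
            for u, wu in pu:
                for v, wv in pv:
                    p = [u, v]
                    p.insert(axis, value)
                    s += sign * wu * wv * F(*p)[axis]
            out[(axis, side)] = s
    return out


def total_flux(F, box):
    return sum(face_fluxes(F, box).values())


V = ((0.0, 1.0), (0.0, 1.0), (0.0, 1.0))
V1 = ((0.0, 0.5), (0.0, 1.0), (0.0, 1.0))   # x <= 1/2
V2 = ((0.5, 1.0), (0.0, 1.0), (0.0, 1.0))   # x >= 1/2

# The shared face x = 1/2 is seen by V1 and V2 with opposite outward normals.
phi31 = face_fluxes(field, V1)[(0, "hi")]
phi32 = face_fluxes(field, V2)[(0, "lo")]
print(phi31, phi32)                                    # equal and opposite
print(total_flux(field, V1) + total_flux(field, V2), total_flux(field, V))
```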

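The flux Φ out of each volume is the surface integral of the vector field F(x) over the surface:

$$\iint_{S(V)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS = \sum_{V_i \subset V} \iint_{S(V_i)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS.$$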

The goal is to divide the original volume into infinitely many infinitesimal volumes. As the volume is divided into smaller and smaller parts, the surface integral on the right, the flux out of each subvolume, approaches zero because the surface area S(Vi) approaches zero. However, from the definition of divergence, the ratio of flux $\Phi(V_i)$ out of each volume to the volume $|V_i|$, the part in parentheses below, does not in general vanish but approaches the divergence div F as the volume approaches zero:

$$\iint_{S(V)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS = \sum_{V_i \subset V} \left( \frac{1}{|V_i|} \iint_{S(V_i)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS \right) |V_i|.$$

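As long as the vector field F(x) has continuous derivatives, the sum above holds even in the limit when the volume is divided into infinitely small increments:

$$\iint_{S(V)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS = \lim_{|V_i| \to 0} \sum_{V_i \subset V} \left( \frac{1}{|V_i|} \iint_{S(V_i)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS \right) |V_i|.$$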

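As $|V_i|$ approaches zero volume, it becomes the infinitesimal dV, the part in parentheses becomes the divergence, and the sum becomes a volume integral over V:

$$\iint_{S(V)} \mathbf{F} \cdot \hat{\mathbf{n}}\,dS = \iiint_V \operatorname{div} \mathbf{F}\,dV.$$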

Since this derivation is coordinate free, it shows that the divergence does not depend on the coordinates used.
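You can watch numerically how the flux-to-volume ratio approaches the divergence. The sketch below shrinks a cube of side h about a fixed point and compares flux/h³ with div F at that point. The smooth non-polynomial field, the point, and the helper names are assumptions; the field is chosen so that the ratio is not exact for every h. For a smooth field the ratio equals the average of div F over the cube, so the error should shrink roughly like h².

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1].
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_1d(a, b):
    half, mid = (b - a) / 2, (a + b) / 2
    return [(mid + half * t, half * w) for t, w in zip(GL_NODES, GL_WEIGHTS)]


def field(x, y, z):
    # Illustrative smooth, non-polynomial field (assumption)
    return (y * math.sin(x), z * math.cos(y), x * z * z)


def divergence(x, y, z):
    # Divergence of `field`, computed by hand
    return y * math.cos(x) - z * math.sin(y) + 2 * x * z


def flux_over_volume(F, center, h):
    """Outward flux of F through the cube of side h centred at `center`, divided by h**3."""
    total = 0.0
    for axis in range(3):
        c_u, c_v = [c for k, c in enumerate(center) if k != axis]
        pu = gauss_1d(c_u - h / 2, c_u + h / 2)
        pv = gauss_1d(c_v - h / 2, c_v + h / 2)
        for sign in (1.0, -1.0):                 # +1: far face, -1: near face
            value = center[axis] + sign * h / 2
            for u, wu in pu:
                for v, wv in pv:
                    p = [u, v]
                    p.insert(axis, value)
                    total += sign * wu * wv * F(*p)[axis]
    return total / h ** 3


p0 = (0.3, 0.6, 0.2)
for h in (0.5, 0.1, 0.01):
    ratio = flux_over_volume(field, p0, h)
    print(f"h = {h}: ratio = {ratio:.8f}, error = {abs(ratio - divergence(*p0)):.2e}")
```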

Proofs

For bounded open subsets of Euclidean space

We are going to prove the following:

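Theorem. Let $\Omega \subset \mathbb{R}^n$ be open and bounded with $C^1$ boundary. If $u$ is $C^1$ on an open neighborhood $O$ of $\overline{\Omega}$, that is, $u \in C^1(O)$, then for each $i \in \{1, \dots, n\}$,

$$\int_\Omega u_{x_i}\,dV = \int_{\partial\Omega} u\,\nu_i\,dS,$$

where $\nu : \partial\Omega \to \mathbb{R}^n$ is the outward pointing unit normal vector to $\partial\Omega$. Equivalently,

$$\int_\Omega \nabla u\,dV = \int_{\partial\Omega} u\,\nu\,dS.$$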

Proof of Theorem.

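- The first step is to reduce to the case where $u \in C_c^1(\mathbb{R}^n)$. Pick $\phi \in C_c^\infty(O)$ such that $\phi = 1$ on $\overline{\Omega}$. Note that $\phi u \in C_c^1(O) \subset C_c^1(\mathbb{R}^n)$ and $\phi u = u$ on $\overline{\Omega}$. Hence it suffices to prove the theorem for $\phi u$, so we may assume that $u \in C_c^1(\mathbb{R}^n)$.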

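- Let $x_0 \in \partial\Omega$ be arbitrary. The assumption that $\overline{\Omega}$ has $C^1$ boundary means that there is an open neighborhood $U$ of $x_0$ in $\mathbb{R}^n$ such that $\partial\Omega \cap U$ is the graph of a $C^1$ function with $\Omega \cap U$ lying on one side of this graph. More precisely, this means that after a translation and rotation of $\Omega$, there are $r > 0$ and $h > 0$ and a $C^1$ function $g : \mathbb{R}^{n-1} \to \mathbb{R}$, such that with the notation

  $$x' = (x_1, \dots, x_{n-1}),$$

  it holds that

  $$U = \{x \in \mathbb{R}^n : |x'| < r \text{ and } |x_n - g(x')| < h\}$$

  and for $x \in U$,

  $$\begin{aligned} x_n = g(x') &\implies x \in \partial\Omega, \\ -h < x_n - g(x') < 0 &\implies x \in \Omega, \\ 0 < x_n - g(x') < h &\implies x \notin \Omega. \end{aligned} \tag{2}$$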

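  Since $\partial\Omega$ is compact, we can cover $\partial\Omega$ with finitely many neighborhoods $U_1, \dots, U_N$ of the above form. Note that $\{\Omega, U_1, \dots, U_N\}$ is an open cover of $\overline{\Omega} = \Omega \cup \partial\Omega$. By using a $C^\infty$ partition of unity subordinate to this cover, it suffices to prove the theorem in the case where either $u$ has compact support in $\Omega$ or $u$ has compact support in some $U_j$. If $u$ has compact support in $\Omega$, then for all $i \in \{1, \dots, n\}$,

  $$\int_\Omega u_{x_i}\,dV = \int_{\mathbb{R}^n} u_{x_i}\,dV = \int_{\mathbb{R}^{n-1}} \int_{-\infty}^{\infty} u_{x_i}(x)\,dx_i\,dx' = 0$$

  by the fundamental theorem of calculus, and $\int_{\partial\Omega} u\,\nu_i\,dS = 0$ since $u$ vanishes on a neighborhood of $\partial\Omega$. Thus the theorem holds for $u$ with compact support in $\Omega$, and we have reduced to the case where $u$ has compact support in some $U_j$.

- So assume $u$ has compact support in some $U_j$. The last step now is to show that the theorem is true by direct computation. Change notation to $U = U_j$, and bring in the notation from (2) used to describe $U$. Note that this means that we have rotated and translated $\Omega$. This is a valid reduction since the theorem is invariant under rotations and translations of coordinates. Since $u(x) = 0$ for $|x'| \geq r$ and for $|x_n - g(x')| \geq h$, we have for each $i \in \{1, \dots, n\}$ that

  $$\int_\Omega u_{x_i}\,dV = \int_{|x'| < r} \int_{g(x') - h}^{g(x')} u_{x_i}(x', x_n)\,dx_n\,dx' = \int_{\mathbb{R}^{n-1}} \int_{-\infty}^{g(x')} u_{x_i}(x', x_n)\,dx_n\,dx'.$$

  For $i = n$ we have by the fundamental theorem of calculus that

  $$\int_\Omega u_{x_n}\,dV = \int_{\mathbb{R}^{n-1}} u(x', g(x'))\,dx'.$$

  Now fix $i \in \{1, \dots, n-1\}$. Note that

  $$\int_\Omega u_{x_i}\,dV = \int_{\mathbb{R}^{n-1}} \int_{-\infty}^{0} u_{x_i}(x', g(x') + s)\,ds\,dx'.$$

  Define $v : \mathbb{R}^n \to \mathbb{R}$ by $v(x', s) = u(x', g(x') + s)$. By the chain rule,

  $$v_{x_i}(x', s) = u_{x_i}(x', g(x') + s) + u_{x_n}(x', g(x') + s)\,g_{x_i}(x').$$

  But since $v$ has compact support, we can integrate out $dx_i$ first to deduce that

  $$\int_{\mathbb{R}^{n-1}} \int_{-\infty}^{0} v_{x_i}(x', s)\,ds\,dx' = 0.$$

  Thus

  $$\int_\Omega u_{x_i}\,dV = \int_{\mathbb{R}^{n-1}} \int_{-\infty}^{0} -u_{x_n}(x', g(x') + s)\,g_{x_i}(x')\,ds\,dx' = \int_{\mathbb{R}^{n-1}} -u(x', g(x'))\,g_{x_i}(x')\,dx'.$$

  In summary, with $\nabla u = (u_{x_1}, \dots, u_{x_n})$ we have

  $$\int_\Omega \nabla u\,dV = \int_{\mathbb{R}^{n-1}} \int_{-\infty}^{g(x')} \nabla u\,dx_n\,dx' = \int_{\mathbb{R}^{n-1}} u(x', g(x'))\,(-\nabla g(x'), 1)\,dx'.$$

  Recall that the outward unit normal to the graph $\Gamma$ of $g$ at a point $(x', g(x')) \in \Gamma$ is $\nu(x', g(x')) = \frac{1}{\sqrt{1 + |\nabla g(x')|^2}}\,(-\nabla g(x'), 1)$ and that the surface element $dS$ is given by $dS = \sqrt{1 + |\nabla g(x')|^2}\,dx'$. Thus

  $$\int_\Omega \nabla u\,dV = \int_{\partial\Omega} u\,\nu\,dS.$$

  This completes the proof.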
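The componentwise identity proved above can be spot-checked in two dimensions. This is a numerical sanity check under stated assumptions, not part of the proof. The sketch takes Ω to be the unit disk and uses the hypothetical function $u = x^2 y + y$. It then compares $\int_\Omega u_{x_i}\,dV$ with $\int_{\partial\Omega} u\,\nu_i\,dS$ for i = 1, 2. Both quadratures are also assumptions: Gauss-Legendre in the radius, and the periodic trapezoid rule in the angle.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def u(x, y):
    # Hypothetical C^1 function chosen for the check: u = x^2 y + y
    return x * x * y + y


def grad_u(x, y):
    # Partial derivatives (u_x, u_y), computed by hand
    return (2 * x * y, x * x + 1)


def disk_integral(f, n_theta=32):
    """Integrate f(x, y) over the unit disk, using dA = r dr dtheta."""
    total = 0.0
    for k in range(n_theta):
        theta = 2 * math.pi * k / n_theta           # periodic trapezoid rule
        for t, w in zip(GL_NODES, GL_WEIGHTS):
            r = 0.5 * (t + 1)                        # map [-1, 1] to [0, 1]
            x, y = r * math.cos(theta), r * math.sin(theta)
            total += f(x, y) * r * 0.5 * w           # 0.5 = dr/dt
    return total * 2 * math.pi / n_theta


def boundary_integral(f, n=64):
    """Integrate f(x, y, nu) over the unit circle; nu = (cos s, sin s) is the outward normal."""
    total = 0.0
    for k in range(n):
        s = 2 * math.pi * k / n
        x, y = math.cos(s), math.sin(s)
        total += f(x, y, (x, y))
    return total * 2 * math.pi / n


for i in range(2):
    lhs = disk_integral(lambda x, y: grad_u(x, y)[i])
    rhs = boundary_integral(lambda x, y, nu: u(x, y) * nu[i])
    print(f"i = {i + 1}: {lhs:.12f} {rhs:.12f}")
```

For i = 1 both sides vanish by symmetry. For i = 2 both equal $\pi/4 + \pi = 5\pi/4$.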

For compact Riemannian manifolds with boundary

We are going to prove the following:

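Theorem. Let $\overline{\Omega}$ be a $C^2$ compact manifold with boundary with $C^1$ metric tensor $g$. Let $\Omega$ denote the manifold interior of $\overline{\Omega}$ and let $\partial\Omega$ denote the manifold boundary of $\overline{\Omega}$. Let $(\cdot, \cdot)$ denote $L^2(\overline{\Omega})$ inner products of functions and $\langle \cdot, \cdot \rangle$ denote inner products of vectors. Suppose $u \in C^1(\overline{\Omega}, \mathbb{R})$ and $X$ is a $C^1$ vector field on $\overline{\Omega}$. Then

$$(\operatorname{grad} u, X) = -(u, \operatorname{div} X) + \int_{\partial\Omega} \langle X, N \rangle u\,dS,$$

where $N$ is the outward-pointing unit normal vector to $\partial\Omega$.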

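Proof of Theorem. We use the Einstein summation convention. By using a partition of unity, we may assume that $u$ and $X$ have compact support in a coordinate patch $O \subset \overline{\Omega}$. First consider the case where the patch is disjoint from $\partial\Omega$. Then $O$ is identified with an open subset of $\mathbb{R}^n$ and integration by parts produces no boundary terms:

$$\begin{aligned} (\operatorname{grad} u, X) &= \int_O \langle \operatorname{grad} u, X \rangle \sqrt{g}\,dx \\ &= \int_O \partial_j u\,X^j \sqrt{g}\,dx \\ &= -\int_O u\,\partial_j\!\left(\sqrt{g}\,X^j\right) dx \\ &= -\int_O u\,\frac{1}{\sqrt{g}}\,\partial_j\!\left(\sqrt{g}\,X^j\right) \sqrt{g}\,dx \\ &= \left(u, -\frac{1}{\sqrt{g}}\,\partial_j\!\left(\sqrt{g}\,X^j\right)\right) \\ &= (u, -\operatorname{div} X). \end{aligned}$$

In the last equality we used the Voss-Weyl coordinate formula for the divergence, although the preceding identity could be used to define $-\operatorname{div}$ as the formal adjoint of $\operatorname{grad}$. Now suppose $O$ intersects $\partial\Omega$. Then $O$ is identified with an open set in $\mathbb{R}^n_+ = \{x \in \mathbb{R}^n : x_n \geq 0\}$. We zero extend $u$ and $X$ to $\mathbb{R}^n_+$ and perform integration by parts to obtain

$$\begin{aligned} (\operatorname{grad} u, X) &= \int_O \langle \operatorname{grad} u, X \rangle \sqrt{g}\,dx \\ &= \int_{\mathbb{R}^n_+} \partial_j u\,X^j \sqrt{g}\,dx \\ &= (u, -\operatorname{div} X) - \int_{\mathbb{R}^{n-1}} u(x', 0)\,X^n(x', 0)\,\sqrt{g(x', 0)}\,dx', \end{aligned}$$

where $dx' = dx_1 \cdots dx_{n-1}$. By a variant of the straightening theorem for vector fields, we may choose $O$ so that $\frac{\partial}{\partial x_n}$ is the inward unit normal $-N$ at $\partial\Omega$. In this case $\sqrt{g(x', 0)}\,dx' = \sqrt{g_{\partial\Omega}(x')}\,dx' = dS$ is the volume element on $\partial\Omega$, and $X^n = -\langle X, N \rangle$ on $\partial\Omega$, so the above formula reads

$$(\operatorname{grad} u, X) = (u, -\operatorname{div} X) + \int_{\partial\Omega} u\,\langle X, N \rangle\,dS.$$

This completes the proof.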

Corollaries

By replacing F in the divergence theorem with specific forms, other useful identities can be derived (cf. vector identities).

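- With $\mathbf{F} \to \mathbf{F}g$ for a scalar function g and a vector field F,

  $$\iiint_V \left[\mathbf{F} \cdot (\nabla g) + g\,(\nabla \cdot \mathbf{F})\right] dV = \iint_S g\,\mathbf{F} \cdot \mathbf{n}\,dS.$$

- A special case of this is $\mathbf{F} = \nabla f$, in which case the theorem is the basis for Green's identities.

- With $\mathbf{F} \to \mathbf{F} \times \mathbf{G}$ for two vector fields F and G, where $\times$ denotes a cross product,

  $$\iiint_V \nabla \cdot (\mathbf{F} \times \mathbf{G})\,dV = \iiint_V \left[\mathbf{G} \cdot (\nabla \times \mathbf{F}) - \mathbf{F} \cdot (\nabla \times \mathbf{G})\right] dV = \iint_S (\mathbf{F} \times \mathbf{G}) \cdot \mathbf{n}\,dS.$$

- With $\mathbf{F} \to \mathbf{F} \cdot \mathbf{G}$ for two vector fields F and G, where $\cdot$ denotes a dot product,

  $$\iiint_V \nabla (\mathbf{F} \cdot \mathbf{G})\,dV = \iiint_V \left[(\nabla \mathbf{G}) \cdot \mathbf{F} + (\nabla \mathbf{F}) \cdot \mathbf{G}\right] dV = \iint_S (\mathbf{F} \cdot \mathbf{G})\,\mathbf{n}\,dS.$$

- With $\mathbf{F} \to f\mathbf{c}$ for a scalar function f and vector field c:

  $$\iiint_V \mathbf{c} \cdot \nabla f\,dV = \iint_S (\mathbf{c} f) \cdot \mathbf{n}\,dS - \iiint_V f\,(\nabla \cdot \mathbf{c})\,dV.$$

- The last term on the right vanishes for constant $\mathbf{c}$ or any divergence-free (solenoidal) vector field, e.g. incompressible flows without sources or sinks such as phase change or chemical reactions. In particular, taking $\mathbf{c}$ to be constant:

  $$\iiint_V \nabla f\,dV = \iint_S f\,\mathbf{n}\,dS.$$

- With $\mathbf{F} \to \mathbf{c} \times \mathbf{F}$ for vector field F and constant vector c:

  $$\iiint_V \mathbf{c} \cdot (\nabla \times \mathbf{F})\,dV = \iint_S (\mathbf{F} \times \mathbf{c}) \cdot \mathbf{n}\,dS.$$

- By reordering the triple product on the right hand side and taking out the constant vector of the integral,

  $$\iiint_V (\nabla \times \mathbf{F})\,dV \cdot \mathbf{c} = \iint_S (d\mathbf{S} \times \mathbf{F}) \cdot \mathbf{c}.$$

- Hence,

  $$\iiint_V (\nabla \times \mathbf{F})\,dV = \iint_S \mathbf{n} \times \mathbf{F}\,dS.$$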
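The gradient form $\iiint_V \nabla f\,dV = \iint_S f\,\mathbf{n}\,dS$ from the list above can be checked componentwise on the unit cube. The scalar field $f = xy^2 + z^3$, the helper names and the Gauss-Legendre quadrature are illustrative assumptions.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_1d(a, b):
    half, mid = (b - a) / 2, (a + b) / 2
    return [(mid + half * t, half * w) for t, w in zip(GL_NODES, GL_WEIGHTS)]


def f(x, y, z):
    # Illustrative scalar field (assumption): f = x y^2 + z^3
    return x * y * y + z ** 3


def grad_f(x, y, z):
    # Gradient of f, computed by hand
    return (y * y, 2 * x * y, 3 * z * z)


PTS = gauss_1d(0.0, 1.0)  # quadrature points on [0, 1] for the unit cube


def volume_integral_of_gradient():
    """Componentwise integral of grad f over the unit cube."""
    acc = [0.0, 0.0, 0.0]
    for x, wx in PTS:
        for y, wy in PTS:
            for z, wz in PTS:
                for i, gi in enumerate(grad_f(x, y, z)):
                    acc[i] += wx * wy * wz * gi
    return acc


def surface_integral_of_f_n():
    """Integral of f n dS over the cube's boundary; on each face n is a signed unit axis vector."""
    acc = [0.0, 0.0, 0.0]
    for axis in range(3):
        for value, sign in ((1.0, 1.0), (0.0, -1.0)):
            for u, wu in PTS:
                for v, wv in PTS:
                    p = [u, v]
                    p.insert(axis, value)
                    acc[axis] += sign * wu * wv * f(*p)
    return acc


print(volume_integral_of_gradient())
print(surface_integral_of_f_n())
```

Up to rounding, both vectors equal $(1/3, 1/2, 1)$.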

Example

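Suppose we wish to evaluate

$$\iint_S \mathbf{F} \cdot \mathbf{n}\,dS,$$

where S is the unit sphere defined by

$$S = \left\{(x, y, z) \in \mathbb{R}^3 : x^2 + y^2 + z^2 = 1\right\},$$

and F is the vector field

$$\mathbf{F} = 2x\,\mathbf{i} + y^2\,\mathbf{j} + z^2\,\mathbf{k}.$$

The direct computation of this integral is quite difficult, but we can simplify the derivation of the result using the divergence theorem, because the divergence theorem says that the integral is equal to:

$$\iiint_W (\nabla \cdot \mathbf{F})\,dV = 2\iiint_W (1 + y + z)\,dV = \iiint_W 2\,dV + \iiint_W 2y\,dV + \iiint_W 2z\,dV,$$

where W is the unit ball:

$$W = \left\{(x, y, z) \in \mathbb{R}^3 : x^2 + y^2 + z^2 \leq 1\right\}.$$

Since the function y is positive in one hemisphere of W and negative in the other, in an equal and opposite way, its total integral over W is zero. The same is true for z:

$$\iiint_W 2y\,dV = \iiint_W 2z\,dV = 0.$$

Therefore,

$$\iint_S \mathbf{F} \cdot \mathbf{n}\,dS = \iiint_W 2\,dV = \frac{8\pi}{3},$$

because the unit ball W has volume 4π/3.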
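Evaluating the surface integral directly by numerical quadrature confirms this result. The sketch assumes a particular parametrization of the unit sphere. It uses $w = \cos\theta \in [-1, 1]$, handled with Gauss-Legendre, and the azimuth $\varphi$, handled with the periodic trapezoid rule. With this choice $dS = dw\,d\varphi$, and the outward unit normal equals the position vector. The function name is hypothetical.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def field(x, y, z):
    # F = 2x i + y^2 j + z^2 k, as in the example above
    return (2 * x, y * y, z * z)


def sphere_flux(F, n_phi=32):
    """Outward flux of F through the unit sphere.

    w = cos(polar angle) in [-1, 1] (Gauss-Legendre) and azimuth phi (periodic
    trapezoid rule), so dS = dw dphi; the outward unit normal at (x, y, z) is
    (x, y, z) itself.
    """
    total = 0.0
    for k in range(n_phi):
        phi = 2 * math.pi * k / n_phi
        for w, weight in zip(GL_NODES, GL_WEIGHTS):
            rho = math.sqrt(1 - w * w)
            x, y, z = rho * math.cos(phi), rho * math.sin(phi), w
            fx, fy, fz = F(x, y, z)
            total += weight * (fx * x + fy * y + fz * z)
    return total * 2 * math.pi / n_phi


print(sphere_flux(field), 8 * math.pi / 3)
```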

Applications

Differential and integral forms of physical laws

As a result of the divergence theorem, a host of physical laws can be written in both a differential form (where one quantity is the divergence of another) and an integral form (where the flux of one quantity through a closed surface is equal to another quantity). Three examples are Gauss's law (in electrostatics), Gauss's law for magnetism, and Gauss's law for gravity.
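For example, here is a worked sketch for Gauss's law in electrostatics. Integrating the differential form over a region V and applying the divergence theorem gives the integral form. Conversely, if the integral form holds for every region V and the fields are continuous, the differential form follows. The notation is standard SI and is not defined in the text above: $\mathbf{E}$ is the electric field, $\rho$ the charge density, $\varepsilon_0$ the vacuum permittivity, and $Q$ the enclosed charge.

```latex
\nabla\cdot\mathbf{E} = \frac{\rho}{\varepsilon_0}
\quad\Longrightarrow\quad
\iint_{\partial V} \mathbf{E}\cdot\hat{\mathbf{n}}\,dS
  = \iiint_V \nabla\cdot\mathbf{E}\,dV
  = \frac{1}{\varepsilon_0}\iiint_V \rho\,dV
  = \frac{Q}{\varepsilon_0}
```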

Continuity equations

Main article: continuity equation

Continuity equations offer more examples of laws with both differential and integral forms, related to each other by the divergence theorem. In fluid dynamics, electromagnetism, quantum mechanics, relativity theory, and a number of other fields, there are continuity equations that describe the conservation of mass, momentum, energy, probability, or other quantities. Generically, these equations state that the divergence of the flow of the conserved quantity is equal to the distribution of sources or sinks of that quantity. The divergence theorem states that any such continuity equation can be written in a differential form (in terms of a divergence) and an integral form (in terms of a flux).
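Here is a sketch in generic notation; the symbols are assumptions and are not taken from the text above. Let $\rho$ be the density of the conserved quantity, $\mathbf{j}$ its flux density, and $\sigma$ its net rate of production per unit volume. Assume V is fixed in time and $\rho$ is smooth enough to differentiate under the integral sign. Integrating the differential form over V and applying the divergence theorem to the $\nabla\cdot\mathbf{j}$ term then yields the integral form.

```latex
\frac{\partial \rho}{\partial t} + \nabla\cdot\mathbf{j} = \sigma
\quad\Longrightarrow\quad
\frac{d}{dt}\iiint_V \rho\,dV + \iint_{\partial V} \mathbf{j}\cdot\hat{\mathbf{n}}\,dS
  = \iiint_V \sigma\,dV
```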

Inverse-square laws

Any inverse-square law can instead be written in a Gauss's law-type form (with a differential and integral form, as described above). Two examples are Gauss's law (in electrostatics), which follows from the inverse-square Coulomb's law, and Gauss's law for gravity, which follows from the inverse-square Newton's law of universal gravitation. The derivation of the Gauss's law-type equation from the inverse-square formulation or vice versa is exactly the same in both cases; see either of those articles for details.

History

Joseph-Louis Lagrange introduced the notion of surface integrals in 1760 and again in more general terms in 1811, in the second edition of his Mécanique Analytique. Lagrange employed surface integrals in his work on fluid mechanics. In his 1762 paper on sound, he treated a special case of the divergence theorem.

Carl Friedrich Gauss was also using surface integrals while working on the gravitational attraction of an elliptical spheroid in 1813, when he proved special cases of the divergence theorem. He proved additional special cases in 1833 and 1839. But it was Mikhail Ostrogradsky who gave the first proof of the general theorem, in 1826, as part of his investigation of heat flow. Special cases were proven by George Green in 1828 in An Essay on the Application of Mathematical Analysis to the Theories of Electricity and Magnetism, by Siméon Denis Poisson in 1824 in a paper on magnetism, and by Frédéric Sarrus in 1828 in his work on floating bodies.

Worked examples

Example 1

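To verify the planar variant of the divergence theorem for a region $R$:

$$R = \left\{(x, y) \in \mathbb{R}^2 : x^2 + y^2 \leq 1\right\},$$

and the vector field:

$$\mathbf{F}(x, y) = 2y\,\mathbf{i} + 5x\,\mathbf{j}.$$

The boundary of $R$ is the unit circle, $C$, that can be represented parametrically by:

$$x = \cos(s), \quad y = \sin(s),$$

such that $0 \leq s \leq 2\pi$, where $s$ is the arc length from the point $s = 0$ to the point $P$ on $C$. Then a vector equation of $C$ is

$$C(s) = \cos(s)\,\mathbf{i} + \sin(s)\,\mathbf{j}.$$

At a point $P$ on $C$:

$$P = (\cos(s), \sin(s)) \implies \mathbf{F} = 2\sin(s)\,\mathbf{i} + 5\cos(s)\,\mathbf{j}.$$

Since the outward unit normal to the unit circle at $P$ is $\mathbf{n} = \cos(s)\,\mathbf{i} + \sin(s)\,\mathbf{j}$,

$$\oint_C \mathbf{F} \cdot \mathbf{n}\,ds = \int_0^{2\pi} \left(2\sin(s)\,\mathbf{i} + 5\cos(s)\,\mathbf{j}\right) \cdot \left(\cos(s)\,\mathbf{i} + \sin(s)\,\mathbf{j}\right) ds = \int_0^{2\pi} \left(2\sin(s)\cos(s) + 5\sin(s)\cos(s)\right) ds = 7\int_0^{2\pi} \sin(s)\cos(s)\,ds = 0.$$

Because $M = 2y$ is the $\mathbf{i}$-component of $\mathbf{F}$, we can evaluate $\frac{\partial M}{\partial x} = 0$, and because $N = 5x$ is the $\mathbf{j}$-component, $\frac{\partial N}{\partial y} = 0$. Thus

$$\iint_R \nabla \cdot \mathbf{F}\,dA = \iint_R \left(\frac{\partial M}{\partial x} + \frac{\partial N}{\partial y}\right) dA = 0.$$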
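Both sides of this example can be reproduced numerically. The sketch assumes a uniform grid in the arc-length parameter s, which is the periodic trapezoid rule and is very accurate for smooth periodic integrands. As a cross-check of the area side, it also estimates the divergence by central differences. The function names and the step size `h` are hypothetical.

```python
import math


def field(x, y):
    # F(x, y) = 2y i + 5x j, as in the example above
    return (2 * y, 5 * x)


def circle_flux(F, n=64):
    """Outward flux of F through the unit circle via the periodic trapezoid rule in s."""
    total = 0.0
    for k in range(n):
        s = 2 * math.pi * k / n
        x, y = math.cos(s), math.sin(s)  # the point P, which is also the outward unit normal
        fx, fy = F(x, y)
        total += fx * x + fy * y
    return total * 2 * math.pi / n


def divergence(F, x, y, h=1e-5):
    """Central-difference estimate of dM/dx + dN/dy for F = (M, N)."""
    dM_dx = (F(x + h, y)[0] - F(x - h, y)[0]) / (2 * h)
    dN_dy = (F(x, y + h)[1] - F(x, y - h)[1]) / (2 * h)
    return dM_dx + dN_dy


print(circle_flux(field))            # close to 0
print(divergence(field, 0.3, -0.4))  # 0.0: M does not depend on x, N does not depend on y
```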

Example 2

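Suppose we want to evaluate the flux of the vector field defined by $\mathbf{F} = 2x^2\,\mathbf{i} + 2y^2\,\mathbf{j} + 2z^2\,\mathbf{k}$ out of the box bounded by the following inequalities:

$$\{0 \leq x \leq 3\}, \quad \{-2 \leq y \leq 2\}, \quad \{0 \leq z \leq 2\pi\}.$$

By the divergence theorem,

$$\iiint_V (\nabla \cdot \mathbf{F})\,dV = \iint_S (\mathbf{F} \cdot \mathbf{n})\,dS.$$

We now need to determine the divergence of $\mathbf{F}$. If $\mathbf{F}$ is a three-dimensional vector field, then the divergence of $\mathbf{F}$ is given by $\nabla \cdot \mathbf{F} = \left(\frac{\partial}{\partial x}\mathbf{i} + \frac{\partial}{\partial y}\mathbf{j} + \frac{\partial}{\partial z}\mathbf{k}\right) \cdot \mathbf{F}$.

Thus, we can set up the flux integral $I = \iint_S \mathbf{F} \cdot \mathbf{n}\,dS$ as follows:

$$I = \iiint_V \nabla \cdot \mathbf{F}\,dV = \iiint_V \left(\frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} + \frac{\partial F_z}{\partial z}\right) dV = \iiint_V (4x + 4y + 4z)\,dV = \int_0^{2\pi} \int_{-2}^{2} \int_0^{3} (4x + 4y + 4z)\,dx\,dy\,dz.$$

Now that we have set up the integral, we can evaluate it:

$$\int_0^{2\pi} \int_{-2}^{2} \int_0^{3} (4x + 4y + 4z)\,dx\,dy\,dz = \int_0^{2\pi} \int_{-2}^{2} (12y + 12z + 18)\,dy\,dz = \int_0^{2\pi} 24(2z + 3)\,dz = 48\pi(2\pi + 3).$$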
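Both sides of the theorem for this box can be checked numerically. The sketch assumes a 3-point Gauss-Legendre rule per axis, which is exact here because every integrand is a low-degree polynomial, and uses hypothetical helper names. It evaluates the volume integral of $4x + 4y + 4z$ and, independently, the outward flux through the six faces.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]; exact for polynomials of degree <= 5.
GL_NODES = (-math.sqrt(3 / 5), 0.0, math.sqrt(3 / 5))
GL_WEIGHTS = (5 / 9, 8 / 9, 5 / 9)


def gauss_1d(a, b):
    half, mid = (b - a) / 2, (a + b) / 2
    return [(mid + half * t, half * w) for t, w in zip(GL_NODES, GL_WEIGHTS)]


BOX = ((0.0, 3.0), (-2.0, 2.0), (0.0, 2 * math.pi))   # x, y, z ranges


def field(x, y, z):
    # F = 2x^2 i + 2y^2 j + 2z^2 k
    return (2 * x * x, 2 * y * y, 2 * z * z)


def divergence(x, y, z):
    return 4 * x + 4 * y + 4 * z


def volume_integral(f, box):
    px, py, pz = (gauss_1d(a, b) for a, b in box)
    return sum(wx * wy * wz * f(x, y, z)
               for x, wx in px for y, wy in py for z, wz in pz)


def box_flux(F, box):
    """Outward flux of F through the six faces of an axis-aligned box."""
    total = 0.0
    for axis in range(3):
        (a0, a1), (b0, b1) = [box[k] for k in range(3) if k != axis]
        pu, pv = gauss_1d(a0, a1), gauss_1d(b0, b1)
        for value, sign in ((box[axis][1], 1.0), (box[axis][0], -1.0)):
            for u, wu in pu:
                for v, wv in pv:
                    p = [u, v]
                    p.insert(axis, value)
                    total += sign * wu * wv * F(*p)[axis]
    return total


expected = 48 * math.pi * (2 * math.pi + 3)
print(volume_integral(divergence, BOX), box_flux(field, BOX), expected)
```

Both numbers agree with $48\pi(2\pi + 3) \approx 1399.87$. On the surface side, only the faces x = 3 and z = 2π contribute net flux, $144\pi$ and $96\pi^2$ respectively. F_x and F_z vanish on x = 0 and z = 0, and the two y-faces cancel.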

Generalizations

Multiple dimensions

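One can use the generalised Stokes' theorem to equate the n-dimensional volume integral of the divergence of a vector field F over a region U to the (n − 1)-dimensional surface integral of F over the boundary of U:

$$\underbrace{\int \cdots \int_U}_{n} \nabla \cdot \mathbf{F}\,dV = \underbrace{\oint \cdots \oint_{\partial U}}_{n-1} \mathbf{F} \cdot \mathbf{n}\,dS.$$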

This equation is also known as the divergence theorem.

When n = 2, this is equivalent to Green's theorem.

When n = 1, it reduces to the fundamental theorem of calculus, part 2.
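The two low-dimensional cases can be written out as a worked sketch. For n = 1 the region is an interval $U = [a, b]$, and its boundary consists of the two endpoints, with outward normals $-1$ at $a$ and $+1$ at $b$. The "surface integral" then becomes a signed sum of point values. For n = 2, take $\mathbf{F} = (M, N)$ and a boundary curve $C$ traversed counterclockwise. The outward normal element is then $\mathbf{n}\,ds = (dy, -dx)$, and the result is Green's theorem applied to the pair $(-N, M)$.

```latex
% n = 1: U = [a, b], boundary {a, b}
\int_a^b F'(x)\,dx = F(b)\,(+1) + F(a)\,(-1) = F(b) - F(a)

% n = 2: F = (M, N), C = boundary of R traversed counterclockwise
\iint_R \left(\frac{\partial M}{\partial x} + \frac{\partial N}{\partial y}\right) dA
  = \oint_C \mathbf{F}\cdot\mathbf{n}\,ds
  = \oint_C \left(M\,dy - N\,dx\right)
```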

Tensor fields

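Main article: Tensor field

Writing the theorem in Einstein notation:

$$\iiint_V \frac{\partial F_i}{\partial x_i}\,dV = \iint_S F_i\,n_i\,dS.$$

Suggestively, replacing the vector field F with a rank-n tensor field T, this can be generalized to:

$$\iiint_V \frac{\partial T_{i_1 i_2 \cdots i_q \cdots i_n}}{\partial x_{i_q}}\,dV = \iint_S T_{i_1 i_2 \cdots i_q \cdots i_n}\,n_{i_q}\,dS,$$

where on each side, tensor contraction occurs for at least one index. This form of the theorem is still in 3d: each index takes values 1, 2, and 3. It can be generalized further still to higher (or lower) dimensions (for example to 4d spacetime in general relativity).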

See also

References

- Katz, Victor J. (1979). "The history of Stokes's theorem". Mathematics Magazine. 52 (3): 146–156. doi:10.2307/2690275. JSTOR 2690275. reprinted in Anderson, Marlow (2009). Who Gave You the Epsilon?: And Other Tales of Mathematical History. Mathematical Association of America. pp. 78–79. ISBN 978-0-88385-569-0.

- R. G. Lerner; G. L. Trigg (1994). Encyclopaedia of Physics (2nd ed.). VCH. ISBN 978-3-527-26954-9.

- Byron, Frederick; Fuller, Robert (1992), Mathematics of Classical and Quantum Physics, Dover Publications, p. 22, ISBN 978-0-486-67164-2

- Wylie, C. Ray Jr. Advanced Engineering Mathematics, 3rd Ed. McGraw-Hill. pp. 372–373.

- Kreyszig, Erwin; Kreyszig, Herbert; Norminton, Edward J. (2011). Advanced Engineering Mathematics (10 ed.). John Wiley and Sons. pp. 453–456. ISBN 978-0-470-45836-5.

- Benford, Frank A. (May 2007). "Notes on Vector Calculus" (PDF). Course materials for Math 105: Multivariable Calculus. Prof. Steven Miller's webpage, Williams College. Retrieved 14 March 2022.

- Purcell, Edward M.; David J. Morin (2013). Electricity and Magnetism. Cambridge Univ. Press. pp. 56–58. ISBN 978-1-107-01402-2.

- Alt, Hans Wilhelm (2016). "Linear Functional Analysis". Universitext. London: Springer London. pp. 259–261, 270–272. doi:10.1007/978-1-4471-7280-2. ISBN 978-1-4471-7279-6. ISSN 0172-5939.

- Taylor, Michael E. (2011). "Partial Differential Equations I". Applied Mathematical Sciences. Vol. 115. New York, NY: Springer New York. pp. 178–179. doi:10.1007/978-1-4419-7055-8. ISBN 978-1-4419-7054-1. ISSN 0066-5452.

- M. R. Spiegel; S. Lipschutz; D. Spellman (2009). Vector Analysis. Schaum's Outlines (2nd ed.). USA: McGraw Hill. ISBN 978-0-07-161545-7.

- MathWorld

- C.B. Parker (1994). McGraw Hill Encyclopaedia of Physics (2nd ed.). McGraw Hill. ISBN 978-0-07-051400-3.

- Katz, Victor (2009). "Chapter 22: Vector Analysis". A History of Mathematics: An Introduction. Addison-Wesley. pp. 808–9. ISBN 978-0-321-38700-4.

- In his 1762 paper on sound, Lagrange treats a special case of the divergence theorem: Lagrange (1762) "Nouvelles recherches sur la nature et la propagation du son" (New researches on the nature and propagation of sound), Miscellanea Taurinensia (also known as: Mélanges de Turin), 2: 11–172. This article is reprinted as: "Nouvelles recherches sur la nature et la propagation du son" in: J.A. Serret, ed., Oeuvres de Lagrange, (Paris, France: Gauthier-Villars, 1867), vol. 1, pages 151–316; on pages 263–265, Lagrange transforms triple integrals into double integrals using integration by parts.

- C. F. Gauss (1813) "Theoria attractionis corporum sphaeroidicorum ellipticorum homogeneorum methodo nova tractata," Commentationes societatis regiae scientiarium Gottingensis recentiores, 2: 355–378; Gauss considered a special case of the theorem; see the 4th, 5th, and 6th pages of his article.

- Katz, Victor (May 1979). "A History of Stokes' Theorem". Mathematics Magazine. 52 (3): 146–156. doi:10.1080/0025570X.1979.11976770. JSTOR 2690275.

- Mikhail Ostrogradsky presented his proof of the divergence theorem to the Paris Academy in 1826; however, his work was not published by the Academy. He returned to St. Petersburg, Russia, where in 1828–1829 he read the work that he had done in France to the St. Petersburg Academy, which published his work in abbreviated form in 1831.

- His proof of the divergence theorem – "Démonstration d'un théorème du calcul intégral" (Proof of a theorem in integral calculus) – which he had read to the Paris Academy on February 13, 1826, was translated, in 1965, into Russian together with another article by him. See: Юшкевич А.П. (Yushkevich A.P.) and Антропова В.И. (Antropov V.I.) (1965) "Неопубликованные работы М.В. Остроградского" (Unpublished works of MV Ostrogradskii), Историко-математические исследования (Istoriko-Matematicheskie Issledovaniya / Historical-Mathematical Studies), 16: 49–96; see the section titled: "Остроградский М.В. Доказательство одной теоремы интегрального исчисления" (Ostrogradskii M. V. Dokazatelstvo odnoy teoremy integralnogo ischislenia / Ostragradsky M.V. Proof of a theorem in integral calculus).

- M. Ostrogradsky (presented: November 5, 1828; published: 1831) "Première note sur la théorie de la chaleur" (First note on the theory of heat) Mémoires de l'Académie impériale des sciences de St. Pétersbourg, series 6, 1: 129–133; for an abbreviated version of his proof of the divergence theorem, see pages 130–131.

- Victor J. Katz (May 1979) "The history of Stokes' theorem," Mathematics Magazine, 52(3): 146–156; for Ostrogradsky's proof of the divergence theorem, see pages 147–148.

- George Green, An Essay on the Application of Mathematical Analysis to the Theories of Electricity and Magnetism (Nottingham, England: T. Wheelhouse, 1828). A form of the "divergence theorem" appears on pages 10–12.

- Other early investigators who used some form of the divergence theorem include:

- Poisson (presented: February 2, 1824; published: 1826) "Mémoire sur la théorie du magnétisme" (Memoir on the theory of magnetism), Mémoires de l'Académie des sciences de l'Institut de France, 5: 247–338; on pages 294–296, Poisson transforms a volume integral (which is used to evaluate a quantity Q) into a surface integral. To make this transformation, Poisson follows the same procedure that is used to prove the divergence theorem.

- Frédéric Sarrus (1828) "Mémoire sur les oscillations des corps flottans" (Memoir on the oscillations of floating bodies), Annales de mathématiques pures et appliquées (Nismes), 19: 185–211.

- K.F. Riley; M.P. Hobson; S.J. Bence (2010). Mathematical methods for physics and engineering. Cambridge University Press. ISBN 978-0-521-86153-3.

- see for example:

J.A. Wheeler; C. Misner; K.S. Thorne (1973). Gravitation. W.H. Freeman & Co. pp. 85–86, §3.5. ISBN 978-0-7167-0344-0., and

R. Penrose (2007). The Road to Reality. Vintage books. ISBN 978-0-679-77631-4.

External links

- "Ostrogradski formula", Encyclopedia of Mathematics, EMS Press, 2001

- Differential Operators and the Divergence Theorem at MathPages

- The Divergence (Gauss) Theorem by Nick Bykov, Wolfram Demonstrations Project.

- Weisstein, Eric W. "Divergence Theorem". MathWorld. – This article was originally based on the GFDL article from PlanetMath at https://web.archive.org/web/20021029094728/http://planetmath.org/encyclopedia/Divergence.html
