Orientation defined by an ordered set of vectors.

Reversed orientation corresponds to negating the exterior product.

Geometric interpretation of grade n elements in a real exterior algebra for n = 0 (signed point), 1 (directed line segment, or vector), 2 (oriented plane element), 3 (oriented volume). The exterior product of n vectors can be visualized as any n-dimensional shape (e.g. n-parallelotope, n-ellipsoid), with magnitude (hypervolume) and with orientation defined by that of its (n − 1)-dimensional boundary and on which side the interior is.

In mathematics, the exterior algebra or Grassmann algebra of a vector space $V$ is an associative algebra that contains $V$ and has a product, called the exterior product or wedge product and denoted by $\wedge$, such that $v \wedge v = 0$ for every vector $v$ in $V$. The exterior algebra is named after Hermann Grassmann, and the names of the product come from the "wedge" symbol $\wedge$ and the fact that the product of two elements of $V$ is "outside" $V$.

The wedge product of $k$ vectors $v_1 \wedge v_2 \wedge \dots \wedge v_k$ is called a blade of degree $k$ or $k$-blade. The wedge product was introduced originally as an algebraic construction used in geometry to study areas, volumes, and their higher-dimensional analogues: the magnitude of a 2-blade $v \wedge w$ is the area of the parallelogram defined by $v$ and $w$, and, more generally, the magnitude of a $k$-blade is the (hyper)volume of the parallelotope defined by the constituent vectors. The alternating property that $v \wedge v = 0$ implies the skew-symmetric property that $v \wedge w = -w \wedge v$, and more generally any blade flips sign whenever two of its constituent vectors are exchanged, corresponding to a parallelotope of opposite orientation.

The full exterior algebra contains objects that are not themselves blades, but linear combinations of blades; a sum of blades of homogeneous degree $k$ is called a k-vector, while a more general sum of blades of arbitrary degree is called a multivector. The linear span of the $k$-blades is called the $k$-th exterior power of $V$. The exterior algebra is the direct sum of the $k$-th exterior powers of $V$, and this makes the exterior algebra a graded algebra.

The exterior algebra is universal in the sense that every equation that relates elements of $V$ in the exterior algebra is also valid in every associative algebra that contains $V$ and in which the square of every element of $V$ is zero.

The definition of the exterior algebra can be extended for spaces built from vector spaces, such as vector fields and functions whose domain is a vector space. Moreover, the field of scalars may be any field (however for fields of characteristic two, the above condition $v \wedge v = 0$ must be replaced with $v \wedge w + w \wedge v = 0$, which is equivalent in other characteristics). More generally, the exterior algebra can be defined for modules over a commutative ring. In particular, the algebra of differential forms in $k$ variables is an exterior algebra over the ring of the smooth functions in $k$ variables.

Motivating examples

Areas in the plane

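The two-dimensional Euclidean vector space $\mathbf{R}^2$ is a real vector space equipped with a basis consisting of a pair of orthogonal unit vectors

$$
e_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \qquad e_2 = \begin{bmatrix} 0 \\ 1 \end{bmatrix}.
$$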

Suppose that

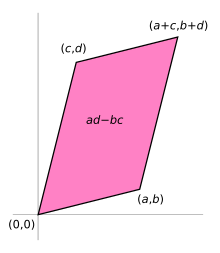

are a pair of given vectors in , written in components. There is a unique parallelogram having and as two of its sides. The area of this parallelogram is given by the standard determinant formula:

Consider now the exterior product of $v$ and $w$:

$$
\begin{aligned}
v \wedge w &= (a e_1 + b e_2) \wedge (c e_1 + d e_2) \\
&= ac\, e_1 \wedge e_1 + ad\, e_1 \wedge e_2 + bc\, e_2 \wedge e_1 + bd\, e_2 \wedge e_2 \\
&= (ad - bc)\, e_1 \wedge e_2,
\end{aligned}
$$

where the first step uses the distributive law for the exterior product, and the last uses the fact that the exterior product is an alternating map, and in particular $e_2 \wedge e_1 = -(e_1 \wedge e_2)$. (The fact that the exterior product is an alternating map also forces $e_1 \wedge e_1 = e_2 \wedge e_2 = 0$.) Note that the coefficient in this last expression is precisely the determinant of the matrix $\begin{bmatrix} v & w \end{bmatrix}$. The fact that this may be positive or negative has the intuitive meaning that $v$ and $w$ may be oriented in a counterclockwise or clockwise sense as the vertices of the parallelogram they define. Such an area is called the signed area of the parallelogram: the absolute value of the signed area is the ordinary area, and the sign determines its orientation.

The fact that this coefficient is the signed area is not an accident. In fact, it is relatively easy to see that the exterior product should be related to the signed area if one tries to axiomatize this area as an algebraic construct. In detail, if A(v, w) denotes the signed area of the parallelogram of which the pair of vectors v and w form two adjacent sides, then A must satisfy the following properties:

- A(rv, sw) = rsA(v, w) for any real numbers r and s, since rescaling either of the sides rescales the area by the same amount (and reversing the direction of one of the sides reverses the orientation of the parallelogram).

- A(v, v) = 0, since the area of the degenerate parallelogram determined by v (i.e., a line segment) is zero.

- A(w, v) = −A(v, w), since interchanging the roles of v and w reverses the orientation of the parallelogram.

- A(v + rw, w) = A(v, w) for any real number r, since adding a multiple of w to v affects neither the base nor the height of the parallelogram and consequently preserves its area.

- A(e1, e2) = 1, since the area of the unit square is one.

With the exception of the last property, the exterior product of two vectors satisfies the same properties as the area. In a certain sense, the exterior product generalizes the final property by allowing the area of a parallelogram to be compared to that of any chosen parallelogram in a parallel plane (here, the one with sides e1 and e2). In other words, the exterior product provides a basis-independent formulation of area.
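The listed properties of the signed area can be checked numerically. The following is a minimal Python sketch, assuming vectors are given as pairs of coordinates in the basis e1, e2; the function name `signed_area` and the sample vectors are illustrative choices, not part of the article.

```python
def signed_area(v, w):
    """Coefficient of e1 ∧ e2 in v ∧ w, for v = (a, b) and w = (c, d)."""
    a, b = v
    c, d = w
    return a * d - b * c

e1, e2 = (1, 0), (0, 1)
v, w = (3, 1), (1, 2)   # sample vectors (assumed for illustration)
r, s = 4, -2            # sample scalars

# Rescaling, degeneracy, antisymmetry, shear invariance, normalization.
rv = (r * v[0], r * v[1])
sw = (s * w[0], s * w[1])
sheared = (v[0] + r * w[0], v[1] + r * w[1])

print(signed_area(rv, sw) == r * s * signed_area(v, w))
print(signed_area(v, v) == 0)
print(signed_area(w, v) == -signed_area(v, w))
print(signed_area(sheared, w) == signed_area(v, w))
print(signed_area(e1, e2) == 1)
```

Each line prints whether the corresponding property holds for the chosen sample values.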

Cross and triple products

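For vectors in $\mathbf{R}^3$, the exterior algebra is closely related to the cross product and triple product. Using the standard basis $\{e_1, e_2, e_3\}$, the exterior product of a pair of vectors

$$
u = u_1 e_1 + u_2 e_2 + u_3 e_3
$$

and

$$
v = v_1 e_1 + v_2 e_2 + v_3 e_3
$$

is

$$
u \wedge v = (u_1 v_2 - u_2 v_1)(e_1 \wedge e_2) + (u_3 v_1 - u_1 v_3)(e_3 \wedge e_1) + (u_2 v_3 - u_3 v_2)(e_2 \wedge e_3),
$$

where $\{e_1 \wedge e_2, e_3 \wedge e_1, e_2 \wedge e_3\}$ is the basis for the three-dimensional space $\bigwedge^2(\mathbf{R}^3)$. The coefficients above are the same as those in the usual definition of the cross product of vectors in three dimensions, the only difference being that the exterior product is not an ordinary vector, but instead is a bivector.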

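Bringing in a third vector

$$
w = w_1 e_1 + w_2 e_2 + w_3 e_3,
$$

the exterior product of three vectors is

$$
u \wedge v \wedge w = (u_1 v_2 w_3 + u_2 v_3 w_1 + u_3 v_1 w_2 - u_1 v_3 w_2 - u_2 v_1 w_3 - u_3 v_2 w_1)(e_1 \wedge e_2 \wedge e_3),
$$

where $e_1 \wedge e_2 \wedge e_3$ is the basis vector for the one-dimensional space $\bigwedge^3(\mathbf{R}^3)$. The scalar coefficient is the triple product of the three vectors.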

The cross product and triple product in three dimensions each admit both geometric and algebraic interpretations. The cross product u × v can be interpreted as a vector which is perpendicular to both u and v and whose magnitude is equal to the area of the parallelogram determined by the two vectors. It can also be interpreted as the vector consisting of the minors of the matrix with columns u and v. The triple product of u, v, and w is geometrically a (signed) volume. Algebraically, it is the determinant of the matrix with columns u, v, and w. The exterior product in three dimensions allows for similar interpretations. In fact, in the presence of a positively oriented orthonormal basis, the exterior product generalizes these notions to higher dimensions.
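The correspondence with the cross and triple products can be illustrated with a short Python sketch. It assumes vectors are coordinate triples in the standard basis; the function names `wedge3`, `cross` and `triple` and the sample vectors are illustrative, not taken from the article.

```python
def wedge3(u, v):
    """Coefficients of u ∧ v on the basis (e1∧e2, e3∧e1, e2∧e3)."""
    return (u[0] * v[1] - u[1] * v[0],
            u[2] * v[0] - u[0] * v[2],
            u[1] * v[2] - u[2] * v[1])

def cross(u, v):
    """Usual cross product, components along (e1, e2, e3)."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def triple(u, v, w):
    """Coefficient of e1∧e2∧e3 in u ∧ v ∧ w."""
    return (u[0] * v[1] * w[2] + u[1] * v[2] * w[0] + u[2] * v[0] * w[1]
            - u[0] * v[2] * w[1] - u[1] * v[0] * w[2] - u[2] * v[1] * w[0])

u, v, w = (1, 2, 3), (4, 5, 7), (7, 8, 10)   # sample vectors (assumed)

# The bivector coefficients are the cross product components in reverse order:
# e2∧e3 pairs with e1, e3∧e1 with e2, and e1∧e2 with e3.
print(wedge3(u, v)[::-1] == cross(u, v))

# The coefficient of e1∧e2∧e3 equals the scalar triple product (u × v) · w.
dot = sum(c * x for c, x in zip(cross(u, v), w))
print(triple(u, v, w) == dot)
```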

Formal definition

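The exterior algebra $\bigwedge(V)$ of a vector space $V$ over a field $K$ is defined as the quotient algebra of the tensor algebra $T(V)$, where

$$
T(V) = \bigoplus_{k=0}^{\infty} T^k V = K \oplus V \oplus (V \otimes V) \oplus (V \otimes V \otimes V) \oplus \cdots,
$$

by the two-sided ideal $I$ generated by all elements of the form $x \otimes x$ such that $x \in V$. Symbolically,

$$
\bigwedge(V) := T(V) / I.
$$

The exterior product $\wedge$ of two elements of $\bigwedge(V)$ is defined by

$$
\alpha \wedge \beta = \alpha \otimes \beta \pmod{I}.
$$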

Algebraic properties

Alternating product

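The exterior product is by construction alternating on elements of $V$, which means that $x \wedge x = 0$ for all $x \in V$, by the above construction. It follows that the product is also anticommutative on elements of $V$, for supposing that $x, y \in V$,

$$
0 = (x + y) \wedge (x + y) = x \wedge x + x \wedge y + y \wedge x + y \wedge y = x \wedge y + y \wedge x,
$$

hence

$$
x \wedge y = -(y \wedge x).
$$

More generally, if $\sigma$ is a permutation of the integers $[1, \dots, k]$, and $x_1$, $x_2$, ..., $x_k$ are elements of $V$, it follows that

$$
x_{\sigma(1)} \wedge x_{\sigma(2)} \wedge \cdots \wedge x_{\sigma(k)} = \operatorname{sgn}(\sigma)\, x_1 \wedge x_2 \wedge \cdots \wedge x_k,
$$

where $\operatorname{sgn}(\sigma)$ is the signature of the permutation $\sigma$.

In particular, if $x_i = x_j$ for some $i \neq j$, then the following generalization of the alternating property also holds:

$$
x_1 \wedge x_2 \wedge \cdots \wedge x_k = 0.
$$

Together with the distributive property of the exterior product, one further generalization is that a necessary and sufficient condition for $\{x_1, x_2, \dots, x_k\}$ to be a linearly dependent set of vectors is that

$$
x_1 \wedge x_2 \wedge \cdots \wedge x_k = 0.
$$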

Exterior power

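The kth exterior power of $V$, denoted $\bigwedge^k(V)$, is the vector subspace of $\bigwedge(V)$ spanned by elements of the form

$$
x_1 \wedge x_2 \wedge \cdots \wedge x_k, \quad x_i \in V, \ i = 1, 2, \dots, k.
$$

If $\alpha \in \bigwedge^k(V)$, then $\alpha$ is said to be a k-vector. If, furthermore, $\alpha$ can be expressed as an exterior product of $k$ elements of $V$, then $\alpha$ is said to be decomposable (or simple, by some authors; or a blade, by others). Although decomposable $k$-vectors span $\bigwedge^k(V)$, not every element of $\bigwedge^k(V)$ is decomposable. For example, given $\mathbf{R}^4$ with a basis $\{e_1, e_2, e_3, e_4\}$, the following 2-vector is not decomposable:

$$
\alpha = e_1 \wedge e_2 + e_3 \wedge e_4.
$$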

Basis and dimension

If the dimension of $V$ is $n$ and $\{e_1, \dots, e_n\}$ is a basis for $V$, then the set

$$
\{\, e_{i_1} \wedge e_{i_2} \wedge \cdots \wedge e_{i_k} \mid 1 \le i_1 < i_2 < \cdots < i_k \le n \,\}
$$

is a basis for $\bigwedge^k(V)$. The reason is the following: given any exterior product of the form

$$
v_1 \wedge \cdots \wedge v_k,
$$

every vector $v_j$ can be written as a linear combination of the basis vectors $e_i$; using the bilinearity of the exterior product, this can be expanded to a linear combination of exterior products of those basis vectors. Any exterior product in which the same basis vector appears more than once is zero; any exterior product in which the basis vectors do not appear in the proper order can be reordered, changing the sign whenever two basis vectors change places. In general, the resulting coefficients of the basis k-vectors can be computed as the minors of the matrix that describes the vectors $v_j$ in terms of the basis $e_i$.

By counting the basis elements, the dimension of $\bigwedge^k(V)$ is equal to a binomial coefficient:

$$
\dim \bigwedge\nolimits^k(V) = \binom{n}{k},
$$

where $n$ is the dimension of the vectors, and $k$ is the number of vectors in the product. The binomial coefficient produces the correct result, even for exceptional cases; in particular, $\bigwedge^k(V) = \{0\}$ for $k > n$.

Any element of the exterior algebra can be written as a sum of k-vectors. Hence, as a vector space the exterior algebra is a direct sum

$$
\bigwedge(V) = \bigwedge\nolimits^0(V) \oplus \bigwedge\nolimits^1(V) \oplus \bigwedge\nolimits^2(V) \oplus \cdots \oplus \bigwedge\nolimits^n(V)
$$

(where, by convention, $\bigwedge^0(V) = K$, the field underlying $V$, and $\bigwedge^1(V) = V$), and therefore its dimension is equal to the sum of the binomial coefficients, which is $2^n$.
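The expansion argument and the dimension count can be sketched in Python. This is a minimal illustration under an assumed representation: a multivector is stored as a dictionary from strictly increasing index tuples (basis k-vectors) to coefficients. The names `basis_blades`, `sort_sign`, `wedge` and `vector` are hypothetical helpers introduced here.

```python
from itertools import combinations
from math import comb

def basis_blades(n, k):
    """Index tuples i1 < ... < ik labelling the basis of the k-th exterior power."""
    return list(combinations(range(1, n + 1), k))

def sort_sign(indices):
    """Sign of the permutation sorting `indices`, or 0 if an index repeats."""
    if len(set(indices)) < len(indices):
        return 0, ()
    inv = sum(1 for i in range(len(indices))
              for j in range(i + 1, len(indices)) if indices[i] > indices[j])
    return (-1 if inv % 2 else 1), tuple(sorted(indices))

def wedge(a, b):
    """Exterior product of two multivectors, expanded by bilinearity."""
    out = {}
    for ia, ca in a.items():
        for ib, cb in b.items():
            sign, idx = sort_sign(ia + ib)   # reorder, or drop repeated factors
            if sign:
                out[idx] = out.get(idx, 0) + sign * ca * cb
    return {idx: c for idx, c in out.items() if c != 0}

def vector(coords):
    """Multivector of degree 1 from a coordinate tuple."""
    return {(i + 1,): c for i, c in enumerate(coords) if c != 0}

n = 4
dims = [len(basis_blades(n, k)) for k in range(n + 1)]
print(dims, sum(dims) == 2 ** n)
print(wedge(vector((3, 1)), vector((1, 2))))   # coefficient is the 2×2 minor
```

The dictionary representation makes the reordering rule explicit: each product of basis vectors is sorted once, and the sign of the sorting permutation multiplies the coefficient.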

Rank of a k-vector

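If $\alpha \in \bigwedge^k(V)$, then it is possible to express $\alpha$ as a linear combination of decomposable k-vectors:

$$
\alpha = \alpha^{(1)} + \alpha^{(2)} + \cdots + \alpha^{(s)},
$$

where each $\alpha^{(i)}$ is decomposable, say

$$
\alpha^{(i)} = \alpha^{(i)}_1 \wedge \cdots \wedge \alpha^{(i)}_k, \quad i = 1, 2, \dots, s.
$$

The rank of the k-vector $\alpha$ is the minimal number of decomposable k-vectors in such an expansion of $\alpha$. This is similar to the notion of tensor rank.

Rank is particularly important in the study of 2-vectors (Sternberg 1964, §III.6) (Bryant et al. 1991). The rank of a 2-vector $\alpha$ can be identified with half the rank of the matrix of coefficients of $\alpha$ in a basis. Thus if $e_i$ is a basis for $V$, then $\alpha$ can be expressed uniquely as

$$
\alpha = \sum_{i,j} a_{ij}\, e_i \wedge e_j,
$$

where $a_{ij} = -a_{ji}$ (the matrix of coefficients is skew-symmetric). The rank of the matrix $a_{ij}$ is therefore even, and is twice the rank of the form $\alpha$.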

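In characteristic 0, the 2-vector $\alpha$ has rank $p$ if and only if

$$
\underbrace{\alpha \wedge \cdots \wedge \alpha}_{p} \neq 0 \quad \text{and} \quad \underbrace{\alpha \wedge \cdots \wedge \alpha}_{p+1} = 0.
$$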
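The wedge-power criterion for the rank of a 2-vector can be tried on small examples. The sketch below is an assumption-laden illustration: multivectors are dictionaries from increasing index tuples to integer coefficients, and `sort_sign` and `wedge` are hypothetical helper names. It checks that e1 ∧ e2 + e3 ∧ e4 has a nonzero square (so it is not decomposable), while a decomposable 2-vector squares to zero.

```python
def sort_sign(indices):
    """Sign of the permutation sorting `indices`, or 0 if an index repeats."""
    if len(set(indices)) < len(indices):
        return 0, ()
    inv = sum(1 for i in range(len(indices))
              for j in range(i + 1, len(indices)) if indices[i] > indices[j])
    return (-1 if inv % 2 else 1), tuple(sorted(indices))

def wedge(a, b):
    """Exterior product of multivectors stored as {index tuple: coefficient}."""
    out = {}
    for ia, ca in a.items():
        for ib, cb in b.items():
            sign, idx = sort_sign(ia + ib)
            if sign:
                out[idx] = out.get(idx, 0) + sign * ca * cb
    return {idx: c for idx, c in out.items() if c != 0}

# alpha = e1∧e2 + e3∧e4 in the exterior algebra of a 4-dimensional space
alpha = {(1, 2): 1, (3, 4): 1}
alpha2 = wedge(alpha, alpha)        # nonzero, so the rank is at least 2
alpha3 = wedge(alpha2, alpha)       # zero, since there are only four basis vectors

# beta = (e1 + e2) ∧ e3 is decomposable, so beta ∧ beta vanishes (rank 1)
beta = wedge({(1,): 1, (2,): 1}, {(3,): 1})
beta2 = wedge(beta, beta)

print(alpha2, alpha3, beta2)
```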

Graded structure

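The exterior product of a k-vector with a p-vector is a $(k + p)$-vector, once again invoking bilinearity. As a consequence, the direct sum decomposition of the preceding section

$$
\bigwedge(V) = \bigwedge\nolimits^0(V) \oplus \bigwedge\nolimits^1(V) \oplus \bigwedge\nolimits^2(V) \oplus \cdots \oplus \bigwedge\nolimits^n(V)
$$

gives the exterior algebra the additional structure of a graded algebra, that is

$$
\bigwedge\nolimits^k(V) \wedge \bigwedge\nolimits^p(V) \subset \bigwedge\nolimits^{k+p}(V).
$$

Moreover, if K is the base field, we have

$$
\bigwedge\nolimits^0(V) = K \quad \text{and} \quad \bigwedge\nolimits^1(V) = V.
$$

The exterior product is graded anticommutative, meaning that if $\alpha \in \bigwedge^k(V)$ and $\beta \in \bigwedge^p(V)$, then

$$
\alpha \wedge \beta = (-1)^{kp} \beta \wedge \alpha.
$$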

In addition to studying the graded structure on the exterior algebra, Bourbaki (1989) studies additional graded structures on exterior algebras, such as those on the exterior algebra of a graded module (a module that already carries its own gradation).

Universal property

Let V be a vector space over the field K. Informally, multiplication in $\bigwedge(V)$ is performed by manipulating symbols and imposing a distributive law, an associative law, and using the identity $v \wedge v = 0$ for v ∈ V. Formally, $\bigwedge(V)$ is the "most general" algebra in which these rules hold for the multiplication, in the sense that any unital associative K-algebra containing V with alternating multiplication on V must contain a homomorphic image of $\bigwedge(V)$. In other words, the exterior algebra has the following universal property:

Given any unital associative K-algebra A and any K-linear map $j : V \to A$ such that $j(v) j(v) = 0$ for every v in V, there exists precisely one unital algebra homomorphism $f : \bigwedge(V) \to A$ such that j(v) = f(i(v)) for all v in V (here i is the natural inclusion of V in $\bigwedge(V)$, see above).

To construct the most general algebra that contains V and whose multiplication is alternating on V, it is natural to start with the most general associative algebra that contains V, the tensor algebra T(V), and then enforce the alternating property by taking a suitable quotient. We thus take the two-sided ideal I in T(V) generated by all elements of the form v ⊗ v for v in V, and define $\bigwedge(V)$ as the quotient

$$
\bigwedge(V) = T(V) / I
$$

(and use ∧ as the symbol for multiplication in $\bigwedge(V)$). It is then straightforward to show that $\bigwedge(V)$ contains V and satisfies the above universal property.

As a consequence of this construction, the operation of assigning to a vector space V its exterior algebra $\bigwedge(V)$ is a functor from the category of vector spaces to the category of algebras.

Rather than defining $\bigwedge(V)$ first and then identifying the exterior powers $\bigwedge^k(V)$ as certain subspaces, one may alternatively define the spaces $\bigwedge^k(V)$ first and then combine them to form the algebra $\bigwedge(V)$. This approach is often used in differential geometry and is described in the next section.

Generalizations

Given a commutative ring $R$ and an $R$-module $M$, we can define the exterior algebra $\bigwedge(M)$ just as above, as a suitable quotient of the tensor algebra $T(M)$. It will satisfy the analogous universal property. Many of the properties of $\bigwedge(M)$ also require that $M$ be a projective module. Where finite dimensionality is used, the properties further require that $M$ be finitely generated and projective. Generalizations to the most common situations can be found in Bourbaki (1989).

Exterior algebras of vector bundles are frequently considered in geometry and topology. There are no essential differences between the algebraic properties of the exterior algebra of finite-dimensional vector bundles and those of the exterior algebra of finitely generated projective modules, by the Serre–Swan theorem. More general exterior algebras can be defined for sheaves of modules.

Alternating tensor algebra

For a field $K$ of characteristic not 2, the exterior algebra of a vector space $V$ over $K$ can be canonically identified with the vector subspace of $T(V)$ that consists of antisymmetric tensors. For characteristic 0 (or higher than $\dim V$), the vector space of antisymmetric tensors of degree $k$ is transversal to the ideal $I$ and is hence a good choice to represent the quotient. For nonzero characteristic, however, the vector space of antisymmetric tensors of degree $k$ may fail to be transversal to the ideal (in fact, for $k \geq \operatorname{char} K$, it is contained in $I$). Nevertheless, transversal or not, a product can be defined on this space such that the resulting algebra is isomorphic to the exterior algebra. In the first case the natural choice for the product is just the quotient product (using the available projection). In the second case, this product must be slightly modified as given below (following Arnold's setting), in such a way that the algebra stays isomorphic to the exterior algebra, i.e. the quotient of $T(V)$ by the ideal $I$ generated by elements of the form $x \otimes x$. For characteristic 0 (or higher than the dimension of the vector space), either definition of the product could be used, as the two algebras are isomorphic (see V. I. Arnold or Kobayashi–Nomizu).

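Let $T^r(V)$ be the space of homogeneous tensors of degree $r$. This is spanned by decomposable tensors

$$
v_1 \otimes \cdots \otimes v_r, \quad v_i \in V.
$$

The antisymmetrization (or sometimes the skew-symmetrization) of a decomposable tensor is defined by

$$
\mathcal{A}^{(r)}(v_1 \otimes \cdots \otimes v_r) = \sum_{\sigma \in \mathfrak{S}_r} \operatorname{sgn}(\sigma)\, v_{\sigma(1)} \otimes \cdots \otimes v_{\sigma(r)}
$$

and, when $r! \neq 0$ (for a field of nonzero characteristic, $r!$ might be 0):

$$
\operatorname{Alt}^{(r)}(v_1 \otimes \cdots \otimes v_r) = \frac{1}{r!} \mathcal{A}^{(r)}(v_1 \otimes \cdots \otimes v_r),
$$

where the sum is taken over the symmetric group $\mathfrak{S}_r$ of permutations on the symbols $\{1, \dots, r\}$. This extends by linearity and homogeneity to an operation, also denoted by $\mathcal{A}$ and $\operatorname{Alt}$, on the full tensor algebra $T(V)$.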

Note that

$$
\mathcal{A}^{(r)} \mathcal{A}^{(r)} = r!\, \mathcal{A}^{(r)},
$$

so that, when defined, $\operatorname{Alt}^{(r)}$ is the projection for the exterior (quotient) algebra onto the r-homogeneous alternating tensor subspace. On the other hand, the image $\mathcal{A}(T(V))$ is always the alternating tensor graded subspace (not yet an algebra, as the product is not yet defined), denoted $A(V)$. This is a vector subspace of $T(V)$, and it inherits the structure of a graded vector space from that on $T(V)$. Moreover, the kernel of $\mathcal{A}^{(r)}$ is precisely $I^{(r)}$, the homogeneous subset of the ideal $I$; equivalently, the kernel of $\mathcal{A}$ is $I$. When $\operatorname{Alt}$ is defined, $A(V)$ carries an associative graded product $\widehat{\otimes}$ defined by (the same as the wedge product)

$$
t \wedge s = t \mathbin{\widehat{\otimes}} s = \operatorname{Alt}(t \otimes s).
$$

Assuming $K$ has characteristic 0, as $A(V)$ is a supplement of $I$ in $T(V)$, with the above given product, there is a canonical isomorphism

$$
A(V) \cong \bigwedge(V).
$$

When the characteristic of the field is nonzero, $\mathcal{A}$ will do what $\operatorname{Alt}$ did before, but the product cannot be defined as above. In such a case, the isomorphism $A(V) \cong \bigwedge(V)$ still holds, in spite of $A(V)$ not being a supplement of the ideal $I$, but then the product should be modified as given below ($\dot{\wedge}$ product, Arnold setting).

Finally, we always get $A(V)$ isomorphic with $\bigwedge(V)$, but the product could (or should) be chosen in two ways (or only one). Actually, the product could be chosen in many ways, rescaling it on homogeneous spaces as $c(r + p) / (c(r) c(p))$ for an arbitrary sequence $c(r)$ in the field, as long as the division makes sense (this is such that the redefined product is also associative, i.e. defines an algebra on $A(V)$). Also note that the interior product definition should be changed accordingly, in order to keep its skew derivation property.

Index notation

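Suppose that V has finite dimension n, and that a basis $e_1, \dots, e_n$ of V is given. Then any alternating tensor $t \in A^r(V) \subset T^r(V)$ can be written in index notation with the Einstein summation convention as

$$
t = t^{i_1 i_2 \cdots i_r}\, e_{i_1} \otimes e_{i_2} \otimes \cdots \otimes e_{i_r},
$$

where $t^{i_1 \cdots i_r}$ is completely antisymmetric in its indices.

The exterior product of two alternating tensors t and s of ranks r and p is given by

$$
t \mathbin{\widehat{\otimes}} s = \frac{1}{(r + p)!} \sum_{\sigma \in \mathfrak{S}_{r+p}} \operatorname{sgn}(\sigma)\, t^{i_{\sigma(1)} \cdots i_{\sigma(r)}} s^{i_{\sigma(r+1)} \cdots i_{\sigma(r+p)}}\, e_{i_1} \otimes e_{i_2} \otimes \cdots \otimes e_{i_{r+p}}.
$$

The components of this tensor are precisely the skew part of the components of the tensor product t ⊗ s, denoted by square brackets on the indices:

$$
(t \mathbin{\widehat{\otimes}} s)^{i_1 \cdots i_{r+p}} = t^{[i_1 \cdots i_r} s^{i_{r+1} \cdots i_{r+p}]}.
$$

The interior product may also be described in index notation as follows. Let $t = t^{i_0 i_1 \cdots i_{r-1}}$ be an antisymmetric tensor of rank $r$. Then, for $\alpha \in V^*$, $\iota_\alpha t$ is an alternating tensor of rank $r - 1$, given by

$$
(\iota_\alpha t)^{i_1 \cdots i_{r-1}} = r \sum_{j=1}^{n} \alpha_j\, t^{j i_1 \cdots i_{r-1}},
$$

where n is the dimension of V.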

Duality

Alternating operators

Given two vector spaces V and X and a natural number k, an alternating operator from $V^k$ to X is a multilinear map

$$
f : V^k \to X
$$

such that whenever $v_1, \dots, v_k$ are linearly dependent vectors in V, then

$$
f(v_1, \dots, v_k) = 0.
$$

The map

$$
w : V^k \to \bigwedge\nolimits^k(V),
$$

which associates to $k$ vectors from $V$ their exterior product, i.e. their corresponding $k$-vector, is also alternating. In fact, this map is the "most general" alternating operator defined on $V^k$: given any other alternating operator $f : V^k \to X$, there exists a unique linear map $\phi : \bigwedge^k(V) \to X$ with $f = \phi \circ w$. This universal property characterizes the space of alternating operators on $V^k$ and can serve as its definition.

Alternating multilinear forms

See also: Alternating multilinear map

The above discussion specializes to the case when $X = K$, the base field. In this case an alternating multilinear function

$$
f : V^k \to K
$$

is called an alternating multilinear form. The set of all alternating multilinear forms is a vector space, as the sum of two such maps, or the product of such a map with a scalar, is again alternating. By the universal property of the exterior power, the space of alternating forms of degree $k$ on $V$ is naturally isomorphic with the dual vector space $\left(\bigwedge^k(V)\right)^*$. If $V$ is finite-dimensional, then the latter is naturally isomorphic to $\bigwedge^k(V^*)$. In particular, if $V$ is $n$-dimensional, the dimension of the space of alternating maps from $V^k$ to $K$ is the binomial coefficient $\binom{n}{k}$.

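Under such identification, the exterior product takes a concrete form: it produces a new anti-symmetric map from two given ones. Suppose $\omega : V^k \to K$ and $\eta : V^m \to K$ are two anti-symmetric maps. As in the case of tensor products of multilinear maps, the number of variables of their exterior product is the sum of the numbers of their variables. Depending on the choice of identification of elements of exterior power with multilinear forms, the exterior product is defined as

$$
\omega \wedge \eta = \operatorname{Alt}(\omega \otimes \eta)
$$

or as

$$
\omega \mathbin{\dot{\wedge}} \eta = \frac{(k + m)!}{k!\, m!} \operatorname{Alt}(\omega \otimes \eta),
$$

where, if the characteristic of the base field $K$ is 0, the alternation Alt of a multilinear map is defined to be the average of the sign-adjusted values over all the permutations of its variables:

$$
\operatorname{Alt}(\omega)(x_1, \dots, x_k) = \frac{1}{k!} \sum_{\sigma \in S_k} \operatorname{sgn}(\sigma)\, \omega(x_{\sigma(1)}, \dots, x_{\sigma(k)}).
$$

When the field $K$ has finite characteristic, an equivalent version of the second expression without any factorials or any constants is well-defined:

$$
\omega \mathbin{\dot{\wedge}} \eta\,(x_1, \dots, x_{k+m}) = \sum_{\sigma \in \mathrm{Sh}_{k,m}} \operatorname{sgn}(\sigma)\, \omega(x_{\sigma(1)}, \dots, x_{\sigma(k)})\, \eta(x_{\sigma(k+1)}, \dots, x_{\sigma(k+m)}),
$$

where $\mathrm{Sh}_{k,m} \subset S_{k+m}$ is the subset of (k, m) shuffles: permutations σ of the set {1, 2, ..., k + m} such that σ(1) < σ(2) < ⋯ < σ(k), and σ(k + 1) < σ(k + 2) < ⋯ < σ(k + m). As this might look very specific and fine tuned, an equivalent raw version is to sum in the above formula over permutations in left cosets of $S_{k+m} / (S_k \times S_m)$.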
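The agreement between the shuffle formula and the factorial-normalized formula can be checked numerically over the rationals. The following Python sketch assumes forms are given as Python callables on coordinate tuples; `perm_sign`, `shuffles`, `wedge_shuffle` and `wedge_factorial` are hypothetical names, and permutations are written 0-based.

```python
from fractions import Fraction
from itertools import combinations, permutations
from math import comb, factorial

def perm_sign(p):
    """Sign of a permutation given as a tuple of 0-based images."""
    inv = sum(1 for i in range(len(p))
              for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inv % 2 else 1

def shuffles(k, m):
    """All (k, m)-shuffles: increasing on the first k and on the last m slots."""
    n = k + m
    for first in combinations(range(n), k):
        rest = tuple(i for i in range(n) if i not in first)
        yield first + rest

def _term(omega, k, eta, s, xs):
    return (perm_sign(s) * omega(*[xs[i] for i in s[:k]])
            * eta(*[xs[i] for i in s[k:]]))

def wedge_shuffle(omega, k, eta, m):
    """Exterior product via the sum over (k, m)-shuffles (no factorials)."""
    return lambda *xs: sum(_term(omega, k, eta, s, xs) for s in shuffles(k, m))

def wedge_factorial(omega, k, eta, m):
    """(k+m)!/(k! m!) Alt(omega ⊗ eta), i.e. the full sum divided by k! m!."""
    def form(*xs):
        total = sum(_term(omega, k, eta, s, xs)
                    for s in permutations(range(k + m)))
        return Fraction(total, factorial(k) * factorial(m))
    return form

omega = lambda x: x[2]                        # sample 1-form (assumed)
eta = lambda x, y: x[0] * y[1] - x[1] * y[0]  # sample alternating 2-form
xs = [(1, 2, 3), (0, 1, 4), (2, 0, 1)]

print(len(list(shuffles(2, 3))) == comb(5, 2))
print(wedge_shuffle(omega, 1, eta, 2)(*xs) == wedge_factorial(omega, 1, eta, 2)(*xs))
```

The shuffle version performs only integer additions and multiplications, which is why it remains meaningful when division by factorials is unavailable.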

Interior product

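See also: Interior product

Suppose that $V$ is finite-dimensional. If $V^*$ denotes the dual space to the vector space $V$, then for each $\alpha \in V^*$, it is possible to define an antiderivation on the algebra $\bigwedge(V)$,

$$
\iota_\alpha : \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^{k-1}(V).
$$

This derivation is called the interior product with $\alpha$, or sometimes the insertion operator, or contraction by $\alpha$.

Suppose that $w \in \bigwedge^k(V)$. Then $w$ is a multilinear mapping of $V^*$ to $K$, so it is defined by its values on the k-fold Cartesian product $V^* \times V^* \times \cdots \times V^*$. If $u_1, u_2, \dots, u_{k-1}$ are $k - 1$ elements of $V^*$, then define

$$
(\iota_\alpha w)(u_1, u_2, \dots, u_{k-1}) = w(\alpha, u_1, u_2, \dots, u_{k-1}).
$$

Additionally, let $\iota_\alpha f = 0$ whenever $f$ is a pure scalar (i.e., belonging to $\bigwedge^0(V)$).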

Axiomatic characterization and properties

The interior product satisfies the following properties:

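- For each $k$ and each $\alpha \in V^*$ (where by convention $\bigwedge^{-1}(V) = \{0\}$), $\iota_\alpha : \bigwedge^k(V) \to \bigwedge^{k-1}(V)$.

- If $v$ is an element of $V$ ($= \bigwedge^1(V)$), then $\iota_\alpha v = \alpha(v)$ is the dual pairing between elements of $V$ and elements of $V^*$.

- For each $\alpha \in V^*$, $\iota_\alpha$ is a graded derivation of degree −1: $\iota_\alpha(a \wedge b) = (\iota_\alpha a) \wedge b + (-1)^{\deg a} a \wedge (\iota_\alpha b)$.

These three properties are sufficient to characterize the interior product as well as define it in the general infinite-dimensional case.

Further properties of the interior product include:

$$
\iota_\alpha \circ \iota_\alpha = 0, \qquad \iota_\alpha \circ \iota_\beta = -\iota_\beta \circ \iota_\alpha.
$$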
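The graded derivation rule and the vanishing of a repeated contraction can be checked on basis expansions. The Python sketch below assumes multivectors are dictionaries from increasing index tuples to coefficients and covectors are dictionaries from indices to coefficients. The helper names are hypothetical, and the formula used in `interior` (alternating signs when removing one factor at a time) is the one obtained from the three characterizing properties.

```python
def sort_sign(indices):
    """Sign of the permutation sorting `indices`, or 0 if an index repeats."""
    if len(set(indices)) < len(indices):
        return 0, ()
    inv = sum(1 for i in range(len(indices))
              for j in range(i + 1, len(indices)) if indices[i] > indices[j])
    return (-1 if inv % 2 else 1), tuple(sorted(indices))

def wedge(a, b):
    """Exterior product of multivectors stored as {index tuple: coefficient}."""
    out = {}
    for ia, ca in a.items():
        for ib, cb in b.items():
            sign, idx = sort_sign(ia + ib)
            if sign:
                out[idx] = out.get(idx, 0) + sign * ca * cb
    return {idx: c for idx, c in out.items() if c != 0}

def interior(alpha, a):
    """Contraction of the multivector `a` by the covector `alpha`."""
    out = {}
    for idx, c in a.items():
        for pos, i in enumerate(idx):
            coeff = alpha.get(i, 0)
            if coeff:
                rest = idx[:pos] + idx[pos + 1:]
                out[rest] = out.get(rest, 0) + (-1) ** pos * coeff * c
    return {idx: c for idx, c in out.items() if c != 0}

def add(a, b, scale=1):
    """Return a + scale * b, dropping zero coefficients."""
    out = dict(a)
    for idx, c in b.items():
        out[idx] = out.get(idx, 0) + scale * c
    return {idx: c for idx, c in out.items() if c != 0}

alpha = {1: 1, 2: 2, 3: -1, 4: 3}     # sample covector (assumed)
a = {(1, 2): 1, (1, 3): 2}            # homogeneous of degree 2
b = {(2,): 1, (3,): 1, (4,): -1}      # homogeneous of degree 1
deg_a = 2

lhs = interior(alpha, wedge(a, b))
rhs = add(wedge(interior(alpha, a), b),
          wedge(a, interior(alpha, b)), (-1) ** deg_a)
print(lhs == rhs)
print(interior(alpha, interior(alpha, wedge(a, b))) == {})
```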

Hodge duality

Main article: Hodge star operator

Suppose that $V$ has finite dimension $n$. Then the interior product induces a canonical isomorphism of vector spaces

$$
\bigwedge\nolimits^k(V^*) \otimes \bigwedge\nolimits^n(V) \to \bigwedge\nolimits^{n-k}(V)
$$

by the recursive definition

$$
\iota_{\alpha \wedge \beta} = \iota_\beta \circ \iota_\alpha.
$$

In the geometrical setting, a non-zero element of the top exterior power $\bigwedge^n(V)$ (which is a one-dimensional vector space) is sometimes called a volume form (or orientation form, although this term may sometimes lead to ambiguity). The name orientation form comes from the fact that a choice of preferred top element determines an orientation of the whole exterior algebra, since it is tantamount to fixing an ordered basis of the vector space. Relative to the preferred volume form $\sigma$, the isomorphism is given explicitly by

$$
\bigwedge\nolimits^k(V^*) \to \bigwedge\nolimits^{n-k}(V) : \alpha \mapsto \iota_\alpha \sigma.
$$

If, in addition to a volume form, the vector space V is equipped with an inner product identifying $V$ with $V^*$, then the resulting isomorphism is called the Hodge star operator, which maps an element to its Hodge dual:

$$
\star : \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^{n-k}(V).
$$

The composition of $\star$ with itself maps $\bigwedge^k(V) \to \bigwedge^k(V)$ and is always a scalar multiple of the identity map. In most applications, the volume form is compatible with the inner product in the sense that it is an exterior product of an orthonormal basis of $V$. In this case,

$$
\star \circ \star : \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^k(V) = (-1)^{k(n-k) + q}\, \mathrm{id},
$$

where id is the identity mapping, and the inner product has metric signature (p, q), that is, p pluses and q minuses.

Inner product

For a finite-dimensional space, an inner product (or a pseudo-Euclidean inner product) on $V$ defines an isomorphism of $V$ with $V^*$, and so also an isomorphism of $\bigwedge^k V$ with $\left(\bigwedge^k V\right)^*$. The pairing between these two spaces also takes the form of an inner product. On decomposable $k$-vectors,

$$
\langle v_1 \wedge \cdots \wedge v_k,\, w_1 \wedge \cdots \wedge w_k \rangle = \det\left( \langle v_i, w_j \rangle \right),
$$

the determinant of the matrix of inner products. In the special case $v_i = w_i$, the inner product is the square norm of the k-vector, given by the determinant of the Gramian matrix $(\langle v_i, v_j \rangle)$. This is then extended bilinearly (or sesquilinearly in the complex case) to a non-degenerate inner product on $\bigwedge^k V$. If $e_i$, $i = 1, 2, \dots, n$, form an orthonormal basis of $V$, then the vectors of the form

$$
e_{i_1} \wedge \cdots \wedge e_{i_k}, \quad i_1 < \cdots < i_k,
$$

constitute an orthonormal basis for $\bigwedge^k(V)$, a statement equivalent to the Cauchy–Binet formula.
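The link with the Cauchy–Binet formula can be made concrete: the Gram-type determinant must agree with the sum of products of coordinates on the orthonormal basis of k-vectors, and those coordinates are the k × k minors. The Python sketch below assumes the standard Euclidean inner product on coordinate tuples; `det`, `inner_blades` and `minors` are hypothetical helper names.

```python
from itertools import combinations, permutations

def det(m):
    """Determinant by the Leibniz formula (fine for small matrices)."""
    n = len(m)
    total = 0
    for p in permutations(range(n)):
        inv = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
        term = -1 if inv % 2 else 1
        for r in range(n):
            term *= m[r][p[r]]
        total += term
    return total

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def inner_blades(vs, ws):
    """<v1∧...∧vk, w1∧...∧wk> as the determinant of the matrix (<vi, wj>)."""
    return det([[dot(v, w) for w in ws] for v in vs])

def minors(vs):
    """Coordinates of v1∧...∧vk on the basis e_{i1}∧...∧e_{ik}, i1 < ... < ik."""
    k, n = len(vs), len(vs[0])
    return {idx: det([[v[i] for i in idx] for v in vs])
            for idx in combinations(range(n), k)}

vs = [(1, 2, 0), (0, 1, 3)]   # sample vectors (assumed)
ws = [(2, 0, 1), (1, 1, 1)]

gram = inner_blades(vs, ws)
coordinatewise = sum(c * minors(ws)[idx] for idx, c in minors(vs).items())
print(gram, coordinatewise, gram == coordinatewise)
```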

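With respect to the inner product, exterior multiplication and the interior product are mutually adjoint. Specifically, for $v \in \bigwedge^{k-1}(V)$, $w \in \bigwedge^k(V)$, and $x \in V$,

$$
\langle x \wedge v, w \rangle = \langle v, \iota_{x^\flat} w \rangle,
$$

where $x^\flat \in V^*$ is the musical isomorphism, the linear functional defined by

$$
x^\flat(y) = \langle x, y \rangle
$$

for all $y \in V$. This property completely characterizes the inner product on the exterior algebra.

Indeed, more generally for $v \in \bigwedge^{k-l}(V)$, $w \in \bigwedge^k(V)$, and $x \in \bigwedge^l(V)$, iteration of the above adjoint properties gives

$$
\langle x \wedge v, w \rangle = \langle v, \iota_{x^\flat} w \rangle,
$$

where now $x^\flat \in \bigwedge^l(V^*) \simeq \left(\bigwedge^l(V)\right)^*$ is the dual $l$-vector defined by

$$
x^\flat(y) = \langle x, y \rangle
$$

for all $y \in \bigwedge^l(V)$.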

Bialgebra structure

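There is a correspondence between the graded dual of the graded algebra $\bigwedge(V)$ and alternating multilinear forms on $V$. The exterior algebra (as well as the symmetric algebra) inherits a bialgebra structure, and, indeed, a Hopf algebra structure, from the tensor algebra. See the article on tensor algebras for a detailed treatment of the topic.

The exterior product of multilinear forms defined above is dual to a coproduct defined on $\bigwedge(V)$, giving the structure of a coalgebra. The coproduct is a linear function $\Delta : \bigwedge(V) \to \bigwedge(V) \otimes \bigwedge(V)$, which is given by

$$
\Delta(v) = 1 \otimes v + v \otimes 1
$$

on elements $v \in V$. The symbol $1$ stands for the unit element of the field $K$. Recall that $K \simeq \bigwedge^0(V) \subseteq \bigwedge(V)$, so that the above really does lie in $\bigwedge(V) \otimes \bigwedge(V)$. This definition of the coproduct is lifted to the full space $\bigwedge(V)$ by (linear) homomorphism. The correct form of this homomorphism is not what one might naively write, but has to be the one carefully defined in the coalgebra article. In this case, one obtains

$$
\Delta(v \wedge w) = 1 \otimes (v \wedge w) + v \otimes w - w \otimes v + (v \wedge w) \otimes 1.
$$

Expanding this out in detail, one obtains the following expression on decomposable elements:

$$
\Delta(x_1 \wedge \cdots \wedge x_k) = \sum_{p=0}^{k} \; \sum_{\sigma \in \mathrm{Sh}(p, k-p)} \operatorname{sgn}(\sigma)\, (x_{\sigma(1)} \wedge \cdots \wedge x_{\sigma(p)}) \otimes (x_{\sigma(p+1)} \wedge \cdots \wedge x_{\sigma(k)}),
$$

where the second summation is taken over all (p, k−p)-shuffles. By convention, one takes that Sh(k,0) and Sh(0,k) equal {id: {1, ..., k} → {1, ..., k}}. It is also convenient to take the pure wedge products $x_{\sigma(1)} \wedge \cdots \wedge x_{\sigma(p)}$ and $x_{\sigma(p+1)} \wedge \cdots \wedge x_{\sigma(k)}$ to equal 1 for p = 0 and p = k, respectively (the empty product in $\bigwedge(V)$). The shuffle follows directly from the first axiom of a co-algebra: the relative order of the elements $x_k$ is preserved in the riffle shuffle, which merely splits the ordered sequence into two ordered sequences, one on the left, and one on the right.

Observe that the coproduct preserves the grading of the algebra. Extending to the full space $\bigwedge(V)$, one has

$$
\Delta : \bigwedge\nolimits^k(V) \to \bigoplus_{p=0}^{k} \bigwedge\nolimits^p(V) \otimes \bigwedge\nolimits^{k-p}(V).
$$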
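The shuffle expansion of the coproduct on a decomposable element can be enumerated directly. The Python sketch below assumes the element is a product of distinct basis vectors labelled by a tuple of indices, and returns the terms as a dictionary keyed by (left, right) index tuples; `perm_sign` and `coproduct_blade` are hypothetical names.

```python
from itertools import combinations

def perm_sign(p):
    """Sign of a permutation given as a tuple of 0-based images."""
    inv = sum(1 for i in range(len(p))
              for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inv % 2 else 1

def coproduct_blade(idx):
    """Terms of Δ(x_1 ∧ ... ∧ x_k), with x_j labelled by idx[j].

    Returns {(left, right): sign}; the empty tuple stands for the unit 1.
    """
    k = len(idx)
    terms = {}
    for p in range(k + 1):
        # A (p, k-p)-shuffle is determined by which positions go to the left.
        for first in combinations(range(k), p):
            rest = tuple(i for i in range(k) if i not in first)
            left = tuple(idx[i] for i in first)
            right = tuple(idx[i] for i in rest)
            terms[(left, right)] = perm_sign(first + rest)
    return terms

for (left, right), sign in coproduct_blade((1, 2)).items():
    print(sign, left, right)

# Each term splits degree k as p + (k - p), and there are 2**k terms in total.
print(len(coproduct_blade((1, 2, 3))) == 2 ** 3)
```

For the index tuple (1, 2) the four terms reproduce the expansion of the coproduct of a product of two vectors, including the minus sign on the swapped term.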

The tensor symbol ⊗ used in this section should be understood with some caution: it is not the same tensor symbol as the one being used in the definition of the alternating product. Intuitively, it is perhaps easiest to think of it as just another, but different, tensor product: it is still (bi-)linear, as tensor products should be, but it is the product that is appropriate for the definition of a bialgebra, that is, for creating the object $\bigwedge(V) \otimes \bigwedge(V)$. Any lingering doubt can be shaken by pondering the equalities (1 ⊗ v) ∧ (1 ⊗ w) = 1 ⊗ (v ∧ w) and (v ⊗ 1) ∧ (1 ⊗ w) = v ⊗ w, which follow from the definition of the coalgebra, as opposed to naive manipulations involving the tensor and wedge symbols. This distinction is developed in greater detail in the article on tensor algebras. Here, there is much less of a problem, in that the alternating product ∧ clearly corresponds to multiplication in the exterior algebra, leaving the symbol ⊗ free for use in the definition of the bialgebra. In practice, this presents no particular problem, as long as one avoids the fatal trap of replacing alternating sums of ⊗ by the wedge symbol, with one exception. One can construct an alternating product from ⊗, with the understanding that it works in a different space. Immediately below, an example is given: the alternating product for the dual space can be given in terms of the coproduct. The construction of the bialgebra here parallels the construction in the tensor algebra article almost exactly, except for the need to correctly track the alternating signs for the exterior algebra.

In terms of the coproduct, the exterior product on the dual space is just the graded dual of the coproduct:

$$
(\alpha \wedge \beta)(x_1 \wedge \cdots \wedge x_k) = (\alpha \otimes \beta)\left( \Delta(x_1 \wedge \cdots \wedge x_k) \right),
$$

where the tensor product on the right-hand side is of multilinear maps (extended by zero on elements of incompatible homogeneous degree: more precisely, α ∧ β = ε ∘ (α ⊗ β) ∘ Δ, where $\varepsilon$ is the counit, as defined presently).

The counit is the homomorphism $\varepsilon : \bigwedge(V) \to K$ that returns the 0-graded component of its argument. The coproduct and counit, along with the exterior product, define the structure of a bialgebra on the exterior algebra.

With an antipode defined on homogeneous elements by $S(x) = (-1)^{\binom{\deg x + 1}{2}} x$, the exterior algebra is furthermore a Hopf algebra.

Functoriality

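Suppose that $V$ and $W$ are a pair of vector spaces and $f : V \to W$ is a linear map. Then, by the universal property, there exists a unique homomorphism of graded algebras

$$
\bigwedge(f) : \bigwedge(V) \to \bigwedge(W)
$$

such that

$$
\bigwedge(f)\big|_{\bigwedge^1(V)} = f : V = \bigwedge\nolimits^1(V) \to W = \bigwedge\nolimits^1(W).
$$

In particular, $\bigwedge(f)$ preserves homogeneous degree. The k-graded components of $\bigwedge(f)$ are given on decomposable elements by

$$
\bigwedge(f)(x_1 \wedge \cdots \wedge x_k) = f(x_1) \wedge \cdots \wedge f(x_k).
$$

Let

$$
\bigwedge\nolimits^k(f) = \bigwedge(f)\big|_{\bigwedge^k(V)} : \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^k(W).
$$

The components of the transformation $\bigwedge^k(f)$ relative to bases of $V$ and $W$ form the matrix of $k \times k$ minors of $f$. In particular, if $V = W$ and $V$ is of finite dimension $n$, then $\bigwedge^n(f)$ is a mapping of the one-dimensional vector space $\bigwedge^n(V)$ to itself, and is therefore given by a scalar: the determinant of $f$.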
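Functoriality can be observed on matrices: the matrix of k × k minors of a product is the product of the matrices of minors, and the top-degree case reduces to the determinant. The Python sketch below assumes linear maps are given as integer matrices (lists of rows) acting on column vectors; `det`, `compound` and `matmul` are hypothetical helper names.

```python
from itertools import combinations, permutations

def det(m):
    """Determinant by the Leibniz formula (fine for small matrices)."""
    n = len(m)
    total = 0
    for p in permutations(range(n)):
        inv = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
        term = -1 if inv % 2 else 1
        for r in range(n):
            term *= m[r][p[r]]
        total += term
    return total

def compound(a, k):
    """Matrix of k×k minors of `a`, rows and columns indexed by k-subsets."""
    rows = list(combinations(range(len(a)), k))
    cols = list(combinations(range(len(a[0])), k))
    return [[det([[a[i][j] for j in J] for i in I]) for J in cols]
            for I in rows]

def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

f = [[1, 2, 0], [0, 1, 3], [2, 0, 1]]   # sample matrices (assumed)
g = [[2, 0, 1], [1, 1, 1], [0, 3, 1]]

# The k-th exterior power respects composition.
print(compound(matmul(f, g), 2) == matmul(compound(f, 2), compound(g, 2)))

# On the top exterior power the map is multiplication by the determinant.
print(compound(f, 3) == [[det(f)]])
```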

Exactness

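If $0 \to U \to V \to W \to 0$ is a short exact sequence of vector spaces, then

$$
0 \to \bigwedge\nolimits^1(U) \wedge \bigwedge(V) \to \bigwedge(V) \to \bigwedge(W) \to 0
$$

is an exact sequence of graded vector spaces, as is

$$
0 \to \bigwedge(U) \to \bigwedge(V).
$$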

Direct sums

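In particular, the exterior algebra of a direct sum is isomorphic to the tensor product of the exterior algebras:

$$
\bigwedge(V \oplus W) \cong \bigwedge(V) \otimes \bigwedge(W).
$$

This is a graded isomorphism; i.e.,

$$
\bigwedge\nolimits^k(V \oplus W) \cong \bigoplus_{p + q = k} \bigwedge\nolimits^p(V) \otimes \bigwedge\nolimits^q(W).
$$

In greater generality, for a short exact sequence of vector spaces $0 \to U \xrightarrow{f} V \xrightarrow{g} W \to 0$, there is a natural filtration

$$
0 = F^0 \subseteq F^1 \subseteq \cdots \subseteq F^k \subseteq F^{k+1} = \bigwedge\nolimits^k(V),
$$

where $F^p$ for $p \geq 1$ is spanned by elements of the form $u_1 \wedge \cdots \wedge u_{k+1-p} \wedge v_1 \wedge \cdots \wedge v_{p-1}$ for $u_i \in U$ and $v_i \in V$. The corresponding quotients admit a natural isomorphism

$$
F^{p+1} / F^p \cong \bigwedge\nolimits^{k-p}(U) \otimes \bigwedge\nolimits^p(W)
$$

given by

$$
u_1 \wedge \cdots \wedge u_{k-p} \wedge v_1 \wedge \cdots \wedge v_p \mapsto u_1 \wedge \cdots \wedge u_{k-p} \otimes g(v_1) \wedge \cdots \wedge g(v_p).
$$

In particular, if U is 1-dimensional then

$$
0 \to U \otimes \bigwedge\nolimits^{k-1}(W) \to \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^k(W) \to 0
$$

is exact, and if W is 1-dimensional then

$$
0 \to \bigwedge\nolimits^k(U) \to \bigwedge\nolimits^k(V) \to \bigwedge\nolimits^{k-1}(U) \otimes W \to 0
$$

is exact.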

Applications

Oriented volume in affine space

The natural setting for (oriented) $k$-dimensional volume and exterior algebra is affine space. This is also the intimate connection between exterior algebra and differential forms, as to integrate we need a 'differential' object to measure infinitesimal volume. If $\mathbb{A}$ is an affine space over the vector space $V$, and a (simplex) collection of ordered $k + 1$ points $A_0, A_1, \dots, A_k$ is given, we can define its oriented $k$-dimensional volume as the exterior product of vectors $A_0A_1 \wedge A_0A_2 \wedge \cdots \wedge A_0A_k$ (using concatenation $PQ$ to mean the displacement vector from point $P$ to $Q$); if the order of the points is changed, the oriented volume changes by a sign, according to the parity of the permutation. In $n$-dimensional space, the volume of any $n$-dimensional simplex is a scalar multiple of any other.

The sum of the $(k - 1)$-dimensional oriented areas of the boundary simplexes of a $k$-dimensional simplex is zero, as for the sum of vectors around a triangle or the oriented triangles bounding the tetrahedron in the previous section.

The vector space structure on $\bigwedge(V)$ generalises addition of vectors in $V$: we have $(u_1 + u_2) \wedge v = u_1 \wedge v + u_2 \wedge v$, and similarly a k-blade $v_1 \wedge \cdots \wedge v_k$ is linear in each factor.

Linear algebra

In applications to linear algebra, the exterior product provides an abstract algebraic manner for describing the determinant and the minors of a matrix. For instance, it is well known that the determinant of a square matrix is equal to the volume of the parallelotope whose sides are the columns of the matrix (with a sign to track orientation). This suggests that the determinant can be defined in terms of the exterior product of the column vectors. Likewise, the k × k minors of a matrix can be defined by looking at the exterior products of column vectors chosen k at a time. These ideas can be extended not just to matrices but to linear transformations as well: the determinant of a linear transformation is the factor by which it scales the oriented volume of any given reference parallelotope. So the determinant of a linear transformation can be defined in terms of what the transformation does to the top exterior power. The action of a transformation on the lesser exterior powers gives a basis-independent way to talk about the minors of the transformation.

Physics

Main article: Electromagnetic tensor

In physics, many quantities are naturally represented by alternating operators. For example, if the motion of a charged particle is described by velocity and acceleration vectors in four-dimensional spacetime, then normalization of the velocity vector requires that the electromagnetic force must be an alternating operator on the velocity. Its six degrees of freedom are identified with the electric and magnetic fields.

Electromagnetic field

In Einstein's theories of relativity, the electromagnetic field is generally given as a differential 2-form $F = dA$ in 4-space or as the equivalent alternating tensor field $F_{ij} = A_{[i,j]} = A_{[i;j]}$, the electromagnetic tensor. Then $dF = ddA = 0$ or the equivalent Bianchi identity $F_{[ij,k]} = F_{[ij;k]} = 0$. None of this requires a metric.

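Adding the Lorentz metric and an orientation provides the Hodge star operator $\star$ and thus makes it possible to define $J = {\star}d{\star}F$ or the equivalent tensor divergence $J^i = F^{ij}{}_{,j} = F^{ij}{}_{;j}$, where $F^{ij} = g^{ik}g^{jl}F_{kl}$.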

Linear geometry

The decomposable k-vectors have geometric interpretations: the bivector $u \wedge v$ represents the plane spanned by the vectors, "weighted" with a number, given by the area of the oriented parallelogram with sides $u$ and $v$. Analogously, the 3-vector $u \wedge v \wedge w$ represents the spanned 3-space weighted by the volume of the oriented parallelepiped with edges $u$, $v$, and $w$.

Projective geometry

Decomposable k-vectors in $\bigwedge^k(V)$ correspond to weighted k-dimensional linear subspaces of $V$. In particular, the Grassmannian of k-dimensional subspaces of $V$, denoted $\operatorname{Gr}_k(V)$, can be naturally identified with an algebraic subvariety of the projective space $\mathbf{P}(\bigwedge^k(V))$. This is called the Plücker embedding, and the image of the embedding can be characterized by the Plücker relations.
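For planes in a 4-dimensional space the embedding can be made concrete. The sketch below assumes coordinates on the standard basis and uses hypothetical helper names; it computes the six coefficients of $u \wedge v$ (the Plücker coordinates of the plane spanned by u and v) and evaluates the single quadratic Plücker relation that holds in this case.

```python
from itertools import combinations

def plucker(u, v):
    """Coefficients of u ^ v on the basis e_i ^ e_j (i < j): the 2 x 2 minors."""
    n = len(u)
    return {(i, j): u[i] * v[j] - u[j] * v[i] for i, j in combinations(range(n), 2)}

def plucker_relation(p):
    """Left-hand side of the Plücker relation for 2-vectors in 4-space."""
    return p[0, 1] * p[2, 3] - p[0, 2] * p[1, 3] + p[0, 3] * p[1, 2]

u = (1, 2, 3, 4)
v = (0, 1, -1, 2)
p = plucker(u, v)

# Another basis of the same plane: u2 = 2u + v, v2 = u + 3v (change-of-basis determinant 5).
u2 = tuple(2 * a + b for a, b in zip(u, v))
v2 = tuple(a + 3 * b for a, b in zip(u, v))
p2 = plucker(u2, v2)

# e0 ^ e1 + e2 ^ e3 is not decomposable, so the relation fails for it.
not_a_plane = {(0, 1): 1, (0, 2): 0, (0, 3): 0, (1, 2): 0, (1, 3): 0, (2, 3): 1}
```

Changing the basis of the plane rescales all coordinates by the same factor, so the plane determines a point of projective space rather than a vector.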

Differential geometry

The exterior algebra has notable applications in differential geometry, where it is used to define differential forms. Differential forms are mathematical objects that evaluate the length of vectors, areas of parallelograms, and volumes of higher-dimensional bodies, so they can be integrated over curves, surfaces and higher dimensional manifolds in a way that generalizes the line integrals and surface integrals from calculus. A differential form at a point of a differentiable manifold is an alternating multilinear form on the tangent space at the point. Equivalently, a differential form of degree k is a linear functional on the kth exterior power of the tangent space. As a consequence, the exterior product of multilinear forms defines a natural exterior product for differential forms. Differential forms play a major role in diverse areas of differential geometry.
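The description of a form at a point as an alternating multilinear function can be illustrated in coordinates. The sketch below assumes one common normalization, in which the exterior product of k covectors, evaluated on k vectors, is the determinant of the matrix of pairings; some authors insert factorial factors instead. The function names are hypothetical.

```python
def det(m):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if not m:
        return 1
    return sum(
        (-1) ** j * entry * det([row[:j] + row[j + 1:] for row in m[1:]])
        for j, entry in enumerate(m[0])
    )

def pair(covector, vector):
    """Value of a covector on a tangent vector, both given by components."""
    return sum(a * x for a, x in zip(covector, vector))

def wedge_of_covectors(*covectors):
    """Return the k-form a1 ^ ... ^ ak as a function of k tangent vectors."""
    def form(*vectors):
        return det([[pair(a, v) for v in vectors] for a in covectors])
    return form

dx, dy = (1, 0, 0), (0, 1, 0)
area_form = wedge_of_covectors(dx, dy)  # measures signed area projected to the xy-plane

v, w = (1, 2, 5), (3, 4, 6)
value = area_form(v, w)
swapped = area_form(w, v)
repeated = area_form(v, v)
```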

An alternate approach defines differential forms in terms of germs of functions.

In particular, the exterior derivative gives the exterior algebra of differential forms on a manifold the structure of a differential graded algebra. The exterior derivative commutes with pullback along smooth mappings between manifolds, and it is therefore a natural differential operator. The exterior algebra of differential forms, equipped with the exterior derivative, is a cochain complex whose cohomology is called the de Rham cohomology of the underlying manifold and plays a vital role in the algebraic topology of differentiable manifolds.

Representation theory

In representation theory, the exterior algebra is one of the two fundamental Schur functors on the category of vector spaces, the other being the symmetric algebra. Together, these constructions are used to generate the irreducible representations of the general linear group (see Fundamental representation).

Superspace

The exterior algebra over the complex numbers is the archetypal example of a superalgebra, which plays a fundamental role in physical theories pertaining to fermions and supersymmetry. A single element of the exterior algebra is called a supernumber or Grassmann number. The exterior algebra itself is then just a one-dimensional superspace: it is the set of all of the points in the exterior algebra. The topology on this space is essentially the weak topology, the open sets being the cylinder sets. An n-dimensional superspace is just the $n$-fold product of exterior algebras.

Lie algebra homology

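Let $L$ be a Lie algebra over a field $K$; then it is possible to define the structure of a chain complex on the exterior algebra of $L$. This is a $K$-linear mapping

$$\partial : \bigwedge\nolimits^{p+1} L \to \bigwedge\nolimits^{p} L$$

defined on decomposable elements by

$$\partial(x_1 \wedge \cdots \wedge x_{p+1}) = \sum_{j<\ell} (-1)^{j+\ell+1} [x_j, x_\ell] \wedge x_1 \wedge \cdots \wedge \hat{x}_j \wedge \cdots \wedge \hat{x}_\ell \wedge \cdots \wedge x_{p+1},$$

where a hat marks an omitted factor; sign and normalization conventions for this formula vary between authors.

The Jacobi identity holds if and only if $\partial\partial = 0$, and so this is a necessary and sufficient condition for an anticommutative nonassociative algebra $L$ to be a Lie algebra. Moreover, in that case $\bigwedge L$ is a chain complex with boundary operator $\partial$. The homology associated to this complex is the Lie algebra homology.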
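The link between the Jacobi identity and the vanishing of the squared boundary can be checked in the lowest degree where it is visible. The sketch below is an illustration under stated assumptions: it ignores any overall normalization factor, takes the boundary of a 2-blade a ∧ b to be [a, b], and takes the boundary of x ∧ y ∧ z to be [x, y] ∧ z − [x, z] ∧ y + [y, z] ∧ x. The bracket `broken` is a made-up anticommutative product that is not a Lie bracket.

```python
def cross(a, b):
    """Cross product on 3-space: a Lie bracket."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def broken(a, b):
    """Anticommutative bilinear product with [e0,e1] = e0, [e1,e2] = e1, [e0,e2] = 0."""
    return (a[0] * b[1] - a[1] * b[0], a[1] * b[2] - a[2] * b[1], 0)

def boundary_squared(bracket, x, y, z):
    """Boundary applied twice to x ^ y ^ z, under the conventions stated above."""
    terms = [
        bracket(bracket(x, y), z),                          # from  [x, y] ^ z
        tuple(-c for c in bracket(bracket(x, z), y)),       # from -[x, z] ^ y
        bracket(bracket(y, z), x),                          # from  [y, z] ^ x
    ]
    return tuple(sum(components) for components in zip(*terms))

e0, e1, e2 = (1, 0, 0), (0, 1, 0), (0, 0, 1)

lie_case = boundary_squared(cross, (1, 2, 3), (4, 5, 6), (7, 8, 10))
lie_basis_case = boundary_squared(cross, e0, e1, e2)
non_lie_case = boundary_squared(broken, e0, e1, e2)
```

With these conventions the result is exactly the Jacobi expression [[x, y], z] + [[y, z], x] + [[z, x], y], so it vanishes for the cross product and fails to vanish for the made-up bracket.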

Homological algebra

The exterior algebra is the main ingredient in the construction of the Koszul complex, a fundamental object in homological algebra.

History

The exterior algebra was first introduced by Hermann Grassmann in 1844 under the blanket term of Ausdehnungslehre, or Theory of Extension. This referred more generally to an algebraic (or axiomatic) theory of extended quantities and was one of the early precursors to the modern notion of a vector space. Saint-Venant also published similar ideas of exterior calculus for which he claimed priority over Grassmann.

The algebra itself was built from a set of rules, or axioms, capturing the formal aspects of Cayley and Sylvester's theory of multivectors. It was thus a calculus, much like the propositional calculus, except focused exclusively on the task of formal reasoning in geometrical terms. In particular, this new development allowed for an axiomatic characterization of dimension, a property that had previously only been examined from the coordinate point of view.

The import of this new theory of vectors and multivectors was lost to mid-19th-century mathematicians, until being thoroughly vetted by Giuseppe Peano in 1888. Peano's work also remained somewhat obscure until the turn of the century, when the subject was unified by members of the French geometry school (notably Henri Poincaré, Élie Cartan, and Gaston Darboux) who applied Grassmann's ideas to the calculus of differential forms.

A short while later, Alfred North Whitehead, borrowing from the ideas of Peano and Grassmann, introduced his universal algebra. This then paved the way for the 20th-century developments of abstract algebra by placing the axiomatic notion of an algebraic system on a firm logical footing.

See also

- Alternating algebra

- Exterior calculus identities

- Clifford algebra, a generalization of exterior algebra to a nonzero quadratic form

- Geometric algebra

- Koszul complex

- Multilinear algebra

- Symmetric algebra, the symmetric analog

- Tensor algebra

- Weyl algebra, a quantum deformation of the symmetric algebra by a symplectic form

Notes

- Penrose, R. (2007). The Road to Reality. Vintage books. ISBN 978-0-679-77631-4.

- Wheeler, Misner & Thorne 1973, p. 83

- Grassmann (1844) introduced these as extended algebras (cf. Clifford 1878).

- The term k-vector is not equivalent to and should not be confused with similar terms such as 4-vector, which in a different context could mean an element of a 4-dimensional vector space. A minority of authors use the term k-multivector instead of k-vector, which avoids this confusion.

- This axiomatization of areas is due to Leopold Kronecker and Karl Weierstrass; see Bourbaki (1989b, Historical Note). For a modern treatment, see Mac Lane & Birkhoff (1999, Theorem IX.2.2). For an elementary treatment, see Strang (1993, Chapter 5).

- This definition is a standard one. See, for instance, Mac Lane & Birkhoff (1999).

- A proof of this can be found in more generality in Bourbaki (1989).

- See Bourbaki (1989, §III.7.1), and Mac Lane & Birkhoff (1999, Theorem XVI.6.8). More detail on universal properties in general can be found in Mac Lane & Birkhoff (1999, Chapter VI), and throughout the works of Bourbaki.

- See Bourbaki (1989, §III.7.5) for generalizations.

- Note: The orientations shown here are not correct; the diagram simply gives a sense that an orientation is defined for every k-form.

- Wheeler, J.A.; Misner, C.; Thorne, K.S. (1973). Gravitation. W.H. Freeman & Co. pp. 58–60, 83, 100–9, 115–9. ISBN 0-7167-0344-0.

- Indeed, the exterior algebra of $V$ is the enveloping algebra of the abelian Lie superalgebra structure on $V$.

- This part of the statement also holds in greater generality if $V$ and $W$ are modules over a commutative ring: that $\bigwedge$ converts epimorphisms to epimorphisms. See Bourbaki (1989, Proposition 3, §III.7.2).

- This statement generalizes only to the case where V and W are projective modules over a commutative ring. Otherwise, it is generally not the case that $\bigwedge$ converts monomorphisms to monomorphisms. See Bourbaki (1989, Corollary to Proposition 12, §III.7.9).

- Such a filtration also holds for vector bundles, and projective modules over a commutative ring. This is thus more general than the result quoted above for direct sums, since not every short exact sequence splits in other abelian categories.

- James, A.T. (1983). "On the Wedge Product". In Karlin, Samuel; Amemiya, Takeshi; Goodman, Leo A. (eds.). Studies in Econometrics, Time Series, and Multivariate Statistics. Academic Press. pp. 455–464. ISBN 0-12-398750-4.

- DeWitt, Bryce (1984). "Chapter 1". Supermanifolds. Cambridge University Press. p. 1. ISBN 0-521-42377-5.

- Kannenberg (2000) published a translation of Grassmann's work in English; he translated Ausdehnungslehre as Extension Theory.

- J Itard, Biography in Dictionary of Scientific Biography (New York 1970–1990).

- Authors have in the past referred to this calculus variously as the calculus of extension (Whitehead 1898; Forder 1941), or extensive algebra (Clifford 1878), and recently as extended vector algebra (Browne 2007).

- Bourbaki 1989, p. 661.

References

Mathematical references

- Bishop, R.; Goldberg, S.I. (1980), Tensor analysis on manifolds, Dover, ISBN 0-486-64039-6

- Includes a treatment of alternating tensors and alternating forms, as well as a detailed discussion of Hodge duality from the perspective adopted in this article.

- Bourbaki, Nicolas (1989), Elements of mathematics, Algebra I, Springer-Verlag, ISBN 3-540-64243-9

- This is the main mathematical reference for the article. It introduces the exterior algebra of a module over a commutative ring (although this article specializes primarily to the case when the ring is a field), including a discussion of the universal property, functoriality, duality, and the bialgebra structure. See §III.7 and §III.11.

- Bryant, R.L.; Chern, S.S.; Gardner, R.B.; Goldschmidt, H.L.; Griffiths, P.A. (1991), Exterior differential systems, Springer-Verlag

- This book contains applications of exterior algebras to problems in partial differential equations. Rank and related concepts are developed in the early chapters.

- Mac Lane, S.; Birkhoff, G. (1999), Algebra, AMS Chelsea, ISBN 0-8218-1646-2

- Chapter XVI sections 6–10 give a more elementary account of the exterior algebra, including duality, determinants and minors, and alternating forms.

- Sternberg, Shlomo (1964), Lectures on Differential Geometry, Prentice Hall

- Contains a classical treatment of the exterior algebra as alternating tensors, and applications to differential geometry.

Historical references

- Bourbaki (1989, Historical note on chapters II and III)

- Clifford, W. (1878), "Applications of Grassmann's Extensive Algebra", American Journal of Mathematics, 1 (4), The Johns Hopkins University Press: 350–358, doi:10.2307/2369379, JSTOR 2369379

- Forder, H.G. (1941), The Calculus of Extension, Internet Archive

- Grassmann, Hermann (1844), Die Lineale Ausdehnungslehre – Ein neuer Zweig der Mathematik (in German) (The Linear Extension Theory – A new Branch of Mathematics)

- Kannenberg, Lloyd (2000), Extension Theory (translation of Grassmann's Ausdehnungslehre), American Mathematical Society, ISBN 0-8218-2031-1

- Peano, Giuseppe (1888), Calcolo Geometrico secondo l'Ausdehnungslehre di H. Grassmann preceduto dalle Operazioni della Logica Deduttiva; Kannenberg, Lloyd (1999), Geometric calculus: According to the Ausdehnungslehre of H. Grassmann, Birkhäuser, ISBN 978-0-8176-4126-9.

- Whitehead, Alfred North (1898), "A Treatise on Universal Algebra, with Applications", Nature, 58 (1504), Cambridge: 385, Bibcode:1898Natur..58..385G, doi:10.1038/058385a0, S2CID 3985954

Other references and further reading

- Browne, J.M. (2007), Grassmann algebra – Exploring applications of Extended Vector Algebra with Mathematica, archived from the original on 2009-02-19, retrieved 2007-05-09

- An introduction to the exterior algebra, and geometric algebra, with a focus on applications. Also includes a history section and bibliography.

- Spivak, Michael (1965), Calculus on manifolds, Addison-Wesley, ISBN 978-0-8053-9021-6

- Includes applications of the exterior algebra to differential forms, specifically focused on integration and Stokes's theorem. The notation $\bigwedge^k V$ in this text is used to mean the space of alternating k-forms on V; i.e., for Spivak $\bigwedge^k V$ is what this article would call $\bigwedge^k V^*$. Spivak discusses this in Addendum 4.

- Strang, G. (1993), Introduction to linear algebra, Wellesley-Cambridge Press, ISBN 978-0-9614088-5-5

- Includes an elementary treatment of the axiomatization of determinants as signed areas, volumes, and higher-dimensional volumes.

- Onishchik, A.L. (2001), "Exterior algebra", Encyclopedia of Mathematics, EMS Press

- Fleming, Wendell (2012), "7. Exterior algebra and differential calculus", Functions of Several Variables (2nd ed.), Springer, pp. 275–320, ISBN 978-1-4684-9461-7

- This textbook in multivariate calculus introduces the exterior algebra of differential forms adroitly into the calculus sequence for colleges.

- Shafarevich, I.R.; Remizov, A.O. (2012). Linear Algebra and Geometry. Springer. ISBN 978-3-642-30993-9.

- Chapter 10: The Exterior Product and Exterior Algebras

- "The Grassmann method in projective geometry" A compilation of English translations of three notes by Cesare Burali-Forti on the application of exterior algebra to projective geometry

- C. Burali-Forti, "Introduction to Differential Geometry, following the method of H. Grassmann" An English translation of an early book on the geometric applications of exterior algebras

- "Mechanics, according to the principles of the theory of extension" An English translation of one of Grassmann's papers on the applications of exterior algebra
