Identity replacement technology is any technology used to conceal all or part of a person's identity, either in real life or online. Examples include face masks, face authentication technology, and deepfakes, which spread doctored videos and images across the Internet. Face replacement and identity masking are used by both criminals and law-abiding citizens. Criminals may use identity replacement technology to carry out heists or robberies. Law-abiding citizens may use it to prevent governments or other entities from tracking private information such as their location, social connections, and daily behavior.

Hackers use online identity theft, information stealing, and deepfakes to replace or alter a victim's identity. Countermeasures include face liveness detection, obfuscation of sensitive information, and location privacy obfuscation. More advanced obfuscation techniques can conceal a person's location. The main way to achieve this kind of obfuscation is to replace personal information, such as a person's location, with anonymized identities or with values produced by obfuscation operators. Researchers are also studying the effectiveness of biometric authentication, such as fingerprints and faces, as a replacement for identifiers such as a Social Security number (SSN).

In biotechnology, gene sequencing and identity adjustment are common areas of research related to identity replacement. With cutting-edge technology, it is possible to change the identity of a person or of their offspring. These scientific advances raise ethical issues concerning cloning, identity change, and societal and organizational transformation.

Features replacement

Feature replacement technology is any technology that changes, alters, hides, or misrepresents a person's features. Examples include fingerprint replacement, face replacement, and replacement of pupil-based authentication. The technologies involved range from physical masks to generated 3D videos and images.

Criminal uses

A variety of technologies attempt to fool facial recognition software, including anti-facial recognition masks. 3D masks that replace body features, usually faces, can be made from materials such as plastic, cotton, or leather. These masks range from realistic imitations of a specific person to unrealistic characters that simply hide the wearer's identity. Criminals and hackers choose among this range of masks depending on the objectives of the crime and on environmental factors. Generally, the more planning and execution a crime involves, the more effort criminals put into creating their 3D masks.

Feature replacement serves many purposes. Cybercriminals and real-world criminals use masks, or 3D-generated images of masks, to hide from security systems or to pass through security checks. Typically, they first identify a victim who has access to a particular security system. They then wear a mask in public to commit fraud and pass through security systems as the victim of the identity theft. Using masks and 3D-printed items to cover body features while committing a crime is prohibited by laws such as anti-mask laws.

Other reasons for features replacement

Face replacement technology is also used to hide one's identity from third-party trackers, monitors, and government officials. Although few individuals do this, hiding one's identity in this way, either online or in person, is done mainly to avoid government tracking, for entertainment, or for religious reasons. For example, people may decide to wear a mask indoors to avoid government tracking.

Deepfakes, spoofing, and anti-spoofing

Deepfakes and synthetic media

Deepfake usages

Deepfakes, a type of identity replacement technology, are edited pictures or videos in which the identity of a person is replaced. This digital forgery is used to manipulate information, defame people, and blackmail individuals. Through editing techniques such as face replacement and pixel- or color-level manipulation, deepfakes can closely resemble real images.

Deepfakes are classified into four types of face manipulation: entire face synthesis, identity swap, attribute manipulation, and expression swap. More specific techniques include face swapping, lip syncing, motion transfer, and audio generation. Although synthetic media are best known for political disinformation, a less known phenomenon is financial fraud, in which cybercriminals use deepfakes to steal financial data and profit from it. Hackers and criminals use deepfakes to break into social media accounts, bank security systems, and the financial information of wealthy individuals. Attack scenarios can be divided into narrowcast attacks, which target specific individuals or institutions, and broadcast attacks, which target a wide audience. Examples include deepfake voice phishing, fabricated private remarks, and synthetic social media profiles of fake identities. According to research, preventing deepfake attacks requires collaboration among key stakeholders such as firm employees, industry-wide experts, and multi-stakeholder groups.

Technology used to deter deepfakes

Possible methods of deterring deepfakes include early detection of face mismatches, analysis of individual facial features, confirmation of the identity shown in images or videos, and multi-feature techniques that assess face liveness. Further research is being done on deepfake techniques such as face morphing and face de-identification. However, deepfake detection techniques tend to perform worse on more advanced deepfakes, often fail to generalize to conditions they were not trained on, and rely on databases and technology that must be kept up to date as deepfake techniques evolve.

Deepfakes can be used as weapons to spread misinformation and threaten democratic systems through identity replacement. Because some deepfakes are cheap and easy to produce, they can effectively replace identities and spread misinformation within and across nations. Hackers can use bots or deepfakes to spread propaganda and disinformation among adversaries, and such campaigns could undermine democratic processes internationally. The public may also become distrustful because politicians or foreign countries could use deepfakes.

Spoofing and anti-spoofing

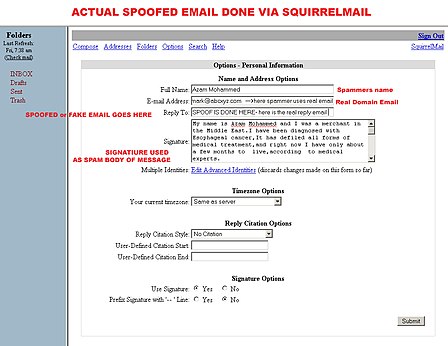

Spoofing

Spoofing, a concept related to deepfakes, is a method of hacking and identity manipulation in which an attacker impersonates a source that the target person or security system knows and trusts. Spoofing attacks are easy to launch because face recognition is commonly used to unlock mobile devices. One way hackers get into such a system is with a synthetic or forged biometric sample that fools the sensors, so that the hacker is accepted as the genuine user.

Spoofing can also involve fake physical artifacts, such as printed masks and fake fingers, which hackers use to deceive biometric authentication technology. Because spoofing can be attempted on a mass scale, it poses global political, ethical, and economic threats that go beyond a country's borders. Mass crimes involving cybersecurity breaches, political hacking, and personal identity theft can damage the international landscape.

Anti-spoofing techniques

Payment information, personal information, and biometric information are all potential targets for exploitation by hackers. Anti-spoofing techniques operate at either the feature level or the sensor level, and their goal is to stop illegitimate users from accessing important and personal information. Cybersecurity experts widely use four main groups of anti-spoofing techniques: motion analysis, texture analysis, image quality analysis, and hardware-based analysis that integrates software components. Another technique, color texture analysis, examines the joint color-texture information of facial features in images and videos. When evaluated across databases containing replayed videos of spoof attacks, many of these methods can detect face liveness and facial symmetry in a controlled environment.
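Color texture analysis of this kind is often built on Local Binary Pattern (LBP) histograms computed separately for each color channel and concatenated into one feature vector. The Python sketch below shows only that feature-extraction step. The basic 3x3 LBP variant, the function names, and the assumption that the caller has already converted the face crop into suitable color channels (for example HSV or YCbCr) are illustrative choices; the classifier that would separate real faces from printed or replayed ones (such as an SVM) is not shown.

```python
from typing import List

Channel = List[List[int]]  # one color channel as a 2D grid of 0-255 values

# Offsets of the 8 neighbors, clockwise from the top-left pixel
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(channel: Channel) -> List[float]:
    """Normalized 256-bin histogram of basic 3x3 LBP codes for one channel."""
    h, w = len(channel), len(channel[0])
    hist = [0] * 256
    for i in range(1, h - 1):          # border pixels lack a full neighborhood
        for j in range(1, w - 1):
            center = channel[i][j]
            code = 0
            for bit, (di, dj) in enumerate(NEIGHBORS):
                if channel[i + di][j + dj] >= center:
                    code |= 1 << bit   # set one bit per neighbor >= center
            hist[code] += 1
    total = sum(hist) or 1
    return [count / total for count in hist]


def color_texture_features(channels: List[Channel]) -> List[float]:
    """Concatenate the per-channel LBP histograms into one descriptor."""
    features: List[float] = []
    for channel in channels:
        features.extend(lbp_histogram(channel))
    return features
```

A perfectly flat patch puts every code in bin 255, while a bright isolated pixel produces code 0; real face crops and spoof artifacts such as print texture or screen moiré spread their mass over different bins, which is the kind of difference a downstream classifier would learn.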

Anti-spoofing and deepfake identification are prone to errors and attacks. For example, one study used an attentional adversarial attack generative network to generate fake images that match the original pictures in terms of features, face strength, and semantic information, in order to attack face recognition systems. One drawback of that model is that it handles only one attack target; however, research is being done on using various models to target multiple attacks. Other shortcomings of anti-spoofing techniques include failure to detect spoofing across databases, failure to carry over to real-life scenarios, and performance issues related to the limits of the technologies involved.

Identity change and biotech enhancement

Biotech enhancement

Gene sequencing and gene therapy are cutting-edge technologies that biotech researchers use to explore ways of altering the identities or genes of humans and their offspring. Through gene alteration and feature enhancement, one can change the structural traits of offspring. A related, more futuristic concept is cloning, the replication of human beings.

On a broader level, identity change leads to social transformation, and identity change and organizational transformation sometimes occur at the same time. For example, profound socio-political change in Ireland has been linked to collective and individual identity change. Identity change is also associated with economic, political, and social factors in a changing environment. Individuals retain the right to make personal choices, but these choices are often shaped by their immediate surroundings. Enhancement and alteration of the human body and identity are thus connected to broader social transformations: as society changes and evolves, individuals may choose to evolve with it. Researchers also consider generational factors as biotechnology advances.

Ethical concerns of biotech enhancement

Current objections to enhancement biotechnology include questions about the authenticity of enhancement and about the fundamental attributes and values of being human. Key concerns include safety, fair distribution, and violations of identity traits. Current research seeks to clarify what human identity means, how gene alteration relates to human enhancement, and what alterations to offspring mean across generations. More research is needed for scientists to determine the viability and ethical implications of advanced biotechnology.

Face authentication and biometric identification

Biometric identification, including face authentication technology, is used by firms, governments, and other organizations for security checks and personal identification. It is especially important for protecting the private materials and information of a firm or government. As security technology evolves, biometric authentication methods are replacing physical ID cards, identification numbers such as the SSN, and personal information written on paper.

3D sensor analysis to test face authenticity

3D cameras and depth analysis can be used to detect spoofing and fraudulent data. Biometric identification that captures a wide range of depth with high flexibility can help detect spoofing attempts by hackers and identity thieves. Liveness assurance and face authentication can help prevent face identity manipulation and forgery, because liveness detection can use color, depth, the angles of facial features, and other factors to distinguish real faces from fake ones. Because it is easy to make a 3D mask or create a deepfake online, fake identities are increasingly common in the tech industry. Common methods for face authentication include SVM classifiers, image quality assessment, pupil tracking, and color texture analysis. Biometric identification technology with higher flexibility detects spoofing attacks better.
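The value of depth data can be illustrated with a simple geometric check: a printed photo or a phone screen is close to a flat plane, even when tilted, while a real face has several centimeters of relief. The Python sketch below fits a plane to a depth map by least squares and measures the RMS residual. It is a hypothetical heuristic for illustration, not the method of the cited study; the 5 mm threshold in `looks_live` is an assumed value, and a realistic system would also need to handle sensor noise and 3D masks, which are not flat.

```python
import math
from typing import List, Sequence

DepthMap = List[List[float]]  # depth in millimeters, one list per image row


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ s = v with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("depth map too small or degenerate for a plane fit")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        solution.append(_det3(mc) / d)
    return solution


def planar_residual(depth: DepthMap) -> float:
    """RMS distance (mm) between depth samples and their best-fit plane z = a*x + b*y + c."""
    pts = [(float(x), float(y), z) for y, row in enumerate(depth) for x, z in enumerate(row)]
    n = float(len(pts))
    sx = sum(x for x, _, _ in pts)
    sy = sum(y for _, y, _ in pts)
    sz = sum(z for _, _, z in pts)
    sxx = sum(x * x for x, _, _ in pts)
    syy = sum(y * y for _, y, _ in pts)
    sxy = sum(x * y for x, y, _ in pts)
    sxz = sum(x * z for x, _, z in pts)
    syz = sum(y * z for _, y, z in pts)
    # Normal equations of the least-squares plane fit
    a, b, c = _solve3([[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]], [sxz, syz, sz])
    squared = sum((z - (a * x + b * y + c)) ** 2 for x, y, z in pts)
    return math.sqrt(squared / n)


def looks_live(depth: DepthMap, min_relief_mm: float = 5.0) -> bool:
    """Hypothetical rule: accept the sample only if it has enough 3D relief."""
    return planar_residual(depth) > min_relief_mm
```

A tilted planar surface such as `[[500 + 2*x + 3*y for x in range(5)] for y in range(5)]` gives a residual near zero, whereas a 5x5 map whose central 3x3 block is 40 mm closer to the camera gives a residual of about 19.2 mm.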

3D face reconstruction and face alignment can support biometric identification systems when they authenticate individuals. An end-to-end method called the Position Map Regression Network (PRNet) reconstructs a person's 3D facial geometry from a single 2D image. Key metrics for evaluating alignment and reconstruction include reconstruction speed, alignment runtime, and the accuracy of the alignment compared with the original image. The method represents the 3D face as a position map: a 2D image in UV space in which each pixel stores the 3D coordinates of a point on the face, so that dense alignment can be read directly from the map. 3D shapes can be acquired by 3D sensors, which also capture specific features within the face shape. A convolutional neural network is trained to regress the position map from the input 2D image, recovering both facial and semantic information. Overall, this position-map method of reconstruction and alignment can be used in cybersecurity authentication, biometric verification, and identity matching.
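Once a network has produced a position map, both dense and sparse alignment reduce to reading values out of it. The Python sketch below assumes a position map stored as nested lists indexed `[v][u]`, with each cell holding an `(x, y, z)` point whose `x` and `y` are in input-image pixel coordinates. The `LANDMARK_UV` table and the function names are hypothetical, and the regression network itself is not shown.

```python
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]
PositionMap = List[List[Point3D]]  # indexed [v][u]; each cell holds (x, y, z)

# Hypothetical UV coordinates (u, v) of a few landmarks. A real model ships a
# fixed index table defined once for its UV template.
LANDMARK_UV: Dict[str, Tuple[int, int]] = {
    "left_eye": (1, 1),
    "right_eye": (3, 1),
    "nose_tip": (2, 2),
}


def dense_vertices(pos_map: PositionMap,
                   face_mask: Optional[List[List[bool]]] = None) -> List[Point3D]:
    """Dense alignment: every face pixel of the UV map is one 3D vertex."""
    return [
        point
        for v, row in enumerate(pos_map)
        for u, point in enumerate(row)
        if face_mask is None or face_mask[v][u]
    ]


def sparse_landmarks(pos_map: PositionMap) -> Dict[str, Point3D]:
    """Sparse alignment: landmarks are read from fixed UV locations."""
    return {name: pos_map[v][u] for name, (u, v) in LANDMARK_UV.items()}


def to_image_plane(point: Point3D) -> Tuple[float, float]:
    """Drop depth; assumes x and y are already input-image pixel coordinates."""
    x, y, _ = point
    return (x, y)
```

Because the UV layout is fixed, the same indices select the same facial points for every person, which is what lets a single regressed map serve both reconstruction and alignment.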

Fingerprint biometric identification

Fingerprinting is another biometric identification method researched by cybersecurity firms and governments. Like face authentication, fingerprint verification can be used to counter identity theft and fraud. One study developed an identity-authentication system around a minutiae-extraction algorithm, which extracts verifiable information from a fingerprint scan. Its matching is alignment-based: input minutiae are aligned with a stored template so that a person's identity can be verified faster and more accurately. The goal of all biometric authentication methods, including fingerprint identification, is to authenticate quickly and accurately, and systems and alignment technologies are constantly updated toward that goal. Drawbacks of fingerprint identification include large distortions in poor-quality images, straight-line deformations, vague transformations that degrade authentication quality, and minutiae missing from parts of an image. However, multiple biometric tools, such as face and fingerprint recognition, can be combined to achieve better and more accurate performance.
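Alignment-based minutiae matching can be illustrated with a simplified brute-force matcher. Each minutia is modeled as `(x, y, θ)`; every pairing of one template minutia with one input minutia is tried as an alignment hypothesis, the input is rotated and translated accordingly, and the score is the largest fraction of minutiae that find a partner within distance and angle tolerances. This Python sketch is a simplification, not the algorithm of the cited study, and the tolerance values are hypothetical.

```python
import math
from typing import Sequence, Tuple

Minutia = Tuple[float, float, float]  # (x, y) in pixels, ridge direction in radians


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles."""
    d = (a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def rigid_transform(m: Minutia, dx: float, dy: float, rot: float) -> Minutia:
    """Rotate a minutia about the origin by `rot`, then translate by (dx, dy)."""
    x, y, theta = m
    c, s = math.cos(rot), math.sin(rot)
    return (c * x - s * y + dx, s * x + c * y + dy, (theta + rot) % (2 * math.pi))


def match_score(template: Sequence[Minutia], probe: Sequence[Minutia],
                dist_tol: float = 10.0, angle_tol: float = math.radians(15)) -> float:
    """Best fraction of paired minutiae over all single-pair alignment hypotheses."""
    best = 0
    for ref_t in template:
        for ref_p in probe:
            # Hypothesis: ref_p and ref_t are the same physical minutia
            rot = ref_t[2] - ref_p[2]
            c, s = math.cos(rot), math.sin(rot)
            dx = ref_t[0] - (c * ref_p[0] - s * ref_p[1])
            dy = ref_t[1] - (s * ref_p[0] + c * ref_p[1])
            aligned = [rigid_transform(m, dx, dy, rot) for m in probe]
            used = set()
            for a in aligned:
                for k, t in enumerate(template):
                    close = math.hypot(a[0] - t[0], a[1] - t[1]) <= dist_tol
                    if k not in used and close and angle_diff(a[2], t[2]) <= angle_tol:
                        used.add(k)  # each template minutia is paired at most once
                        break
            best = max(best, len(used))
    return best / max(len(template), len(probe), 1)
```

Because every pair is tried, the cost is O(n²m²) for n template and m input minutiae, which is fine for illustration but not for large galleries; practical matchers prune hypotheses and tolerate the distortions listed above.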

Applications of 3D sensors and biometrics

The components of 3D sensors, such as key electronic parts and sensor systems, are becoming smaller and better, with emphasis on compactness, shape-detection effectiveness, portability, and imaging strength. 3D imaging and optical sensors can be expensive, but costs can fall when manufacturers and suppliers make individual sensor components cheaper and flexible enough to fit a variety of sensors and cameras. Virtual rendering and prototyping tools are integrated into 3D sensor and camera systems to aid facial reconstruction, identity search, and shape design. Multiple 3D sensors can be combined into a sensor system that captures images more effectively than a single sensor or camera. 3D sensors have applications in manufacturing, optics, and robotics. Key industries that could use 3D cameras include robotics, law enforcement, automatic authentication systems, and product development.

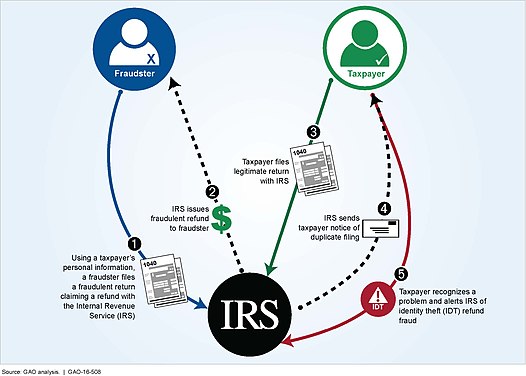

Identity theft

Identity theft occurs when a thief steals a victim's identity and poses as the victim. Identity theft has implications on both small and large scales. Individual identity theft may be limited to a single person whose identity the thief assumes. Motives include entertainment, malicious hacking, revenge, and political sabotage. Mass-scale identity theft can involve political sabotage, financial and economic heists and crimes, and harmful social change.

Identity theft and consumer payment

Identity theft and identity replacement have shaped consumer spending over the past years. One way to analyze identity theft is to map incidents to determine their geographical locations, environmental factors, and purposes. The payment instruments used in different payment systems can affect how identity theft is used to obtain financial information and commit financial fraud, so identity theft has implications for how consumers adopt and use payment methods. Although consumers use a variety of payment methods, geographical areas with more identity theft incidents tend to show increased use of payment methods such as money orders, travelers' checks, prepaid cards, and credit cards. Electronic payments are widely used as payment technology evolves. However, payment systems, whether based on checks, cards, or cash, require periodic updates to keep up with evolving identity theft methods.

Because more consumers now shop and conduct financial transactions online, an economy built on transactions involving customer data creates more opportunities for fraudulent transactions. A thief could hack data related to common financial products and transactions such as product payments, loans, mortgages, stocks, and options trades. In one common form of identity theft, the thief obtains a service or product but pays for it with someone else's financial data or account information, so the cost is charged to the victim. The same victim's identity may be used multiple times by different thieves using similar or different methods. Solutions include consumer protections, credit freezes when fraud occurs, credit verification, and penalties and enforcement.

Identity theft in politics

Identity theft can also involve large-scale political manipulation and hacking that harm international politics. Identity thieves can use identity replacement methods such as biometric replacement, face masks, deepfakes, and theft of personal information to commit political sabotage. For example, an identity thief could commit voter fraud by impersonating one or more voters and casting ballots in their names. The thief could also hack a politician's social media account and post scandalous or defamatory content about that politician.

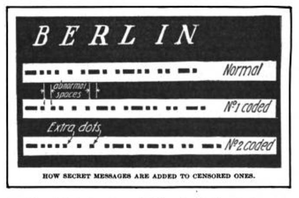

Obfuscation and identity privacy protection

Definition of obfuscation

In software, obfuscation means protecting code by making its patterns, structure, and lines difficult for anyone other than its author to understand. This helps the programmer deter attacks such as reverse engineering and shell injection. Obfuscation is also used to protect a person's identity online, including their privacy, location, and behavior.

Methods of obfuscation

Obfuscation operators can be applied to the area in which a person is located, according to that person's privacy protection and location preferences. Probabilistic tools such as the joint distribution function are used to evaluate obfuscation operators and to test how they can protect individuals' location privacy without sacrificing app features and efficiency. Obfuscation can thus make location and related information anonymous and useless to hackers trying to breach individuals' privacy. Adversary models can be used to evaluate combinations of operators, testing their viability based on adversary awareness, utility functions, and the robustness of operator families.
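Ardagna et al. represent a measured location as a circular area and obfuscate it with operators that, for example, enlarge the radius or shift the center. The Python sketch below shows those two operators on a local planar grid. The class and function names are hypothetical, the relevance score for enlargement is taken here as the ratio of the original to the obfuscated area, and the uniformly random shift direction is one possible choice rather than the paper's exact formulation.

```python
import math
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class LocationArea:
    """Circular area (meters, local planar coordinates) assumed to contain the user."""
    x: float
    y: float
    radius: float


def enlarge(area: LocationArea, new_radius: float) -> Tuple[LocationArea, float]:
    """Enlarge operator: keep the center, grow the radius.

    Returns the obfuscated area and a relevance score in (0, 1], computed as the
    ratio of the two areas (how much of the original accuracy is kept).
    """
    if new_radius < area.radius:
        raise ValueError("enlarge must not shrink the area")
    relevance = (area.radius / new_radius) ** 2
    return LocationArea(area.x, area.y, new_radius), relevance


def shift(area: LocationArea, distance: float, rng: random.Random) -> LocationArea:
    """Shift operator: move the center `distance` meters in a random direction."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return LocationArea(area.x + distance * math.cos(angle),
                        area.y + distance * math.sin(angle),
                        area.radius)


# Example: a 100 m location fix is released as a larger, displaced area.
rng = random.Random(7)
measured = LocationArea(0.0, 0.0, 100.0)
coarse, relevance = enlarge(measured, 200.0)  # relevance is 0.25
released = shift(coarse, 150.0, rng)
```

Composing operators, as in the example at the end of the block, is one way to combine obfuscation steps; the cited work analyzes such combinations, and an adversary's ability to undo them, more formally.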

Another obfuscation-based method protects face images shared online and on social media. The targeted identity-protection iterative method (TIP-IM) generates adversarial identity masks: small, iteratively optimized perturbations added to a face image so that face recognition systems match it to a different target identity instead of the real person. The method is evaluated against various face recognition models to measure how well the protection holds up. In this way, TIP-IM can prevent unauthorized parties from identifying people in images and from exploiting the sensitive information linked to them. There is a trade-off between the effectiveness and the naturalness of protected face images: naturalness decreases as protection becomes more effective.
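The core idea behind adversarial identity masks can be sketched with a toy model: repeatedly nudge the image in the direction that moves a recognizer's embedding toward a chosen target identity, while keeping every pixel change within a small budget. In the Python sketch below, the linear "recognizer" `embed`, the squared-distance loss, the step size, and the budget `eps` are all assumptions for illustration; TIP-IM itself works against deep face recognition models and also accounts for the naturalness of the protected image.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def embed(w: Matrix, x: Vector) -> Vector:
    """Toy linear 'face recognizer': the identity embedding is W @ x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def identity_mask(w: Matrix, x: Vector, target: Vector,
                  eps: float = 0.3, step: float = 0.05, iters: int = 20) -> Vector:
    """Targeted iterative sign-gradient perturbation within an L-infinity budget `eps`."""
    adv = list(x)
    for _ in range(iters):
        diff = [e - t for e, t in zip(embed(w, adv), target)]
        # Gradient of ||W x - target||^2 with respect to x is 2 * W^T (W x - target)
        grad = [2.0 * sum(w[i][j] * diff[i] for i in range(len(w))) for j in range(len(x))]
        for j, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            stepped = adv[j] - step * sign                       # move toward the target identity
            stepped = min(max(stepped, x[j] - eps), x[j] + eps)  # stay within the budget
            adv[j] = min(max(stepped, 0.0), 1.0)                 # keep a valid pixel range
    return adv
```

With `W = [[1.0, 0.5, 0.0], [0.0, 1.0, -0.5]]`, a face vector `[0.5, 0.5, 0.5]`, and a target embedding computed from another vector, the returned mask moves the embedding closer to the target while no pixel changes by more than `eps`. A larger `eps` makes the mask more effective but less natural, mirroring the trade-off described above.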

Obfuscation categories

Obfuscation research can be divided into three categories: constructive, empirical, and a combination of constructive and empirical. Mapping obfuscation techniques involves analyzing the data, layout, control, and preventive structures of applications. By diversifying systems and obfuscating data based on system analysis, data scientists and security experts can make it harder for hackers to breach a system's security and privacy settings. Cybersecurity experts use virtualization systems to test the effects of various obfuscation techniques against potential cyber attacks. Different attacks on private information require different diversification and obfuscation methods, so a combination of methods, such as code blocking, location privacy protection, and identity replacement, can be used. Further studies in the field include analysis of diversification methods and tests in different virtual environments such as cloud and trusted computing.
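Layout obfuscation, such as replacing meaningful identifiers with opaque ones, is one of the simplest transformations in this family. The Python sketch below uses the standard `ast` module (`ast.unparse` requires Python 3.9 or later). The class name and naming scheme are hypothetical, and the sketch only handles self-contained code without imports, attribute access, keyword arguments, or `global` statements, which real obfuscators must also handle.

```python
import ast
import builtins


class RenameIdentifiers(ast.NodeTransformer):
    """Layout-obfuscation sketch: replace user-chosen names with opaque ones."""

    def __init__(self) -> None:
        self.mapping: dict = {}

    def _opaque(self, name: str) -> str:
        if hasattr(builtins, name):  # keep built-ins such as sum and len working
            return name
        if name not in self.mapping:
            self.mapping[name] = f"_{len(self.mapping)}"
        return self.mapping[name]

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.FunctionDef:
        node.name = self._opaque(node.name)
        self.generic_visit(node)  # then rename arguments and body
        return node

    def visit_arg(self, node: ast.arg) -> ast.arg:
        node.arg = self._opaque(node.arg)
        self.generic_visit(node)
        return node

    def visit_Name(self, node: ast.Name) -> ast.Name:
        node.id = self._opaque(node.id)
        return node


def obfuscate(source: str) -> str:
    """Return equivalent source code with identifiers renamed."""
    tree = RenameIdentifiers().visit(ast.parse(source))
    return ast.unparse(tree)


SOURCE = """
def average_income(incomes):
    total = sum(incomes)
    return total / len(incomes)

result = average_income([30, 50, 70])
"""
```

Running `obfuscate(SOURCE)` yields code in which `average_income`, `incomes`, `total`, and `result` become `_0` through `_3`, while calls to `sum` and `len` are left intact, so the obfuscated program still computes the same result.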

Olympus: an example of obfuscation technology

One study developed Olympus, a system of obfuscation operators for managing data and protecting individuals' privacy in applications. Olympus aims to preserve the existing data structures and functionality of applications while protecting the personal information uploaded to them. This data usually comes from sensors and is uploaded to the application, where it is analyzed. Through obfuscation operators and combinations of them, an individual's private data can be protected while still being analyzed. Sensitive information categories that are targets for data theft and identity theft, such as SSNs, birth dates, home locations, age, gender, race, and income, are protected. Olympus is an attempt to apply privacy protection to real-world applications. By framing the trade-off between utility requirements and privacy as an adversarial learning problem, Olympus keeps the data usable.

See also

- Obfuscation

- 3D sensor systems

- Spoofing and anti-spoofing

- Face authentication

References

- ^ Bateman, Jon (2020). Deepfakes and Synthetic Media in the Financial System (PDF). Carnegie Endowment for International Peace. JSTOR resrep25783.

- ^ Ardagna, Claudio; Cremonini, Marco; De Capitani di Vimercati, Sabrina; Samarati, Pierangela (January 2011). "An Obfuscation-Based Approach for Protecting Location Privacy". IEEE Transactions on Dependable and Secure Computing. 8 (1): 13–27. CiteSeerX 10.1.1.182.9007. doi:10.1109/TDSC.2009.25. S2CID 105178.

- ^ Degrazia, David (1 June 2005). "Enhancement Technologies and Human Identity". Journal of Medicine and Philosophy. 30 (3): 261–283. doi:10.1080/03605310590960166. PMID 16036459.

- ^ Albakri, Ghazel; Alghowinem, Sharifa (24 April 2019). "The Effectiveness of Depth Data in Liveness Face Authentication Using 3D Sensor Cameras". Sensors. 19 (8). Basel, Switzerland: 1928. Bibcode:2019Senso..19.1928A. doi:10.3390/s19081928. PMC 6515036. PMID 31022904.

- ^ Bryson, Kevin (20 May 2023). "Evaluating Anti-Facial Recognition Tools". physicalsciences.uchicago.edu. Retrieved 27 January 2024.

- ^ Tolosana, Ruben; Vera-Rodriguez, Ruben; Fierrez, Julian; Morales, Aythami; Ortega-Garcia, Javier (18 June 2020). "DeepFakes and Beyond: A Survey of Face Manipulation and Fake Detection". arXiv:2001.00179.

- ^ Winet, Evan (1 January 2012). "Face-Veil Bans and Anti-Mask Laws: State Interests and the Right to Cover the Face". Hastings International and Comparative Law Review. 35 (1): 217.

- ^ Grünenberg, Kristina (24 March 2020). "Wearing Someone Else's Face: Biometric Technologies, Anti-spoofing and the Fear of the Unknown". Ethnos. 87 (2): 223–240. doi:10.1080/00141844.2019.1705869. S2CID 216368036.

- ^ Smith, Hannah; Mansted, Katherine (2020). Weaponised deep fakes. Australian Strategic Policy Institute. JSTOR resrep25129.

- ^ Patel, Keyurkumar; Han, Hu; Jain, Anil K. (October 2016). "Secure Face Unlock: Spoof Detection on Smartphones". IEEE Transactions on Information Forensics and Security. 11 (10): 2268–2283. doi:10.1109/TIFS.2016.2578288. S2CID 10531341.

- ^ Galbally, Javier; Marcel, Sebastien; Fierrez, Julian (2014). "Biometric Antispoofing Methods: A Survey in Face Recognition". IEEE Access. 2: 1530–1552. Bibcode:2014IEEEA...2.1530G. doi:10.1109/ACCESS.2014.2381273. hdl:10486/666327.

- ^ Boulkenafet, Zinelabidine; Akhtar, Zahid; Feng, Xiaoyi; Hadid, Abdenour (2017). "Face Anti-spoofing in Biometric Systems". Biometric Security and Privacy. Signal Processing for Security Technologies. pp. 299–321. doi:10.1007/978-3-319-47301-7_13. ISBN 978-3-319-47300-0.

- ^ Yang, Xiao; Dong, Yinpeng; Pang, Tianyu; Zhu, Jun; Su, Hang (15 March 2020). "Towards Privacy Protection by Generating Adversarial Identity Masks". arXiv:2003.06814.

- ^ Todd, Jennifer (2005). "Social Transformation, Collective Categories, and Identity Change". Theory and Society. 34 (4): 429–463. doi:10.1007/s11186-005-7963-z. hdl:10197/1829. JSTOR 4501731. S2CID 144263474.

- ^ Feng, Yao; Wu, Fan; Shao, Xiaohu; Wang, Yanfeng; Zhou, Xi (2018). Joint 3D Face Reconstruction and Dense Alignment with Position Map Regression Network. Proceedings of the European Conference on Computer Vision. pp. 534–551.

- ^ Sansoni, Giovanna; Trebeschi, Marco; Docchio, Franco (20 January 2009). "State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation". Sensors. 9 (1): 568–601. Bibcode:2009Senso...9..568S. doi:10.3390/s90100568. PMC 3280764. PMID 22389618.

- ^ Jain, A.K.; Pankanti, S.; Bolle, R. (1997). "An identity-authentication system using fingerprints". Proceedings of the IEEE. 85 (9): 1365–1388. CiteSeerX 10.1.1.389.4975. doi:10.1109/5.628674.

- ^ Kahn, Charles M.; Liñares-Zegarra, José M. (August 2016). "Identity Theft and Consumer Payment Choice: Does Security Really Matter?". Journal of Financial Services Research. 50 (1): 121–159. doi:10.1007/s10693-015-0218-x. S2CID 154344806.

- ^ Anderson, Keith B.; Durbin, Erik; Salinger, Michael A. (2008). "Identity Theft". The Journal of Economic Perspectives. 22 (2): 171–192. doi:10.1257/jep.22.2.171. JSTOR 27648247.

- ^ Song, Qing; Wu, Yingqi; Yang, Lu (29 November 2018). "Attacks on State-of-the-Art Face Recognition using Attentional Adversarial Attack Generative Network". arXiv:1811.12026.

- ^ Hosseinzadeh, Shohreh; Rauti, Sampsa; Laurén, Samuel; Mäkelä, Jari-Matti; Holvitie, Johannes; Hyrynsalmi, Sami; Leppänen, Ville (December 2018). "Diversification and obfuscation techniques for software security: A systematic literature review". Information and Software Technology. 104: 72–93. doi:10.1016/j.infsof.2018.07.007.

- ^ Raval, Nisarg; Machanavajjhala, Ashwin; Pan, Jerry (1 January 2019). "Olympus: Sensor Privacy through Utility Aware Obfuscation". Proceedings on Privacy Enhancing Technologies. 2019 (1): 5–25. doi:10.2478/popets-2019-0002.