This article discusses how information theory (a branch of mathematics studying the transmission, processing and storage of information) is related to measure theory (a branch of mathematics related to integration and probability).

Measures in information theory

Many of the concepts in information theory have separate definitions and formulas for continuous and discrete cases. For example, entropy $\mathrm{H}(X)$ is usually defined for discrete random variables, whereas for continuous random variables the related concept of differential entropy, written $h(X)$, is used (see Cover and Thomas, 2006, chapter 8). Both these concepts are mathematical expectations, but the expectation is defined with an integral for the continuous case, and a sum for the discrete case.

These separate definitions can be more closely related in terms of measure theory. For discrete random variables, probability mass functions can be considered density functions with respect to the counting measure. Thinking of both the integral and the sum as integration on a measure space allows for a unified treatment.

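Consider the formula for the differential entropy of a continuous random variable $X$ with range $\mathbb{X}$ and probability density function $f(x)$:

$$h(X) = -\int_{\mathbb{X}} f(x) \log f(x) \,dx.$$

This can usually be interpreted as the following Lebesgue integral:

$$h(X) = -\int_{\mathbb{X}} f(x) \log f(x) \,d\mu(x),$$

where $\mu$ is the Lebesgue measure.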

If instead $X$ is discrete, with range $\Omega$ a finite set, $f$ is a probability mass function on $\Omega$, and $\nu$ is the counting measure on $\Omega$, we can write:

$$\mathrm{H}(X) = -\sum_{x \in \Omega} f(x) \log f(x) = -\int_{\Omega} f(x) \log f(x) \,d\nu(x).$$

The integral expression, and the general concept, are identical to those of the continuous case; the only difference is the measure used. In both cases the probability density function $f$ is the Radon–Nikodym derivative of the probability measure with respect to the measure against which the integral is taken.
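The unified view can be illustrated numerically. The following is a minimal sketch, not a reference implementation: the helper names `integrate` and `entropy_integrand`, the example probability mass function, and the Gaussian density are all assumptions chosen for illustration. A measure is represented crudely as a list of points with weights: weight 1 per point for the counting measure, and a small cell width for a midpoint-rule approximation of the Lebesgue measure.

```python
import math

def integrate(g, points, weights):
    """Approximate the integral of g against a measure given as weighted points."""
    return sum(w * g(x) for x, w in zip(points, weights))

def entropy_integrand(f):
    """Return the function x -> -f(x) log f(x), using the convention 0 log 0 = 0."""
    def g(x):
        p = f(x)
        return -p * math.log(p) if p > 0 else 0.0
    return g

# Discrete case: the counting measure gives weight 1 to every point of Omega.
pmf = {"a": 0.5, "b": 0.25, "c": 0.25}  # hypothetical example distribution
omega = list(pmf)
H = integrate(entropy_integrand(pmf.get), omega, [1.0] * len(omega))

# Continuous case: Lebesgue measure approximated by a midpoint rule on [-40, 40].
sigma = 2.0  # hypothetical standard deviation of a zero-mean Gaussian

def gaussian_pdf(x):
    return math.exp(-x * x / (2 * sigma**2)) / (sigma * math.sqrt(2 * math.pi))

n, lo, hi = 20000, -40.0, 40.0
dx = (hi - lo) / n
grid = [lo + (i + 0.5) * dx for i in range(n)]
h = integrate(entropy_integrand(gaussian_pdf), grid, [dx] * n)

# Known closed form for the differential entropy of a Gaussian, in nats.
h_closed = 0.5 * math.log(2 * math.pi * math.e * sigma**2)
```

The same `integrate` call produces the discrete entropy and an approximation of the differential entropy; only the weights, that is, the measure, change.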

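If $P$ is the probability measure induced by $X$, then the density is $\frac{dP}{d\mu}$ and the integral can also be taken directly with respect to $P$:

$$h(X) = -\int_{\mathbb{X}} \log \frac{dP}{d\mu} \,dP.$$

If instead of the underlying measure $\mu$ we take another probability measure $Q$, we are led to the Kullback–Leibler divergence: let $P$ and $Q$ be probability measures over the same space. Then if $P$ is absolutely continuous with respect to $Q$, written $P \ll Q$, the Radon–Nikodym derivative $\frac{dP}{dQ}$ exists and the Kullback–Leibler divergence can be expressed in its full generality:

$$D_{\mathrm{KL}}(P \parallel Q) = \int_{\operatorname{supp} P} \frac{dP}{dQ} \log \frac{dP}{dQ} \,dQ = \int_{\operatorname{supp} P} \log \frac{dP}{dQ} \,dP,$$

where the integral runs over the support of $P$. Note that we have dropped the negative sign: the Kullback–Leibler divergence is always non-negative due to Gibbs' inequality.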
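On a finite space the Radon–Nikodym derivative reduces to a ratio of probability masses, which makes the definition easy to sketch in code. The function name `kl_divergence` and the two example distributions below are assumptions for illustration. Returning infinity when $P$ is not absolutely continuous with respect to $Q$ is a common convention rather than something stated in the text above.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats for pmfs given as dicts over a common finite space.

    Returns math.inf when P is not absolutely continuous with respect to Q
    (a convention assumed here).
    """
    total = 0.0
    for x, px in p.items():
        if px == 0:
            continue  # the integral runs over the support of P only
        qx = q.get(x, 0.0)
        if qx == 0:
            return math.inf  # P puts mass where Q puts none
        ratio = px / qx  # Radon-Nikodym derivative dP/dQ at the point x
        total += px * math.log(ratio)
    return total

# Hypothetical example distributions on the space {a, b, c}.
p = {"a": 0.5, "b": 0.5, "c": 0.0}
q = {"a": 0.25, "b": 0.25, "c": 0.5}

d_pq = kl_divergence(p, q)  # P << Q holds, so this is finite
d_qp = kl_divergence(q, p)  # Q is not << P, because Q charges "c" and P does not
d_pp = kl_divergence(p, p)  # a distribution has zero divergence from itself
```

The asymmetry between `d_pq` and `d_qp` shows why the absolute continuity condition matters: the divergence is only finite in the direction where the derivative exists.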

Entropy as a "measure"

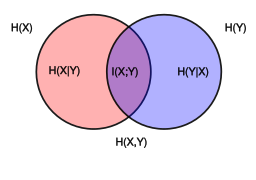

There is an analogy between Shannon's basic "measures" of the information content of random variables and a measure over sets. Namely the joint entropy, conditional entropy, and mutual information can be considered as the measure of a set union, set difference, and set intersection, respectively (Reza pp. 106–108).

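If we associate the existence of abstract sets $\tilde X$ and $\tilde Y$ to arbitrary discrete random variables X and Y, somehow representing the information borne by X and Y, respectively, such that:

- $\mu(\tilde X \cap \tilde Y) = 0$ whenever X and Y are unconditionally independent, and

- $\tilde X = \tilde Y$ whenever X and Y are such that either one is completely determined by the other (i.e. by a bijection);

where $\mu$ is a signed measure over these sets, and we set:

$$\begin{aligned}
\mathrm{H}(X) &= \mu(\tilde X), \\
\mathrm{H}(Y) &= \mu(\tilde Y), \\
\mathrm{H}(X,Y) &= \mu(\tilde X \cup \tilde Y), \\
\mathrm{H}(X \mid Y) &= \mu(\tilde X \setminus \tilde Y), \\
\operatorname{I}(X;Y) &= \mu(\tilde X \cap \tilde Y);
\end{aligned}$$

we find that Shannon's "measure" of information content satisfies all the postulates and basic properties of a formal signed measure over sets, as commonly illustrated in an information diagram. This allows the sum of two measures to be written:

$$\mu(A) + \mu(B) = \mu(A \cup B) + \mu(A \cap B)$$

and the analog of Bayes' theorem ($\mu(A) + \mu(B \setminus A) = \mu(B) + \mu(A \setminus B)$) allows the difference of two measures to be written:

$$\mu(A) - \mu(B) = \mu(A \setminus B) - \mu(B \setminus A)$$

This can be a handy mnemonic device in some situations, e.g.

$$\begin{aligned}
\mathrm{H}(X,Y) &= \mathrm{H}(X) + \mathrm{H}(Y \mid X) &\qquad \mu(\tilde X \cup \tilde Y) &= \mu(\tilde X) + \mu(\tilde Y \setminus \tilde X) \\
\operatorname{I}(X;Y) &= \mathrm{H}(X) - \mathrm{H}(X \mid Y) &\qquad \mu(\tilde X \cap \tilde Y) &= \mu(\tilde X) - \mu(\tilde X \setminus \tilde Y)
\end{aligned}$$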
Note that measures (expectation values of the logarithm) of true probabilities are called "entropy" and generally represented by the letter H, while other measures are often referred to as "information" or "correlation" and generally represented by the letter I. For notational simplicity, the letter I is sometimes used for all measures.
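The set-measure identities of this section can be checked numerically on a small joint distribution. This is a sketch under stated assumptions: the joint probability mass function and the helper names `entropy` and `marginal` are made up for illustration, and each quantity is computed directly from its usual definition, so the identities are tested rather than assumed.

```python
import math
from collections import defaultdict

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as a dict of probabilities."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def marginal(joint, axis):
    """Marginal pmf of one coordinate of a joint pmf keyed by (x, y) tuples."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[outcome[axis]] += p
    return dict(out)

# Hypothetical joint distribution of two dependent binary variables X and Y.
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}
px, py = marginal(joint, 0), marginal(joint, 1)

h_x, h_y, h_xy = entropy(px), entropy(py), entropy(joint)

# Conditional entropies and mutual information, each from its own definition.
h_y_given_x = -sum(p * math.log2(p / px[x]) for (x, y), p in joint.items() if p > 0)
h_x_given_y = -sum(p * math.log2(p / py[y]) for (x, y), p in joint.items() if p > 0)
i_xy = sum(p * math.log2(p / (px[x] * py[y])) for (x, y), p in joint.items() if p > 0)

# mu(A) + mu(B) = mu(A union B) + mu(A intersect B)
sum_rule_gap = (h_x + h_y) - (h_xy + i_xy)
# mu(A union B) = mu(A) + mu(B minus A)
union_gap = h_xy - (h_x + h_y_given_x)
# mu(A intersect B) = mu(A) - mu(A minus B)
intersection_gap = i_xy - (h_x - h_x_given_y)
```

All three gaps should vanish up to floating-point rounding, which is the numerical counterpart of reading the identities off an information diagram.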

Multivariate mutual information

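Main article: Multivariate mutual information

Certain extensions to the definitions of Shannon's basic measures of information are necessary to deal with the σ-algebra generated by the sets that would be associated to three or more arbitrary random variables. (See Reza pp. 106–108 for an informal but rather complete discussion.) Namely, $\mathrm{H}(X,Y,Z,\cdots)$ needs to be defined in the obvious way as the entropy of a joint distribution, and a multivariate mutual information $\operatorname{I}(X;Y;Z;\cdots)$ defined in a suitable manner so that we can set:

$$\begin{aligned}
\mathrm{H}(X,Y,Z,\cdots) &= \mu(\tilde X \cup \tilde Y \cup \tilde Z \cup \cdots), \\
\operatorname{I}(X;Y;Z;\cdots) &= \mu(\tilde X \cap \tilde Y \cap \tilde Z \cap \cdots);
\end{aligned}$$

in order to define the (signed) measure over the whole σ-algebra. There is no single universally accepted definition for the multivariate mutual information, but the one that corresponds here to the measure of a set intersection is due to Fano (1966, pp. 57–59). The definition is recursive. As a base case the mutual information of a single random variable is defined to be its entropy: $\operatorname{I}(X) = \mathrm{H}(X)$. Then for $n \geq 2$ we set

$$\operatorname{I}(X_1; \cdots; X_n) = \operatorname{I}(X_1; \cdots; X_{n-1}) - \operatorname{I}(X_1; \cdots; X_{n-1} \mid X_n),$$

where the conditional mutual information is defined as

$$\operatorname{I}(X_1; \cdots; X_{n-1} \mid X_n) = \mathbb{E}_{X_n}\bigl(\operatorname{I}(X_1; \cdots; X_{n-1}) \mid X_n\bigr).$$

The first step in the recursion yields Shannon's definition $\operatorname{I}(X_1; X_2) = \mathrm{H}(X_1) - \mathrm{H}(X_1 \mid X_2)$. The multivariate mutual information (same as interaction information but for a change in sign) of three or more random variables can be negative as well as positive: let X and Y be two independent fair coin flips, and let Z be their exclusive or. Then $\operatorname{I}(X;Y;Z) = -1$ bit.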
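The exclusive-or example can be verified directly. The sketch below computes the three-way quantity in two ways: by one step of the recursion, $\operatorname{I}(X;Y;Z) = \operatorname{I}(X;Y) - \operatorname{I}(X;Y \mid Z)$, and by inclusion-exclusion over joint entropies, which is what a signed measure of a triple intersection would give. The helper names `entropy`, `marginal` and `H` are assumptions for illustration.

```python
import math
from itertools import product

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as a dict of probabilities."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)

def marginal(joint, axes):
    """Marginal pmf over the chosen coordinate positions of a joint pmf."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[a] for a in axes)
        out[key] = out.get(key, 0.0) + p
    return out

# X and Y are independent fair coin flips; Z is their exclusive or.
joint = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}

def H(*axes):
    """Joint entropy of the variables at the given positions (0=X, 1=Y, 2=Z)."""
    return entropy(marginal(joint, axes))

# One step of the recursion: I(X;Y;Z) = I(X;Y) - I(X;Y|Z).
i_xy = H(0) + H(1) - H(0, 1)
i_xy_given_z = H(0, 2) + H(1, 2) - H(0, 1, 2) - H(2)
i_xyz = i_xy - i_xy_given_z

# Inclusion-exclusion over the three sets gives the same signed value.
i_xyz_incl_excl = (H(0) + H(1) + H(2)
                   - H(0, 1) - H(0, 2) - H(1, 2)
                   + H(0, 1, 2))
```

Here X and Y share no information on their own, yet become fully dependent once Z is known, so the conditional term exceeds the unconditional one and the result is negative.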

Many other variations are possible for three or more random variables: for example, $\operatorname{I}(X,Y;Z)$ is the mutual information of the joint distribution of X and Y relative to Z, and can be interpreted as $\mu((\tilde X \cup \tilde Y) \cap \tilde Z)$. Many more complicated expressions can be built this way, and still have meaning, e.g. $\operatorname{I}(X,Y;Z \mid W)$ or $\mathrm{H}(X,Z \mid W,Y)$.
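As a worked illustration of how such expressions can be manipulated, the set picture recovers the chain rule for mutual information. The decomposition below splits the set into two disjoint pieces and uses only additivity of the measure; identifying the second piece with a conditional mutual information follows the same correspondence as for conditional entropy and is stated here as an assumption of the sketch.

```latex
\begin{aligned}
\operatorname{I}(X,Y;Z)
  &= \mu\bigl((\tilde X \cup \tilde Y) \cap \tilde Z\bigr) \\
  &= \mu(\tilde X \cap \tilde Z)
     + \mu\bigl((\tilde Y \setminus \tilde X) \cap \tilde Z\bigr) \\
  &= \operatorname{I}(X;Z) + \operatorname{I}(Y;Z \mid X).
\end{aligned}
```

For two independent fair coin flips X and Y with Z their exclusive or, the first term is 0 bits and the second is 1 bit, so $\operatorname{I}(X,Y;Z) = 1$ bit even though the three-way quantity $\operatorname{I}(X;Y;Z)$ is negative.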

References

- Thomas M. Cover and Joy A. Thomas. Elements of Information Theory, second edition, 2006. New Jersey: Wiley and Sons. ISBN 978-0-471-24195-9.

- Fazlollah M. Reza. An Introduction to Information Theory. New York: McGraw–Hill 1961. New York: Dover 1994. ISBN 0-486-68210-2

- Fano, R. M. (1966), Transmission of Information: a statistical theory of communications, MIT Press, ISBN 978-0-262-56169-3, OCLC 804123877

- R. W. Yeung, "On entropy, information inequalities, and Groups."
