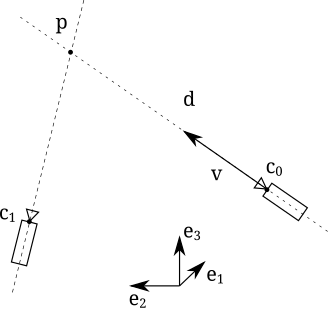

In computer vision, the inverse depth parametrization is a parametrization used in methods for 3D reconstruction from multiple images such as simultaneous localization and mapping (SLAM). Given a point $\mathbf{p}$ in 3D space observed by a monocular pinhole camera from multiple views, the inverse depth parametrization of the point's position is a 6D vector that encodes the optical centre $\mathbf{c}_0$ of the camera when it first observed the point, and the position of the point along the ray passing through $\mathbf{p}$ and $\mathbf{c}_0$.

Inverse depth parametrization generally improves numerical stability and allows points with zero parallax to be represented. Moreover, the error associated with the observation of the point's position can be modelled with a Gaussian distribution when expressed in inverse depth. This is an important property required to apply methods, such as Kalman filters, that assume normality of the measurement error distribution. The major drawback is the larger memory consumption, since the dimensionality of the point's representation is doubled from three to six.

Definition

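Given a 3D point $\mathbf{p}$ with world coordinates $(x, y, z)$ in a reference frame, observed from different views, the inverse depth parametrization $\mathbf{y}$ of $\mathbf{p}$ is given by:

$$\mathbf{y} = (x_0, y_0, z_0, \theta, \phi, \rho)$$

where the first five components encode the camera pose in the first observation of the point: $\mathbf{c}_0 = (x_0, y_0, z_0)$ is the optical centre, $\theta$ the azimuth, $\phi$ the elevation angle, and $\rho = 1 / \lVert \mathbf{p} - \mathbf{c}_0 \rVert$ the inverse depth of $\mathbf{p}$ at the first observation.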
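To make the definition concrete, the following minimal sketch converts between a Euclidean point and a 6D inverse depth vector. The definition above does not fix an angle convention, so the sketch assumes the one used by Civera et al. (2008), in which the unit ray direction is $(\cos\phi \sin\theta, -\sin\phi, \cos\phi \cos\theta)$ for azimuth $\theta$ and elevation $\phi$. The function names `direction`, `to_inverse_depth` and `to_euclidean` are hypothetical and chosen only for illustration.

```python
import math


def direction(theta, phi):
    """Unit ray direction for azimuth theta and elevation phi.

    Assumed convention (after Civera et al. 2008):
    m = (cos(phi) sin(theta), -sin(phi), cos(phi) cos(theta)).
    """
    return (
        math.cos(phi) * math.sin(theta),
        -math.sin(phi),
        math.cos(phi) * math.cos(theta),
    )


def to_inverse_depth(p, c0):
    """Encode point p, first seen from optical centre c0, as a 6D vector."""
    dx, dy, dz = (p[i] - c0[i] for i in range(3))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist == 0.0:
        raise ValueError("point coincides with the optical centre")
    theta = math.atan2(dx, dz)                 # azimuth
    phi = math.atan2(-dy, math.hypot(dx, dz))  # elevation
    rho = 1.0 / dist                           # inverse depth
    return (c0[0], c0[1], c0[2], theta, phi, rho)


def to_euclidean(y):
    """Recover the 3D point: p = c0 + (1 / rho) * m(theta, phi)."""
    x0, y0, z0, theta, phi, rho = y
    if rho == 0.0:
        # rho = 0 encodes a point at infinity; it has a direction
        # but no finite Euclidean position.
        raise ValueError("point at infinity has no finite position")
    m = direction(theta, phi)
    return tuple(c + mi / rho for c, mi in zip((x0, y0, z0), m))


if __name__ == "__main__":
    c0 = (0.5, 0.0, -1.0)
    p = (1.0, -2.0, 5.0)
    y = to_inverse_depth(p, c0)
    print(y)
    print(to_euclidean(y))  # should reproduce p up to rounding error
```

In this sketch a point at infinity (zero parallax) is encoded as $\rho = 0$, a finite value in the 6D vector even though the point has no finite Euclidean coordinates.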

Bibliography

- Montiel, J. M. M.; Civera, Javier; Davison, Andrew J. (2006). "Unified Inverse Depth Parametrization for Monocular SLAM". In Sukhatme, Gaurav S.; Schaal, Stefan; Burgard, Wolfram; Fox, Dieter (eds.). Robotics: Science and Systems II, August 16-19, 2006. University of Pennsylvania, Philadelphia, Pennsylvania, USA. The MIT Press. doi:10.15607/RSS.2006.II.011.

- Civera, Javier; Davison, Andrew J; Montiel, JM Martínez (2008). "Inverse depth parametrization for monocular SLAM". IEEE Transactions on Robotics. 24 (5). IEEE: 932–945. CiteSeerX 10.1.1.175.1380. doi:10.1109/TRO.2008.2003276. S2CID 345360.

- Piniés, Pedro; Lupton, Todd; Sukkarieh, Salah; Tardós, Juan D (2007). "Inertial Aiding of Inverse Depth SLAM using a Monocular Camera". Proceedings 2007 IEEE International Conference on Robotics and Automation. IEEE. pp. 2797–2802. doi:10.1109/ROBOT.2007.363895. ISBN 978-1-4244-0602-9. S2CID 10474338.

- Sunderhauf, Niko; Lange, Sven; Protzel, Peter (2007). "Using the unscented Kalman filter in mono-SLAM with inverse depth parametrization for autonomous airship control". 2007 IEEE International Workshop on Safety, Security and Rescue Robotics. IEEE: 1–6.
