Multimodal search is a type of search that combines different methods to retrieve relevant results. It can draw on any kind of search: by keyword, by concept, by example, and so on.

Introduction

A multimodal search engine is designed to imitate the flexibility and agility with which the human mind creates and processes ideas and discards irrelevant ones. The more input elements the search engine has to compare, the more accurate the results can be. Multimodal search engines use inputs of different kinds and several search methods at the same time, and can combine the results by merging all the input elements of the search. Some engines also use feedback on the results, based on the user's evaluation, to perform a more appropriate and relevant search.

Mobile devices have developed to the point where they can perform a wide range of functions from any place at any time, thanks to internet and GPS connections. Touch screens, motion sensors and voice recognition are now common features of smartphones. Together, these features make it possible to run multimodal searches from almost anywhere at any time.

Search elements

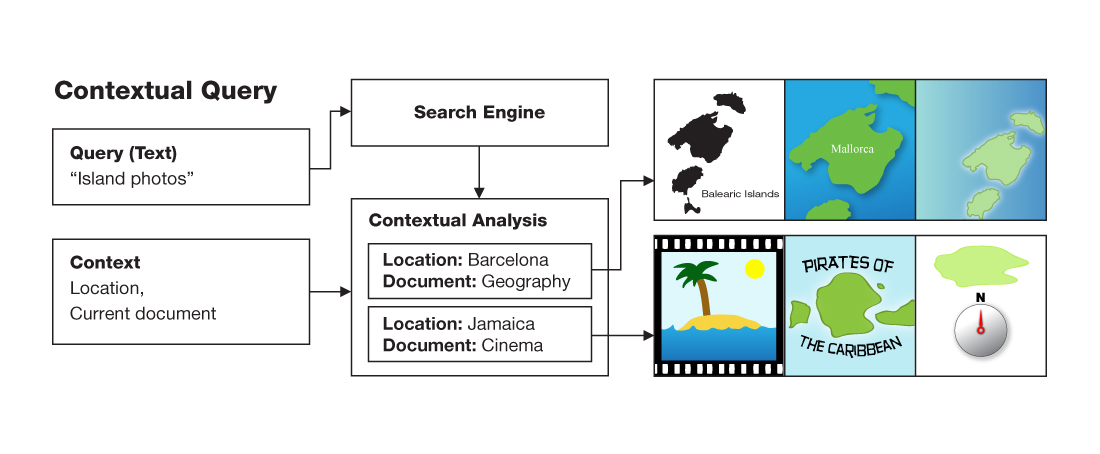

Text is one option for input, alongside multimedia search by image, video, audio and voice. Even the user's location can help the search engine perform a more effective search, adapted to each situation. New ways of interacting with a search engine continue to emerge, both in the input elements of the search and in the variety of results obtained.

Personal context

Many queries from mobile devices are location-based: they use the user's location to interact with applications (location-based services, LBS). If available, the browser uses the device GPS; otherwise it computes an approximate location from cell tower triangulation. In either case this requires the permission of the user, who must agree to share their location with the application when downloading it.
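The fallback described above can be sketched in a few lines. This is only an illustration: the `Location` class, the `resolve_location` function and its parameters are hypothetical names, and a real application would obtain the GPS fix and the cell-based estimate from the platform's own location services.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude)


@dataclass
class Location:
    latitude: float
    longitude: float
    source: str  # "gps" or "cell" (hypothetical labels)


def resolve_location(permission_granted: bool,
                     gps_fix: Optional[Coordinates],
                     cell_estimate: Optional[Coordinates]) -> Optional[Location]:
    """Pick the best available location, or None if none may be used."""
    # Without the user's permission, no location is used at all.
    if not permission_granted:
        return None
    # Prefer the device GPS when a fix is available.
    if gps_fix is not None:
        return Location(gps_fix[0], gps_fix[1], "gps")
    # Otherwise fall back to the approximate cell-tower estimate.
    if cell_estimate is not None:
        return Location(cell_estimate[0], cell_estimate[1], "cell")
    return None
```

A search engine could then attach the returned location, when there is one, to the rest of the query as part of the user's context.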

Multimodal searches therefore use not only the audiovisual content that the user provides directly, but also the user's context, such as location, language, current time, the web site or document being browsed, or other elements that can help improve a search in each situation.

Classification of the results

The multimodal search engine processes its inputs in parallel. For every element, whether introduced directly or indirectly (personal context), it performs a search that ranks results from most to least relevant. It then combines all the results, merging each element according to the weight associated with each descriptor.
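One simple way to picture this merging step is a weighted linear combination of per-modality scores. The sketch below is an assumption made for illustration, not the method of any particular engine: the function name `fuse`, the modality names, and the score values are all hypothetical, and scores are assumed to lie on a comparable scale (for example 0 to 1).

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def fuse(results_by_modality: Dict[str, Dict[str, float]],
         weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Merge per-modality relevance scores into one ranked list."""
    total = sum(weights[m] for m in results_by_modality)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    fused: Dict[str, float] = defaultdict(float)
    for modality, scores in results_by_modality.items():
        w = weights[modality] / total  # normalise so the weights sum to 1
        for doc_id, score in scores.items():
            # A document missing from one modality simply gets no
            # contribution from that modality.
            fused[doc_id] += w * score

    # Most relevant first.
    return sorted(fused.items(), key=lambda pair: pair[1], reverse=True)


# Hypothetical scores from a text search and an image search.
results = {
    "text": {"a": 0.9, "b": 0.4},
    "image": {"b": 0.8, "c": 0.6},
}
image_heavy = fuse(results, {"text": 1.0, "image": 3.0})
text_heavy = fuse(results, {"text": 3.0, "image": 1.0})
```

With the image input weighted more heavily, document `b` ranks first; with the text input weighted more heavily, document `a` does. This shows how the choice of weights changes the final ordering.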

The engine analyzes every element and tags it, so that the tags can be compared with existing information indexed in databases. The results are then classified and shown from most to least relevant.
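As a rough illustration of comparing tags with indexed information, the sketch below ranks indexed items by the overlap between their tags and the query's tags. The Jaccard measure is chosen here only because it is simple; the text does not specify which comparison an engine uses, and the index contents are invented for the example.

```python
from typing import Dict, Iterable, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by the size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_by_tags(query_tags: Iterable[str],
                 index: Dict[str, Set[str]]) -> List[Tuple[str, float]]:
    """Score every indexed item against the query tags, best match first."""
    query = set(query_tags)
    scored = [(item_id, jaccard(query, tags)) for item_id, tags in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Hypothetical index: item identifier -> tags stored for that item.
index = {
    "img1": {"beach", "sunset"},
    "img2": {"city", "barcelona", "night"},
    "img3": {"forest"},
}
ranking = rank_by_tags({"beach", "sunset", "barcelona"}, index)
```

Here `img1` shares two of the three query tags and ranks first, `img2` shares one, and `img3` shares none.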

The importance of every input element must be defined. Some search engines do this automatically, while others let the user do it manually by giving more or less weight to each element of the search. It is also important that the user provides only the appropriate and essential information for the search, because too much information can confuse the system and lead to unsatisfactory results. Multimodal searches can give better results than a simple search, but they must process more input information, which can take more time and require more memory.

An efficient search engine interprets the user's query, infers the intent behind it and applies a suitable search strategy. In other words, the engine adapts to each input query and to the combination of elements and methods.

Applications

Existing multimodal search engines are not very complex, and some of them are still in an experimental phase. Among the simpler engines are Google Images and Bing, web interfaces that take text and images as inputs and return images as output.

MMRetrieval is an experimental multimodal search engine that uses multilingual and multimedia information through a web interface. The engine searches the different inputs in parallel and merges all the results using one of several selectable methods. It also offers several multistage retrieval options, as well as a single text index baseline, so that the different phases of the search can be compared.

Many applications for mobile devices use the context of the user, as in location-based services, together with text, images, audio or video that the user provides on the spot or from saved files, or even through voice interaction.

References

- Query-Adaptive Fusion for Multimodal Search, Lyndon Kennedy, Student Member IEEE, Shih-Fu Chang, Fellow IEEE, and Apostol Natsev

- Context-aware Querying for Multimodal Search Engines, Jonas Etzold, Arnaud Brousseau, Paul Grimm and Thomas Steiner (archived 2012-04-15 at the Wayback Machine)

- Apply Multimodal Search and Relevance Feedback In a Digital Video Library, Thesis of Yu Zhong

- Aplicació rica d’internet per a la consulta amb text i imatge al repositori de vídeos de la Corporació Catalana de Mitjans Audiovisuals, Ramon Salla, Universitat Politècnica de Catalunya