Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories (parts of speech) and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.

Definition and examples

Phrase structure rules are usually of the following form:

- A → B C

meaning that the constituent A is separated into the two subconstituents B and C. Some examples for English are as follows:

- S → NP VP

- NP → (Det) N

- N → (AP) N (PP)

The first rule reads: An S (sentence) consists of an NP (noun phrase) followed by a VP (verb phrase). The second rule reads: A noun phrase consists of an optional Det (determiner) followed by an N (noun). The third rule means that an N (noun) can be preceded by an optional AP (adjective phrase) and followed by an optional PP (prepositional phrase). The round brackets indicate optional constituents.
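The three rules as read out above can be written down as plain data. The following is a minimal sketch in Python, not a standard notation: the names `RULES` and `expansions` and the `(name, optional)` encoding are assumptions made for illustration. It lists every concrete right-hand side that results from keeping or dropping each bracketed (optional) constituent.

```python
from itertools import product

# Each rule maps a left-hand category to its right-hand side.
# A symbol is encoded as (name, optional); "optional" mirrors the round brackets.
# This encoding is an illustrative assumption, not an established format.
RULES = {
    "S":  [("NP", False), ("VP", False)],
    "NP": [("Det", True), ("N", False)],
    "N":  [("AP", True), ("N", False), ("PP", True)],
}

def expansions(rhs):
    """Return every concrete right-hand side obtained by keeping or dropping each optional symbol."""
    # For an optional symbol there are two choices: keep it, or contribute nothing.
    choices = [[(name,), ()] if optional else [(name,)] for name, optional in rhs]
    # Concatenate the chosen pieces of each combination into one tuple of category names.
    return [sum(combo, ()) for combo in product(*choices)]

for lhs, rhs in RULES.items():
    for alternative in expansions(rhs):
        print(lhs, "->", " ".join(alternative))
```

A rule with two optional constituents thus stands for four concrete rules, which is the economy the bracket notation provides.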

Beginning with the sentence symbol S, and applying the phrase structure rules successively, finally applying replacement rules to substitute actual words for the abstract symbols, it is possible to generate many proper sentences of English (or whichever language the rules are specified for). If the rules are correct, then any sentence produced in this way ought to be grammatically (syntactically) correct. It is also to be expected that the rules will generate syntactically correct but semantically nonsensical sentences, such as the following well-known example:

- Colorless green ideas sleep furiously.

This sentence was constructed by Noam Chomsky as an illustration that phrase structure rules are capable of generating syntactically correct but semantically incorrect sentences. Phrase structure rules break sentences down into their constituent parts. These constituents are often represented as tree structures (dendrograms). The tree for Chomsky's sentence can be rendered as follows:

A constituent is any word or combination of words that is dominated by a single node. Thus each individual word is a constituent. Further, the subject NP Colorless green ideas, the minor NP green ideas, and the VP sleep furiously are constituents. Phrase structure rules and the tree structures that are associated with them are a form of immediate constituent analysis.
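The definition of a constituent as whatever a single node dominates can be made concrete with a small sketch. The nested-tuple encoding, the node labels (`AP`, `A`, `AdvP`, `Adv`) and the function names below are assumptions chosen for illustration; only the NP and VP groupings are taken from the text above.

```python
# A node is (label, child, child, ...); a leaf is (category, word).
# The labels are illustrative assumptions, not the labels of any particular published tree.
TREE = (
    "S",
    ("NP",
        ("AP", ("A", "Colorless")),
        ("NP",
            ("AP", ("A", "green")),
            ("N", "ideas"))),
    ("VP",
        ("V", "sleep"),
        ("AdvP", ("Adv", "furiously"))),
)

def words(node):
    """Return the words dominated by a node, from left to right."""
    label, *children = node
    if len(children) == 1 and isinstance(children[0], str):
        return [children[0]]  # leaf: a category directly over a word
    return [w for child in children for w in words(child)]

def constituents(node):
    """Yield (label, word string) for every node; each node dominates exactly one constituent."""
    label, *children = node
    yield label, " ".join(words(node))
    for child in children:
        if not isinstance(child, str):
            yield from constituents(child)

for label, text in constituents(TREE):
    print(f"{label:5} {text}")
```

Under this encoding a string such as "ideas sleep" never appears in the output, because no single node dominates exactly those two words.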

In transformational grammar, systems of phrase structure rules are supplemented by transformation rules, which act on an existing syntactic structure to produce a new one (performing such operations as negation, passivization, etc.). These transformations are not strictly required for generation, as the sentences they produce could be generated by a suitably expanded system of phrase structure rules alone, but transformations provide greater economy and enable significant relations between sentences to be reflected in the grammar.

Top down

An important aspect of phrase structure rules is that they view sentence structure from the top down. The category on the left of the arrow is a greater constituent and the immediate constituents to the right of the arrow are lesser constituents. Constituents are successively broken down into their parts as one moves down a list of phrase structure rules for a given sentence. This top-down view of sentence structure stands in contrast to much work done in modern theoretical syntax. In Minimalism for instance, sentence structure is generated from the bottom up. The operation Merge merges smaller constituents to create greater constituents until the greatest constituent (i.e. the sentence) is reached. In this regard, theoretical syntax abandoned phrase structure rules long ago, although their importance for computational linguistics seems to remain intact.
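The contrast between the two directions can be sketched in a few lines. The toy grammar, the lexicon and the function names below are hypothetical, and `merge` is only a rough stand-in for the Minimalist operation (it takes the label as an argument, which the actual theory does not). The first function rewrites categories from S downward; the second assembles the same structure from the words upward.

```python
# Hypothetical toy grammar and lexicon, chosen only for illustration.
RULES = {
    "S": ["NP", "VP"],
    "NP": ["A", "N"],
    "VP": ["V", "Adv"],
}
LEXICON = {"A": "green", "N": "ideas", "V": "sleep", "Adv": "furiously"}

def derive_top_down(start="S"):
    """Rewrite the leftmost phrasal category until only lexical categories remain, then insert words."""
    line = [start]
    steps = [list(line)]
    while any(symbol in RULES for symbol in line):
        i = next(k for k, symbol in enumerate(line) if symbol in RULES)
        line[i:i + 1] = RULES[line[i]]  # replace the category by its immediate constituents
        steps.append(list(line))
    steps.append([LEXICON[symbol] for symbol in line])  # lexical insertion as the final step
    return steps

def merge(label, left, right):
    """Combine two already-built constituents into a greater one (simplified stand-in for Merge)."""
    return (label, left, right)

def build_bottom_up():
    """Start from the words and combine them until the sentence node is reached."""
    np = merge("NP", ("A", "green"), ("N", "ideas"))
    vp = merge("VP", ("V", "sleep"), ("Adv", "furiously"))
    return merge("S", np, vp)

for step in derive_top_down():
    print(" ".join(step))
print(build_bottom_up())
```

Both routes end with the same grouping of words; they differ only in whether the sentence node is the first or the last thing to be created.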

Alternative approaches

Constituency vs. dependency

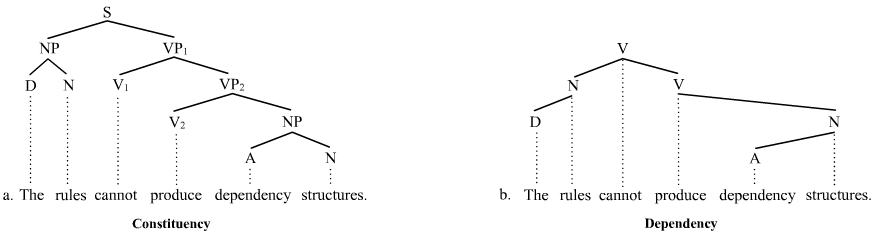

Phrase structure rules as they are commonly employed result in a view of sentence structure that is constituency-based. Thus, grammars that employ phrase structure rules are constituency grammars (= phrase structure grammars), as opposed to dependency grammars, which view sentence structure as dependency-based. What this means is that for phrase structure rules to be applicable at all, one has to pursue a constituency-based understanding of sentence structure. The constituency relation is a one-to-one-or-more correspondence. For every word in a sentence, there is at least one node in the syntactic structure that corresponds to that word. The dependency relation, in contrast, is a one-to-one relation; for every word in the sentence, there is exactly one node in the syntactic structure that corresponds to that word. The distinction is illustrated with the following trees:

The constituency tree on the left could be generated by phrase structure rules. The sentence S is broken down into smaller and smaller constituent parts. The dependency tree on the right could not, in contrast, be generated by phrase structure rules (at least not as they are commonly interpreted).
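The difference in the number of nodes can be checked on a small example. The sketch below does not reproduce the trees in the figure; the sentence, the labels and the two encodings are assumptions made for illustration. A constituency structure is encoded as nested tuples, a dependency structure as a mapping from each word to its head.

```python
# Constituency: nested (label, children...) tuples; leaves are (category, word).
CONSTITUENCY = (
    "S",
    ("NP", ("A", "green"), ("N", "ideas")),
    ("VP", ("V", "sleep"), ("Adv", "furiously")),
)

# Dependency: one entry (node) per word, pointing to its head; the root has head None.
DEPENDENCY = {
    "green": "ideas",
    "ideas": "sleep",
    "furiously": "sleep",
    "sleep": None,
}

def count_nodes(node):
    """Count all nodes of a constituency tree, phrasal and lexical."""
    label, *children = node
    if len(children) == 1 and isinstance(children[0], str):
        return 1  # lexical node directly over a word
    return 1 + sum(count_nodes(child) for child in children)

word_count = len(DEPENDENCY)
print("words:", word_count)
print("constituency nodes:", count_nodes(CONSTITUENCY))
print("dependency nodes:", len(DEPENDENCY))
```

In this toy case the constituency structure has more nodes than words, because the phrasal nodes come on top of the lexical ones, while the dependency structure has exactly as many nodes as words.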

Representational grammars

A number of representational phrase structure theories of grammar never acknowledged phrase structure rules, but have pursued instead an understanding of sentence structure in terms of the notion of schema. Here phrase structures are not derived from rules that combine words, but from the specification or instantiation of syntactic schemata or configurations, often expressing some kind of semantic content independently of the specific words that appear in them. This approach is essentially equivalent to a system of phrase structure rules combined with a noncompositional semantic theory, since grammatical formalisms based on rewriting rules are generally equivalent in power to those based on substitution into schemata.

So in this type of approach, instead of being derived from the application of a number of phrase structure rules, the sentence Colorless green ideas sleep furiously would be generated by filling the words into the slots of a schema having the following structure:

- [NP[ADJ N] VP[V] AP[ADV]]

This schema would express the following conceptual content:

- X DOES Y IN THE MANNER OF Z

Though they are non-compositional, such models are monotonic. This approach is highly developed within Construction Grammar and has had some influence in Head-Driven Phrase Structure Grammar and Lexical Functional Grammar, the latter two clearly qualifying as phrase structure grammars.

See also

- Constituent

- Dependency grammar

- Discontinuous-constituent phrase structure grammar

- Immediate constituent analysis

- Non-configurational language

- Phrase

- Phrase structure grammar

- Syntactic category

Notes

- For general discussions of phrase structure rules, see for instance Borsley (1991:34ff.), Brinton (2000:165), Falk (2001:46ff.).

- Dependency grammars are associated above all with the work of Lucien Tesnière (1959).

- See for instance Chomsky (1995).

- The most comprehensive source on dependency grammar is Ágel et al. (2003/6).

- Concerning Construction Grammar, see Goldberg (2006).

- Concerning Head-Driven Phrase Structure Grammar, see Pollard and Sag (1994).

- Concerning Lexical Functional Grammar, see Bresnan (2001).

References

- Ágel, V., Ludwig Eichinger, Hans-Werner Eroms, Peter Hellwig, Hans Heringer, and Hennig Lobin (eds.) 2003/6. Dependency and Valency: An International Handbook of Contemporary Research. Berlin: Walter de Gruyter.

- Borsley, R. 1991. Syntactic theory: A unified approach. London: Edward Arnold.

- Bresnan, Joan 2001. Lexical Functional Syntax.

- Brinton, L. 2000. The structure of modern English. Amsterdam: John Benjamins Publishing Company.

- Carnie, A. 2013. Syntax: A Generative Introduction, 3rd edition. Oxford: Blackwell Publishing.

- Chomsky, N. 1957. Syntactic Structures. The Hague/Paris: Mouton.

- Chomsky, N. 1995. The Minimalist Program. Cambridge, Mass.: The MIT Press.

- Falk, Y. 2001. Lexical-Functional Grammar: An introduction to parallel constraint-based syntax. Stanford, CA: CSLI Publications.

- Goldberg, A. 2006. Constructions at Work: The Nature of Generalization in Language. Oxford University Press.

- Pollard, C. and I. Sag 1994. Head-driven phrase structure grammar. Chicago: University of Chicago Press.

- Tesnière, L. 1959. Éléments de syntaxe structurale. Paris: Klincksieck.
