Quadratic unconstrained binary optimization (QUBO), also known as unconstrained binary quadratic programming (UBQP), is a combinatorial optimization problem with a wide range of applications from finance and economics to machine learning. QUBO is an NP-hard problem, and embeddings into QUBO have been formulated for many classical problems from theoretical computer science, such as maximum cut, graph coloring and the partition problem. Embeddings for machine learning models include support-vector machines, clustering and probabilistic graphical models. Moreover, due to its close connection to Ising models, QUBO constitutes a central problem class for adiabatic quantum computation, where it is solved through a physical process called quantum annealing.

Definition

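The set of binary vectors of a fixed length $n > 0$ is denoted by $\mathbb{B}^n$, where $\mathbb{B} = \{0, 1\}$ is the set of binary values (or bits). We are given a real-valued upper triangular matrix $Q \in \mathbb{R}^{n \times n}$, whose entries $Q_{ij}$ define a weight for each pair of indices $i, j \in \{1, \dots, n\}$ within the binary vector. We can define a function $f_Q : \mathbb{B}^n \to \mathbb{R}$ that assigns a value to each binary vector through

$$f_Q(x) = x^\top Q x = \sum_{i=1}^{n} \sum_{j=i}^{n} Q_{ij} x_i x_j .$$

Intuitively, the weight $Q_{ij}$ is added if both $x_i$ and $x_j$ have value 1. When $i = j$, the values $Q_{ii}$ are added if $x_i = 1$, as $x_i x_i = x_i$ for all $x_i \in \mathbb{B}$.

The QUBO problem consists of finding a binary vector $x^*$ that is minimal with respect to $f_Q$, namely

$$\forall x \in \mathbb{B}^n : \quad f_Q(x^*) \le f_Q(x) .$$

In general, $x^*$ is not unique, meaning there may be a set of minimizing vectors with equal value with respect to $f_Q$. The complexity of QUBO arises from the number of candidate binary vectors to be evaluated, as $|\mathbb{B}^n| = 2^n$ grows exponentially in $n$.

Sometimes, QUBO is defined as the problem of maximizing $f_Q$, which is equivalent to minimizing $f_{-Q} = -f_Q$.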
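The definition can be made concrete with a small exhaustive search. The following is only a minimal sketch: the helper names `qubo_value` and `brute_force_qubo` and the 3-variable matrix `Q_example` are made up for illustration, and checking all $2^n$ candidates is feasible only for very small $n$.

```python
from itertools import product


def qubo_value(Q, x):
    """Evaluate f_Q(x) = sum over i <= j of Q[i][j] * x[i] * x[j] (Q upper triangular)."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


def brute_force_qubo(Q):
    """Return the minimum value and all minimizing vectors by checking all 2^n candidates."""
    n = len(Q)
    best_value, minimizers = None, []
    for x in product((0, 1), repeat=n):
        value = qubo_value(Q, x)
        if best_value is None or value < best_value:
            best_value, minimizers = value, [x]
        elif value == best_value:
            minimizers.append(x)  # the optimum need not be unique
    return best_value, minimizers


# Hypothetical 3-variable instance; the weights are chosen only for illustration.
Q_example = [
    [-1, 2, 0],
    [0, -1, 2],
    [0, 0, -1],
]
best_value, minimizers = brute_force_qubo(Q_example)
```

For this instance the search returns the value -2 with the single minimizer (1, 0, 1): the positive off-diagonal weights penalize setting neighboring bits to 1 together.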

Properties

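QUBO is scale invariant for positive factors $\alpha > 0$, which leave the optimum $x^*$ unchanged:

$$f_{\alpha Q}(x) = \sum_{i=1}^{n} \sum_{j=i}^{n} (\alpha Q_{ij}) x_i x_j = \alpha \sum_{i=1}^{n} \sum_{j=i}^{n} Q_{ij} x_i x_j = \alpha f_Q(x) .$$

In its general form, QUBO is NP-hard, so no polynomial-time algorithm for it is known, and none exists unless P = NP. However, there are polynomially solvable special cases, where $Q$ has certain properties, for example: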

- If all coefficients are positive, the optimum is trivially $x^* = (0, \dots, 0)^\top$. Similarly, if all coefficients are negative, the optimum is $x^* = (1, \dots, 1)^\top$.

- If $Q$ is diagonal, the bits can be optimized independently, and the problem is solvable in $\mathcal{O}(n)$. The optimal variable assignments are simply $x_i^* = 1$ if $Q_{ii} < 0$, and $x_i^* = 0$ otherwise.

- If all off-diagonal elements of $Q$ are non-positive, the corresponding QUBO problem is solvable in polynomial time.
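The diagonal case from the list above can be sketched in a few lines. The function name `solve_diagonal_qubo` and the example weights are assumptions made for illustration; the exhaustive comparison at the end is only a sanity check on this small instance.

```python
from itertools import product


def solve_diagonal_qubo(diagonal):
    """Minimize sum_i diagonal[i] * x[i] by setting x_i = 1 exactly when the weight is negative."""
    x = [1 if q < 0 else 0 for q in diagonal]
    value = sum(q for q in diagonal if q < 0)
    return x, value


# Hypothetical diagonal entries Q_ii of a diagonal matrix Q.
diag = [3.0, -2.0, 0.0, -0.5]
x_opt, f_opt = solve_diagonal_qubo(diag)

# Exhaustive check on this small instance: no assignment achieves a lower value.
brute_force_min = min(
    sum(q * b for q, b in zip(diag, x))
    for x in product((0, 1), repeat=len(diag))
)
```

Each bit is decided in constant time, so the whole assignment takes time linear in the number of variables.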

QUBO can be solved using integer linear programming solvers like CPLEX or Gurobi Optimizer. This is possible since QUBO can be reformulated as a linearly constrained binary optimization problem. To achieve this, substitute each product $x_i x_j$ by an additional binary variable $z_{ij} \in \{0, 1\}$ and add the constraints $x_i \ge z_{ij}$, $x_j \ge z_{ij}$ and $x_i + x_j - 1 \le z_{ij}$. Note that $z_{ij}$ can also be relaxed to a continuous variable within the bounds zero and one.
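That the three constraints really force the new variable to equal the product can be checked by enumeration. This is a small sketch with a hypothetical helper `linearization_feasible`; it does not call any solver and only inspects the four possible assignments of the two bits.

```python
from itertools import product


def linearization_feasible(x_i, x_j, z_ij):
    """Check the three linear constraints that tie z_ij to the product x_i * x_j."""
    return x_i >= z_ij and x_j >= z_ij and x_i + x_j - 1 <= z_ij


# For every binary pair (x_i, x_j), collect the binary z_ij values the constraints allow.
allowed = {
    (x_i, x_j): [z for z in (0, 1) if linearization_feasible(x_i, x_j, z)]
    for x_i, x_j in product((0, 1), repeat=2)
}

# The only feasible z_ij is the product x_i * x_j in all four cases.
products_match = all(zs == [x_i * x_j] for (x_i, x_j), zs in allowed.items())
```

The first two constraints push the auxiliary variable to 0 whenever one of the bits is 0, and the third pushes it to 1 when both bits are 1, which is why the quadratic objective can be replaced by a linear one in the new variables.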

Applications

QUBO is a structurally simple, yet computationally hard optimization problem. It can be used to encode a wide range of optimization problems from various scientific areas.

Cluster Analysis

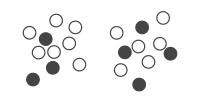

Binary Clustering with QUBO A bad cluster assignment.

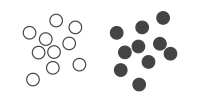

A bad cluster assignment. A good cluster assignment.Visual representation of a clustering problem with 20 points: Circles of the same color belong to the same cluster. Each circle can be understood as a binary variable in the corresponding QUBO problem.

A good cluster assignment.Visual representation of a clustering problem with 20 points: Circles of the same color belong to the same cluster. Each circle can be understood as a binary variable in the corresponding QUBO problem.

As an illustrative example of how QUBO can be used to encode an optimization problem, we consider the problem of cluster analysis. Here, we are given a set of 20 points in 2D space, described by a matrix $D \in \mathbb{R}^{20 \times 2}$, where each row contains two Cartesian coordinates. We want to assign each point to one of two classes or clusters, such that points in the same cluster are similar to each other. For two clusters, we can assign a binary variable $x_i \in \mathbb{B}$ to the point corresponding to the $i$-th row in $D$, indicating whether it belongs to the first ($x_i = 0$) or second cluster ($x_i = 1$). Consequently, we have 20 binary variables, which form a binary vector $x \in \mathbb{B}^{20}$ that corresponds to a cluster assignment of all points (see figure).

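One way to derive a clustering is to consider the pairwise distances between points. Given a cluster assignment $x$, one of $x_i x_j$ or $(1 - x_i)(1 - x_j)$ evaluates to 1 if points $i$ and $j$ are in the same cluster. Similarly, one of $x_i (1 - x_j)$ or $(1 - x_i) x_j$ indicates that they are in different clusters. Let $d_{ij} \ge 0$ denote the Euclidean distance between points $i$ and $j$. In order to define a cost function to minimize, when points $i$ and $j$ are in the same cluster we add their positive distance $d_{ij}$, and subtract it when they are in different clusters. This way, an optimal solution tends to place points which are far apart into different clusters, and points that are close into the same cluster. The cost function thus comes down to

$$\begin{aligned}
f(x) &= \sum_{i < j} d_{ij} \bigl( x_i x_j + (1 - x_i)(1 - x_j) \bigr) - d_{ij} \bigl( x_i (1 - x_j) + (1 - x_i) x_j \bigr) \\
&= \sum_{i < j} \bigl[ 4 d_{ij} x_i x_j - 2 d_{ij} x_i - 2 d_{ij} x_j + d_{ij} \bigr] .
\end{aligned}$$

From the second line, the QUBO parameters can be found by collecting the coefficients of $x_i x_j$ and of $x_i$ (using $x_i = x_i x_i$) and dropping the constant $\sum_{i<j} d_{ij}$, which does not affect the minimizer:

$$Q_{ij} = \begin{cases}
4 d_{ij} & \text{if } i < j, \\
-2 \left( \sum_{k < i} d_{ki} + \sum_{k > i} d_{ik} \right) & \text{if } i = j, \\
0 & \text{if } i > j .
\end{cases}$$

By scale invariance, all entries may be divided by a common positive factor without changing the optimum. Using these parameters, the optimal QUBO solution corresponds to an optimal cluster assignment with respect to the cost function above.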
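As a rough sketch of this construction, the code below builds an upper triangular matrix by expanding the cost function pair by pair (weight $4 d_{ij}$ on each product $x_i x_j$ with $i < j$, and $-2 d_{ij}$ added to both diagonal entries), then solves a tiny instance exhaustively. The function names and the six sample points are hypothetical and stand in for the 20 points of the figure.

```python
from itertools import product
from math import dist


def clustering_qubo(points):
    """Build an upper triangular Q from pairwise Euclidean distances.

    Each pair i < j contributes 4*d to Q[i][j] and -2*d to Q[i][i] and Q[j][j];
    the constant term of the cost function is dropped.
    """
    n = len(points)
    Q = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = dist(points[i], points[j])
            Q[i][j] = 4.0 * d
            Q[i][i] -= 2.0 * d
            Q[j][j] -= 2.0 * d
    return Q


def qubo_value(Q, x):
    """Evaluate sum over i <= j of Q[i][j] * x[i] * x[j]."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


# Six made-up points forming two well-separated groups of three.
points = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0), (5.2, 4.9), (4.9, 5.3)]
Q = clustering_qubo(points)

# Exhaustive search over all 2^6 assignments (feasible only for tiny instances).
best = min(product((0, 1), repeat=len(points)), key=lambda x: qubo_value(Q, x))
```

The minimizer separates the first three points from the last three. Because swapping the labels 0 and 1 gives the same clustering and the same cost, which of the two labelings is returned is not significant.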

Connection to Ising models

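QUBO is very closely related and computationally equivalent to the Ising model, whose Hamiltonian function is defined as

$$H(\sigma) = -\sum_{\langle i\,j \rangle} J_{ij} \sigma_i \sigma_j - \mu \sum_{j} h_j \sigma_j$$

with real-valued parameters $h_j, J_{ij}, \mu$ for all $i, j$. The spin variables $\sigma_j$ are binary with values from $\{-1, +1\}$ instead of $\mathbb{B}$. Moreover, in the Ising model the variables are typically arranged in a lattice where only neighboring pairs of variables $\langle i\,j \rangle$ can have non-zero coefficients. Applying the identity $\sigma_j = 2 x_j - 1$ yields an equivalent QUBO problem:

$$\begin{aligned}
f(x) &= \sum_{\langle i\,j \rangle} -J_{ij} (2 x_i - 1)(2 x_j - 1) - \sum_{j} \mu h_j (2 x_j - 1) \\
&= \sum_{\langle i\,j \rangle} \bigl( -4 J_{ij} x_i x_j + 2 J_{ij} x_i + 2 J_{ij} x_j - J_{ij} \bigr) - \sum_{j} \bigl( 2 \mu h_j x_j - \mu h_j \bigr) \\
&= \sum_{i=1}^{n} \sum_{j=i}^{n} Q_{ij} x_i x_j + C ,
\end{aligned}$$

where

$$Q_{ij} = \begin{cases}
-4 J_{ij} & \text{if } i < j, \\
\sum_{\langle k\,i \rangle} 2 J_{ki} + \sum_{\langle i\,\ell \rangle} 2 J_{i\ell} - 2 \mu h_i & \text{if } i = j,
\end{cases}
\qquad
C = -\sum_{\langle i\,j \rangle} J_{ij} + \sum_{j} \mu h_j ,$$

with $J_{ij} = 0$ for pairs that are not neighbors, and using the fact that for a binary variable $x_j = x_j x_j$.

As the constant $C$ does not change the position of the optimum $x^*$, it can be neglected during optimization and is only important for recovering the original Hamiltonian function value.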
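The conversion can be sanity-checked numerically. The sketch below assumes the sign convention $H(\sigma) = -\sum_{\langle i\,j \rangle} J_{ij} \sigma_i \sigma_j - \mu \sum_j h_j \sigma_j$ and the substitution $\sigma_j = 2 x_j - 1$; other conventions flip some signs. The function names and the three-spin chain are made up for illustration.

```python
from itertools import product


def ising_to_qubo(J, h, mu=1.0):
    """Convert Ising parameters to an upper triangular Q and a constant C.

    J maps neighboring pairs (i, j) with i < j to couplings J_ij; h lists the fields h_j.
    Assumes H(sigma) = -sum J_ij s_i s_j - mu * sum h_j s_j.
    """
    n = len(h)
    Q = [[0.0] * n for _ in range(n)]
    C = 0.0
    for (i, j), J_ij in J.items():
        Q[i][j] += -4.0 * J_ij  # coefficient of x_i * x_j
        Q[i][i] += 2.0 * J_ij   # linear terms land on the diagonal (x = x * x)
        Q[j][j] += 2.0 * J_ij
        C -= J_ij
    for j, h_j in enumerate(h):
        Q[j][j] -= 2.0 * mu * h_j
        C += mu * h_j
    return Q, C


def ising_energy(J, h, sigma, mu=1.0):
    """Evaluate the Ising Hamiltonian under the sign convention stated above."""
    pair_term = sum(J_ij * sigma[i] * sigma[j] for (i, j), J_ij in J.items())
    field_term = sum(h_j * s for h_j, s in zip(h, sigma))
    return -pair_term - mu * field_term


def qubo_value(Q, x):
    """Evaluate sum over i <= j of Q[i][j] * x[i] * x[j]."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


# Hypothetical three-spin chain with couplings on the pairs (0, 1) and (1, 2).
J = {(0, 1): 1.0, (1, 2): -0.5}
h = [0.5, 0.0, -1.0]
Q, C = ising_to_qubo(J, h)

# Largest deviation between H(sigma) and f_Q(x) + C over all 2^3 assignments.
max_gap = max(
    abs(ising_energy(J, h, [2 * b - 1 for b in x]) - (qubo_value(Q, x) + C))
    for x in product((0, 1), repeat=len(h))
)
```

If the conversion is correct, `max_gap` is zero up to floating-point rounding, so minimizing the QUBO and minimizing the Hamiltonian select the same assignment.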

References

- Kochenberger, Gary; Hao, Jin-Kao; Glover, Fred; Lewis, Mark; Lu, Zhipeng; Wang, Haibo; Wang, Yang (2014). "The unconstrained binary quadratic programming problem: a survey" (PDF). Journal of Combinatorial Optimization. 28: 58–81. doi:10.1007/s10878-014-9734-0. S2CID 16808394.

- Glover, Fred; Kochenberger, Gary (2019). "A Tutorial on Formulating and Using QUBO Models". arXiv:1811.11538.

- Lucas, Andrew (2014). "Ising formulations of many NP problems". Frontiers in Physics. 2: 5. arXiv:1302.5843. Bibcode:2014FrP.....2....5L. doi:10.3389/fphy.2014.00005.

- Mücke, Sascha; Piatkowski, Nico; Morik, Katharina (2019). "Learning Bit by Bit: Extracting the Essence of Machine Learning" (PDF). LWDA. S2CID 202760166. Archived from the original (PDF) on 2020-02-27.

- Tom Simonite (8 May 2013). "D-Wave's Quantum Computer Goes to the Races, Wins". MIT Technology Review. Archived from the original on 24 September 2015. Retrieved 12 May 2013.

- Punnen, A. P. (editor) (2022). The Quadratic Unconstrained Binary Optimization Problem: Theory, Algorithms, and Applications. Springer.

- Çela, E., Punnen, A.P. (2022). Complexity and Polynomially Solvable Special Cases of QUBO. In: Punnen, A.P. (eds) The Quadratic Unconstrained Binary Optimization Problem. Springer, Cham. https://doi.org/10.1007/978-3-031-04520-2_3

- See Theorem 3.16 in Punnen (2022); note that the authors assume the maximization version of QUBO.

- Ratke, Daniel (2021-06-10). "List of QUBO formulations". Retrieved 2022-12-16.

External links

- QUBO Benchmark (Benchmark of software packages for the exact solution of QUBOs; part of the well-known Mittelmann benchmark collection)

- Endre Boros, Peter L Hammer & Gabriel Tavares (April 2007). "Local search heuristics for Quadratic Unconstrained Binary Optimization (QUBO)". Journal of Heuristics. 13 (2). Association for Computing Machinery: 99–132. doi:10.1007/s10732-007-9009-3. S2CID 32887708. Retrieved 12 May 2013.

- Di Wang & Robert Kleinberg (November 2009). "Analyzing quadratic unconstrained binary optimization problems via multicommodity flows". Discrete Applied Mathematics. 157 (18). Elsevier: 3746–3753. doi:10.1016/j.dam.2009.07.009. PMC 2808708. PMID 20161596.
