| This article needs additional citations for verification. Please help improve this article by adding citations to reliable sources. Unsourced material may be challenged and removed. Find sources: "Self-balancing binary search tree" – news · newspapers · books · scholar · JSTOR (November 2010) (Learn how and when to remove this message) |

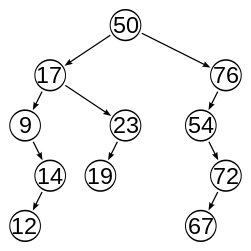

In computer science, a self-balancing binary search tree (BST) is any node-based binary search tree that automatically keeps its height (maximal number of levels below the root) small in the face of arbitrary item insertions and deletions. These operations when designed for a self-balancing binary search tree, contain precautionary measures against boundlessly increasing tree height, so that these abstract data structures receive the attribute "self-balancing".

For height-balanced binary trees, the height is defined to be logarithmic in the number of items. This is the case for many binary search trees, such as AVL trees and red–black trees. Splay trees and treaps are self-balancing but not height-balanced, as their height is not guaranteed to be logarithmic in the number of items.

Self-balancing binary search trees provide efficient implementations for mutable ordered lists, and can be used for other abstract data structures such as associative arrays, priority queues and sets.

Overview

Most operations on a binary search tree (BST) take time directly proportional to the height of the tree, so it is desirable to keep the height small. A binary tree with height h can contain at most 2+2+···+2 = 2−1 nodes. It follows that for any tree with n nodes and height h:

And that implies:

- .

In other words, the minimum height of a binary tree with n nodes is log2(n), rounded down; that is, .

However, the simplest algorithms for BST item insertion may yield a tree with height n in rather common situations. For example, when the items are inserted in sorted key order, the tree degenerates into a linked list with n nodes. The difference in performance between the two situations may be enormous: for example, when n = 1,000,000, the minimum height is .

If the data items are known ahead of time, the height can be kept small, in the average sense, by adding values in a random order, resulting in a random binary search tree. However, there are many situations (such as online algorithms) where this randomization is not viable.

Self-balancing binary trees solve this problem by performing transformations on the tree (such as tree rotations) at key insertion times, in order to keep the height proportional to log2(n). Although a certain overhead is involved, it is not bigger than the always necessary lookup cost and may be justified by ensuring fast execution of all operations.

While it is possible to maintain a BST with minimum height with expected time operations (lookup/insertion/removal), the additional space requirements required to maintain such a structure tend to outweigh the decrease in search time. For comparison, an AVL tree is guaranteed to be within a factor of 1.44 of the optimal height while requiring only two additional bits of storage in a naive implementation. Therefore, most self-balancing BST algorithms keep the height within a constant factor of this lower bound.

In the asymptotic ("Big-O") sense, a self-balancing BST structure containing n items allows the lookup, insertion, and removal of an item in worst-case time, and ordered enumeration of all items in time. For some implementations these are per-operation time bounds, while for others they are amortized bounds over a sequence of operations. These times are asymptotically optimal among all data structures that manipulate the key only through comparisons.

Implementations

Data structures implementing this type of tree include:

- 2–3 tree

- AA tree

- AVL tree

- B-tree

- Red–black tree

- Scapegoat tree

- Tango tree

- Treap

- Weight-balanced tree

Applications

Self-balancing binary search trees can be used in a natural way to construct and maintain ordered lists, such as priority queues. They can also be used for associative arrays; key-value pairs are simply inserted with an ordering based on the key alone. In this capacity, self-balancing BSTs have a number of advantages and disadvantages over their main competitor, hash tables. One advantage of self-balancing BSTs is that they allow fast (indeed, asymptotically optimal) enumeration of the items in key order, which hash tables do not provide. One disadvantage is that their lookup algorithms get more complicated when there may be multiple items with the same key. Self-balancing BSTs have better worst-case lookup performance than most hash tables ( compared to ), but have worse average-case performance ( compared to ).

Self-balancing BSTs can be used to implement any algorithm that requires mutable ordered lists, to achieve optimal worst-case asymptotic performance. For example, if binary tree sort is implemented with a self-balancing BST, we have a very simple-to-describe yet asymptotically optimal sorting algorithm. Similarly, many algorithms in computational geometry exploit variations on self-balancing BSTs to solve problems such as the line segment intersection problem and the point location problem efficiently. (For average-case performance, however, self-balancing BSTs may be less efficient than other solutions. Binary tree sort, in particular, is likely to be slower than merge sort, quicksort, or heapsort, because of the tree-balancing overhead as well as cache access patterns.)

Self-balancing BSTs are flexible data structures, in that it's easy to extend them to efficiently record additional information or perform new operations. For example, one can record the number of nodes in each subtree having a certain property, allowing one to count the number of nodes in a certain key range with that property in time. These extensions can be used, for example, to optimize database queries or other list-processing algorithms.

See also

References

- ^ Donald Knuth. The Art of Computer Programming, Volume 3: Sorting and Searching, Second Edition. Addison-Wesley, 1998. ISBN 0-201-89685-0. Section 6.2.3: Balanced Trees, pp.458–481.

- Cuckoo hashing provides worst-case lookup performance of .

External links

- Dictionary of Algorithms and Data Structures: Height-balanced binary search tree

- GNU libavl, a LGPL-licensed library of binary tree implementations in C, with documentation

| Tree data structures | |

|---|---|

| Search trees (dynamic sets/associative arrays) | |

| Heaps | |

| Tries | |

| Spatial data partitioning trees | |

| Other trees | |

| Data structures | |

|---|---|

| Types | |

| Abstract | |

| Arrays | |

| Linked | |

| Trees | |

| Graphs | |

in the number

in the number  of items. This is the case for many binary search trees, such as

of items. This is the case for many binary search trees, such as

.

. .

.

.

.

time. For some implementations these are per-operation time bounds, while for others they are

time. For some implementations these are per-operation time bounds, while for others they are  ).

).

sorting algorithm. Similarly, many algorithms in

sorting algorithm. Similarly, many algorithms in