(1, 0, 1, 0, 0, 1, 1, 0) × H(8) = (4, 2, 0, −2, 0, 2, 0, 2)

The Hadamard transform (also known as the Walsh–Hadamard transform, Hadamard–Rademacher–Walsh transform, Walsh transform, or Walsh–Fourier transform) is an example of a generalized class of Fourier transforms. It performs an orthogonal, symmetric, involutive, linear operation on $2^m$ real numbers (or complex, or hypercomplex numbers, although the Hadamard matrices themselves are purely real).

The Hadamard transform can be regarded as being built out of size-2 discrete Fourier transforms (DFTs), and is in fact equivalent to a multidimensional DFT of size 2 × 2 × ⋯ × 2 × 2. It decomposes an arbitrary input vector into a superposition of Walsh functions.

The transform is named for the French mathematician Jacques Hadamard (French: [adamaʁ]), the German-American mathematician Hans Rademacher, and the American mathematician Joseph L. Walsh.

Definition

The Hadamard transform $H_m$ is a $2^m \times 2^m$ matrix, the Hadamard matrix (scaled by a normalization factor), that transforms $2^m$ real numbers $x_n$ into $2^m$ real numbers $X_k$. The Hadamard transform can be defined in two ways: recursively, or by using the binary (base-2) representation of the indices $n$ and $k$.

Recursively, we define the 1 × 1 Hadamard transform $H_0$ by the identity $H_0 = 1$, and then define $H_m$ for $m > 0$ by:

$$H_m = \frac{1}{\sqrt{2}} \begin{pmatrix} H_{m-1} & H_{m-1} \\ H_{m-1} & -H_{m-1} \end{pmatrix}$$

where the $1/\sqrt{2}$ is a normalization that is sometimes omitted.

For $m > 1$, we can also define $H_m$ by:

$$H_m = H_1 \otimes H_{m-1}$$

where $\otimes$ represents the Kronecker product. Thus, other than this normalization factor, the Hadamard matrices are made up entirely of 1 and −1.
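The two definitions above can be checked against each other with a minimal sketch. It assumes the normalization is omitted (so all entries are ±1), and the helper names `kron`, `hadamard_recursive`, `hadamard_kron` and `matmul` are illustrative only.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[x * y for x in row_a for y in row_b]
            for row_a in a for row_b in b]

H1 = [[1, 1], [1, -1]]  # unnormalized size-2 transform

def hadamard_recursive(m):
    """Unnormalized 2^m x 2^m Hadamard matrix from the block recursion."""
    if m == 0:
        return [[1]]
    h = hadamard_recursive(m - 1)
    top = [row + row for row in h]                    # [H, H]
    bottom = [row + [-v for v in row] for row in h]   # [H, -H]
    return top + bottom

def hadamard_kron(m):
    """Unnormalized Hadamard matrix built as H1 (x) H_{m-1}."""
    h = [[1]]
    for _ in range(m):
        h = kron(H1, h)
    return h

def matmul(a, b):
    """Plain matrix product for small integer matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

H3 = hadamard_recursive(3)
print(H3 == hadamard_kron(3))   # both constructions agree
print(matmul(H3, H3)[0][:3])    # first entries of 8 * identity
```

Without the $1/\sqrt{2}$ factors the product $H_m H_m$ equals $2^m$ times the identity, which is the unnormalized form of the involutive property.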

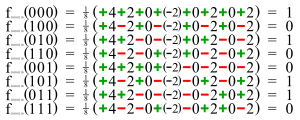

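Equivalently, we can define the Hadamard matrix by its (k, n)-th entry by writing

$$k = \sum_{i=0}^{m-1} k_i 2^i = k_{m-1} 2^{m-1} + k_{m-2} 2^{m-2} + \dots + k_1 2 + k_0$$

$$n = \sum_{i=0}^{m-1} n_i 2^i = n_{m-1} 2^{m-1} + n_{m-2} 2^{m-2} + \dots + n_1 2 + n_0$$

where the $k_j$ and $n_j$ are the bit elements (0 or 1) of $k$ and $n$, respectively. Note that the element in the top left corner corresponds to $k = n = 0$. With this indexing, we have:

$$(H_m)_{k,n} = \frac{1}{2^{m/2}} (-1)^{\sum_j k_j n_j}$$

This is exactly the multidimensional 2 × 2 × ⋯ × 2 × 2 DFT, normalized to be unitary, if the inputs and outputs are regarded as multidimensional arrays indexed by the $n_j$ and $k_j$, respectively.

Some examples of the Hadamard matrices follow.

$$H_0 = \begin{pmatrix} 1 \end{pmatrix}, \qquad H_1 = \frac{1}{\sqrt{2}} \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}, \qquad H_2 = \frac{1}{2} \begin{pmatrix} 1 & 1 & 1 & 1 \\ 1 & -1 & 1 & -1 \\ 1 & 1 & -1 & -1 \\ 1 & -1 & -1 & 1 \end{pmatrix}$$

$$H_3 = \frac{1}{2^{3/2}} \begin{pmatrix} 1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & -1 & 1 & -1 & 1 & -1 & 1 & -1 \\ 1 & 1 & -1 & -1 & 1 & 1 & -1 & -1 \\ 1 & -1 & -1 & 1 & 1 & -1 & -1 & 1 \\ 1 & 1 & 1 & 1 & -1 & -1 & -1 & -1 \\ 1 & -1 & 1 & -1 & -1 & 1 & -1 & 1 \\ 1 & 1 & -1 & -1 & -1 & -1 & 1 & 1 \\ 1 & -1 & -1 & 1 & -1 & 1 & 1 & -1 \end{pmatrix}$$

In general,

$$(H_n)_{i,j} = \frac{1}{2^{n/2}} (-1)^{i \cdot j}$$

where $i \cdot j$ is the bitwise dot product of the binary representations of the numbers $i$ and $j$. For example, if $n \geq 2$, then $(H_n)_{3,2} = (-1)^{3 \cdot 2} = (-1)^{(1,1) \cdot (1,0)} = (-1)^{1+0} = -1$, agreeing with the above (ignoring the overall constant). Note that the first row, first column element of the matrix is denoted by $(H_n)_{0,0}$.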
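The entry formula based on the bitwise dot product can be sketched directly. The functions `bit_dot_parity`, `hadamard_entry` and `hadamard_matrix` are hypothetical names, and the overall normalization constant is left out.

```python
def bit_dot_parity(i, j):
    """Parity of the bitwise dot product of the binary digits of i and j."""
    return bin(i & j).count("1") % 2

def hadamard_entry(i, j):
    """Unnormalized (i, j) entry: (-1) raised to the bitwise dot product."""
    return -1 if bit_dot_parity(i, j) else 1

def hadamard_matrix(m):
    """Unnormalized 2^m x 2^m Hadamard matrix in natural (Hadamard) order."""
    size = 1 << m
    return [[hadamard_entry(i, j) for j in range(size)] for i in range(size)]

print(hadamard_entry(3, 2))   # -1, since (1,1).(1,0) = 1
print(hadamard_entry(0, 0))   # 1, the top left element
for row in hadamard_matrix(2):
    print(row)
```

Only the parity of the dot product matters, so a single AND followed by a bit count is enough to obtain each sign.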

$H_1$ is precisely the size-2 DFT. It can also be regarded as the Fourier transform on the two-element additive group $\mathbb{Z}/(2)$.

The rows of the Hadamard matrices are the Walsh functions.


Advantages of the Walsh–Hadamard transform

Real

According to the above definition of the matrix $H$, here we let $H = H[m, n]$, with $m$ indexing the rows and $n$ the columns.

In the Walsh transform, only 1 and −1 appear in the matrix. Both are real numbers, so no complex arithmetic is needed.

No multiplication is required

The DFT needs irrational multiplication, while the Hadamard transform does not. Even rational multiplication is not needed, since sign flips are all it takes.

Some properties are similar to those of the DFT

In the Walsh transform matrix, all entries in the first row (and column) are equal to 1.

Counting the sign changes along each row of the Walsh matrix gives:

First row = 0 sign changes

Second row = 1 sign change

Third row = 2 sign changes

⋮

Eighth row = 7 sign changes

Discrete Fourier transform:

In the discrete Fourier transform, when $m$ equals zero (that is, in the first row), every entry of the DFT matrix is also 1. From the second row onward the rows differ from the first, but the matrix shows a characteristic pattern: the first row corresponds to the lowest frequency, and the frequency increases row by row up to the last row.

If we calculate zero crossing:

First row = 0 zero crossing

Second row = 1 zero crossing

Third row = 2 zero crossings

⋮

Eighth row = 7 zero crossings
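The row-by-row counts listed above hold when the rows are arranged in sequency (Walsh) order. As a rough illustration, the following sketch counts sign changes for the 8 × 8 matrix in natural (Hadamard) order and then sorts the rows by that count. The function names are illustrative, and sorting by sign changes is just one simple way to obtain the sequency ordering.

```python
def hadamard_matrix(m):
    """Unnormalized Hadamard matrix in natural order, entries (-1)^(i.j)."""
    size = 1 << m
    return [[-1 if bin(i & j).count("1") % 2 else 1 for j in range(size)]
            for i in range(size)]

def sign_changes(row):
    """Number of adjacent positions in the row where the sign flips."""
    return sum(1 for a, b in zip(row, row[1:]) if a != b)

H = hadamard_matrix(3)
natural_counts = [sign_changes(row) for row in H]
print(natural_counts)   # [0, 7, 3, 4, 1, 6, 2, 5]

# Sorting the rows by their sign-change count gives the sequency ordering,
# in which the counts run 0, 1, 2, ..., 7.
walsh_rows = sorted(H, key=sign_changes)
print([sign_changes(row) for row in walsh_rows])   # [0, 1, 2, 3, 4, 5, 6, 7]
```

Each count from 0 to 7 occurs exactly once, so the sort is unambiguous for this size.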

Relation to Fourier transform

The Hadamard transform is in fact equivalent to a multidimensional DFT of size 2 × 2 × ⋯ × 2 × 2.

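Another approach is to view the Hadamard transform as a Fourier transform on the Boolean group $(\mathbb{Z}/2\mathbb{Z})^n$. Using the Fourier transform on finite (abelian) groups, the Fourier transform of a function $f \colon (\mathbb{Z}/2\mathbb{Z})^n \to \mathbb{C}$ is the function $\hat{f}$ defined by

$$\hat{f}(\chi) = \sum_{a \in (\mathbb{Z}/2\mathbb{Z})^n} f(a) \bar{\chi}(a)$$

where $\chi$ is a character of $(\mathbb{Z}/2\mathbb{Z})^n$. Each character has the form $\chi_r(a) = (-1)^{a \cdot r}$ for some $r \in (\mathbb{Z}/2\mathbb{Z})^n$, where the multiplication is the boolean dot product on bit strings, so we can identify the input to $\hat{f}$ with $r \in (\mathbb{Z}/2\mathbb{Z})^n$ (Pontryagin duality) and define $\hat{f} \colon (\mathbb{Z}/2\mathbb{Z})^n \to \mathbb{C}$ by

$$\hat{f}(r) = \sum_{a \in (\mathbb{Z}/2\mathbb{Z})^n} f(a) (-1)^{r \cdot a}$$

This is the Hadamard transform of $f$, considering the input to $f$ and $\hat{f}$ as boolean strings.

In terms of the above formulation, where the Hadamard transform multiplies a vector $v$ of $2^n$ complex numbers on the left by the Hadamard matrix $H_n$, the equivalence is seen by letting $f$ take as input the bit string corresponding to the index of an element of $v$, and having $f$ output the corresponding element of $v$.

Compare this to the usual discrete Fourier transform, which when applied to a vector $v$ of $2^n$ complex numbers instead uses characters of the cyclic group $\mathbb{Z}/2^n\mathbb{Z}$.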
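The group-theoretic formulation can be sketched by treating a function on bit strings as a dictionary and summing over characters. The name `boolean_fourier` is hypothetical, the sum is left unnormalized, and the input values are those of the example at the top of the article.

```python
from itertools import product

def boolean_fourier(f, n):
    """Return f_hat, where f_hat(r) is the sum over a of f(a) * (-1)^(r.a).

    f maps bit tuples of length n to numbers; r.a is the boolean dot product.
    """
    points = list(product((0, 1), repeat=n))

    def dot(a, r):
        return sum(x & y for x, y in zip(a, r)) % 2

    return {r: sum(f[a] * (-1) ** dot(a, r) for a in points) for r in points}

# The vector (1, 0, 1, 0, 0, 1, 1, 0), indexed by 3-bit strings in order.
points = list(product((0, 1), repeat=3))
f = dict(zip(points, (1, 0, 1, 0, 0, 1, 1, 0)))
f_hat = boolean_fourier(f, 3)
print([f_hat[r] for r in points])   # [4, 2, 0, -2, 0, 2, 0, 2]
```

This direct summation takes on the order of $4^n$ operations and is meant only to mirror the definition, not to be efficient.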

Computational complexity

In the classical domain, the Hadamard transform can be computed in $n \log n$ operations ($n = 2^m$), using the fast Hadamard transform algorithm.
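A minimal sketch of the fast Hadamard transform follows, assuming the unnormalized transform in natural (Hadamard) order. The function name `fwht` is illustrative. Each of the $\log_2 n$ passes performs $n/2$ add/subtract butterflies, which gives the $n \log n$ operation count.

```python
def fwht(values):
    """Unnormalized fast Walsh-Hadamard transform of a power-of-two-length list."""
    a = list(values)
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        # Combine blocks of length h pairwise using only additions and subtractions.
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                x, y = a[i], a[i + h]
                a[i], a[i + h] = x + y, x - y
        h *= 2
    return a

x = [1, 0, 1, 0, 0, 1, 1, 0]
print(fwht(x))         # [4, 2, 0, -2, 0, 2, 0, 2]
print(fwht(fwht(x)))   # n times the input: [8, 0, 8, 0, 0, 8, 8, 0]
```

Applying the unnormalized transform twice returns the input scaled by $n$, so dividing by $n$ (or by $\sqrt{n}$ after each pass) recovers the involutive, normalized form.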

In the quantum domain, the Hadamard transform can be computed in $O(1)$ time, as it is a quantum logic gate that can be parallelized.

Quantum computing applications

The Hadamard transform is used extensively in quantum computing. The 2 × 2 Hadamard transform $H_1$ is the quantum logic gate known as the Hadamard gate, and the application of a Hadamard gate to each qubit of an $n$-qubit register in parallel is equivalent to the Hadamard transform $H_n$.

Hadamard gate

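Further information: Quantum gate § Hadamard gate

In quantum computing, the Hadamard gate is a one-qubit rotation, mapping the qubit-basis states $|0\rangle$ and $|1\rangle$ to two superposition states with equal weight of the computational basis states $|0\rangle$ and $|1\rangle$. Usually the phases are chosen so that

$$H = \frac{|0\rangle + |1\rangle}{\sqrt{2}} \langle 0| + \frac{|0\rangle - |1\rangle}{\sqrt{2}} \langle 1|$$

in Dirac notation. This corresponds to the transformation matrix

$$H_1 = \frac{1}{\sqrt{2}} \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}$$

in the $|0\rangle, |1\rangle$ basis, also known as the computational basis. The states $\frac{|0\rangle + |1\rangle}{\sqrt{2}}$ and $\frac{|0\rangle - |1\rangle}{\sqrt{2}}$ are known as $|+\rangle$ and $|-\rangle$ respectively, and together constitute the polar basis in quantum computing.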

Hadamard gate operations

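$$\begin{aligned} H|0\rangle &= \frac{1}{\sqrt{2}} \left( |0\rangle + |1\rangle \right) = |+\rangle \\ H|1\rangle &= \frac{1}{\sqrt{2}} \left( |0\rangle - |1\rangle \right) = |-\rangle \\ H|+\rangle &= |0\rangle \\ H|-\rangle &= |1\rangle \end{aligned}$$

One application of the Hadamard gate to either a 0 or 1 qubit will produce a quantum state that, if observed, will be a 0 or 1 with equal probability (as seen in the first two operations). This is exactly like flipping a fair coin in the standard probabilistic model of computation. However, if the Hadamard gate is applied twice in succession (as is effectively being done in the last two operations), then the final state is always the same as the initial state.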
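The behaviour described above can be reproduced with a small state-vector sketch. It represents a qubit as a list of two real amplitudes, which is an assumption made for brevity (general amplitudes are complex), and the helper name `apply` is illustrative.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]   # Hadamard gate in the computational basis

def apply(gate, state):
    """Multiply a 2 x 2 gate matrix by a 2-component state vector."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

ket0, ket1 = [1.0, 0.0], [0.0, 1.0]

plus = apply(H, ket0)    # equal superposition with a plus sign
minus = apply(H, ket1)   # equal superposition with a minus sign

# Measurement probabilities are the squared magnitudes of the amplitudes.
probs_plus = [abs(a) ** 2 for a in plus]     # approximately [0.5, 0.5]
probs_minus = [abs(a) ** 2 for a in minus]   # approximately [0.5, 0.5]

# Applying the gate a second time returns the starting state (up to rounding).
back_to_0 = apply(H, plus)
back_to_1 = apply(H, minus)
print(probs_plus, probs_minus, back_to_0, back_to_1)
```

The second application undoes the first because the normalized Hadamard matrix is its own inverse.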

Hadamard transform in quantum algorithms

Computing the quantum Hadamard transform is simply the application of a Hadamard gate to each qubit individually because of the tensor product structure of the Hadamard transform. This simple result means the quantum Hadamard transform requires $\log_2 N$ operations, compared to the classical case of $N \log_2 N$ operations.

For an $n$-qubit system, Hadamard gates acting on each of the $n$ qubits (each initialized to $|0\rangle$) can be used to prepare uniform quantum superposition states when $N$ is of the form $N = 2^n$.

In this case with $n$ qubits, the combined Hadamard gate $H_n$ is expressed as the tensor product of $n$ Hadamard gates:

$$H_n = \underbrace{H \otimes H \otimes \cdots \otimes H}_{n \text{ times}}$$

The resulting uniform quantum superposition state is then:

$$H_n |0\rangle^{\otimes n} = \frac{1}{\sqrt{2^n}} \sum_{j=0}^{2^n - 1} |j\rangle$$

This generalizes the preparation of uniform quantum states using Hadamard gates for any $N = 2^n$.

Measurement of this uniform quantum state results in a random state between $|0\rangle$ and $|N-1\rangle$.
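As a rough numerical check of the uniform superposition, the sketch below builds the $n$-qubit state as a Kronecker product of single-qubit states, using the fact that applying a Hadamard gate to each $|0\rangle$ separately gives the same result as applying the combined gate. The names `kron_vec` and `uniform_superposition` are illustrative.

```python
import math

def kron_vec(u, v):
    """Kronecker (tensor) product of two state vectors."""
    return [a * b for a in u for b in v]

def uniform_superposition(n):
    """State obtained by applying a Hadamard gate to each of n qubits in |0>."""
    plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]   # H|0>
    state = [1.0]
    for _ in range(n):
        state = kron_vec(state, plus)
    return state

state = uniform_superposition(3)
print(len(state))                             # 8 basis states
print(state[0], 1 / math.sqrt(8))             # every amplitude is about 1/sqrt(8)
print(sum(abs(a) ** 2 for a in state))        # total probability, about 1
```

Every basis state has probability $1/2^n$, so a measurement returns each of the $N = 2^n$ outcomes with equal likelihood.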

Many quantum algorithms use the Hadamard transform as an initial step, since, as explained earlier, it maps $n$ qubits initialized with $|0\rangle$ to a superposition of all $2^n$ orthogonal states in the $|0\rangle, |1\rangle$ basis with equal weight. For example, this is used in the Deutsch–Jozsa algorithm, Simon's algorithm, the Bernstein–Vazirani algorithm, and in Grover's algorithm. Note that Shor's algorithm uses both an initial Hadamard transform and the quantum Fourier transform, which are both types of Fourier transforms on finite groups; the first on $(\mathbb{Z}/2\mathbb{Z})^n$ and the second on $\mathbb{Z}/2^n\mathbb{Z}$.

Preparation of uniform quantum superposition states in the general case, when $N \neq 2^n$, is non-trivial and requires more work. An efficient and deterministic approach for preparing the superposition state $|\Psi\rangle = \frac{1}{\sqrt{N}} \sum_{j=0}^{N-1} |j\rangle$ with a gate complexity and circuit depth of only $O(\log_2 N)$ for all $N$ was recently presented. This approach requires only $n = \lceil \log_2 N \rceil$ qubits. Importantly, neither ancilla qubits nor any quantum gates with multiple controls are needed in this approach for creating the uniform superposition state $|\Psi\rangle$.

Molecular phylogenetics (evolutionary biology) applications

The Hadamard transform can be used to estimate phylogenetic trees from molecular data. Phylogenetics is the subfield of evolutionary biology focused on understanding the relationships among organisms. A Hadamard transform applied to a vector (or matrix) of site pattern frequencies obtained from a DNA multiple sequence alignment can be used to generate another vector that carries information about the tree topology. The invertible nature of the phylogenetic Hadamard transform also allows the calculation of site likelihoods from a tree topology vector, allowing one to use the Hadamard transform for maximum likelihood estimation of phylogenetic trees. However, the latter application is less useful than the transformation from the site pattern vector to the tree vector because there are other ways to calculate site likelihoods that are much more efficient. Even so, the invertible nature of the phylogenetic Hadamard transform provides an elegant tool for mathematical phylogenetics.

The mechanics of the phylogenetic Hadamard transform involve the calculation of a vector $\gamma(T)$ that provides information about the topology and branch lengths for tree $T$ using the site pattern vector or matrix $s(T)$.

$$\gamma(T) = H^{-1} \left( \ln \left( H s(T) \right) \right)$$

where $H$ is the Hadamard matrix of the appropriate size. This equation can be rewritten as a series of three equations to simplify its interpretation:

$$r = H s(T), \qquad \rho = \ln r, \qquad \gamma(T) = H^{-1} \rho$$

The invertible nature of this equation allows one to calculate an expected site pattern vector (or matrix) as follows:

$$s(T) = H^{-1} \left( \exp \left( H \gamma(T) \right) \right)$$

We can use the Cavender–Farris–Neyman (CFN) two-state substitution model for DNA by encoding the nucleotides as binary characters (the purines A and G are encoded as R and the pyrimidines C and T are encoded as Y). This makes it possible to encode the multiple sequence alignment as the site pattern vector $s(T)$ that can be converted to a tree vector $\gamma(T)$, as shown in the following example:

| Index | Binary pattern | Alignment patterns | $\gamma(T)$ | $\rho = H\gamma(T)$ | $r = \exp(\rho)$ | $s(T) = H^{-1}r$ |
|---|---|---|---|---|---|---|
| 0 | 0000 | RRRR and YYYY | −0.475 | 0 | 1 | 0.6479 |
| 1 | 0001 | RRRY and YYYR | 0.2 | −0.5 | 0.6065 | 0.1283 |
| 2 | 0010 | RRYR and YYRY | 0.025 | −0.15 | 0.8607 | 0.02 |
| 3* | 0011 | RRYY and YYRR | 0.025 | −0.45 | 0.6376 | 0.0226 |
| 4 | 0100 | RYRR and YRYY | 0.2 | −0.45 | 0.6376 | 0.1283 |
| 5* | 0101 | RYRY and YRYR | 0 | −0.85 | 0.4274 | 0.0258 |
| 6* | 0110 | RYYR and YRRY | 0 | −0.5 | 0.6065 | 0.0070 |
| 7 | 0111 | RYYY and YRRR | 0.025 | −0.9 | 0.4066 | 0.02 |

The example shown in this table uses the simplified three-equation scheme for a four-taxon tree that can be written as ((A,B),(C,D)); in newick format. The site patterns are written in the order ABCD. This particular tree has two long terminal branches (0.2 transversion substitutions per site), two short terminal branches (0.025 transversion substitutions per site), and a short internal branch (0.025 transversion substitutions per site); thus, it would be written as ((A:0.025,B:0.2):0.025,(C:0.025,D:0.2)); in newick format. This tree will exhibit long branch attraction if the data are analyzed using the maximum parsimony criterion (assuming the sequence analyzed is long enough for the observed site pattern frequencies to be close to the expected frequencies shown in the $s(T)$ column). The long branch attraction reflects the fact that the expected number of site patterns with index 5, which support the tree ((A,C),(B,D));, exceeds the expected number of site patterns that support the true tree (index 3). The invertible nature of the phylogenetic Hadamard transform means that the tree vector $\gamma(T)$ corresponds to the correct tree. Parsimony analysis after the transformation is therefore statistically consistent, as would be a standard maximum likelihood analysis using the correct model (in this case the CFN model).

Note that the site pattern with index 0 corresponds to the sites that have not changed (after encoding the nucleotides as purines or pyrimidines). The indices with asterisks (3, 5, and 6) are "parsimony-informative", and the remaining indices represent site patterns where a single taxon differs from the other three taxa (so they are the equivalent of terminal branch lengths in a standard maximum likelihood phylogenetic tree).
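The numbers in the four-taxon example can be reproduced with a short sketch. It assumes that $H$ is the unnormalized 8 × 8 Hadamard matrix with entries ±1, so that $H^{-1} = H/8$; this choice is inferred from the tabulated values rather than stated in the text. The function names are illustrative.

```python
import math

def fwht(values):
    """Multiply a power-of-two-length vector by the unnormalized Hadamard matrix."""
    a = list(values)
    h = 1
    while h < len(a):
        for start in range(0, len(a), 2 * h):
            for i in range(start, start + h):
                a[i], a[i + h] = a[i] + a[i + h], a[i] - a[i + h]
        h *= 2
    return a

def tree_to_site_patterns(gamma):
    """Expected site pattern vector: s = H^-1 exp(H gamma)."""
    r = [math.exp(v) for v in fwht(gamma)]
    return [v / len(r) for v in fwht(r)]

def site_patterns_to_tree(s):
    """Tree vector: gamma = H^-1 ln(H s)."""
    rho = [math.log(v) for v in fwht(s)]
    return [v / len(rho) for v in fwht(rho)]

# Tree vector for ((A:0.025,B:0.2):0.025,(C:0.025,D:0.2)); taken from the table.
gamma = [-0.475, 0.2, 0.025, 0.025, 0.2, 0.0, 0.0, 0.025]
s = tree_to_site_patterns(gamma)
print([round(v, 4) for v in s])

# Pattern 5 (RYRY) is expected more often than pattern 3 (RRYY, the true split).
print(s[5] > s[3])

# The inverse transform recovers the tree vector from the site patterns.
print([round(v, 3) for v in site_patterns_to_tree(s)])
```

The comparison between indices 5 and 3 is the long branch attraction effect discussed above, and the round trip illustrates why parsimony applied after the transformation selects the correct split.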

If one wishes to use nucleotide data without recoding as R and Y (and ultimately as 0 and 1) it is possible to encode the site patterns as a matrix. If we consider a four-taxon tree there are a total of 256 site patterns (four nucleotides to the 4th power). However, symmetries of the Kimura three-parameter (or K81) model allow us to reduce the 256 possible site patterns for DNA to 64 patterns, making it possible to encode the nucleotide data for a four-taxon tree as an 8 × 8 matrix in a manner similar to the vector of 8 elements used above for transversion (RY) site patterns. This is accomplished by recoding the data using the Klein four-group:

| Nucleotide 1 | Nucleotide 2 | Nucleotide 3 | Nucleotide 4 |
|---|---|---|---|
| A (0,0) | G (1,0) | C (0,1) | T (1,1) |
| C (0,0) | T (1,0) | A (0,1) | G (1,1) |
| G (0,0) | A (1,0) | T (0,1) | C (1,1) |
| T (0,0) | C (1,0) | G (0,1) | A (1,1) |

As with RY data, site patterns are indexed relative to the base in the arbitrarily chosen first taxon with the bases in the subsequent taxa encoded relative to that first base. Thus, the first taxon receives the bit pair (0,0). Using those bit pairs one can produce two vectors similar to the RY vectors and then populate the matrix using those vectors. This can be illustrated using the example from Hendy et al. (1994), which is based on a multiple sequence alignment of four primate hemoglobin pseudogenes:

| 0 | 8 | 16 | 24 | 32 | 40 | 48 | 56 | |

|---|---|---|---|---|---|---|---|---|

| 0 | 8988 | 9 | 10 | 12 | 24 | 90 | ||

| 1 | 41 | 9 | ** | |||||

| 2 | 45 | 13 | ||||||

| 3 | 54* | 14 | 3 | |||||

| 4 | 94 | 20 | ||||||

| 5 | 1 | |||||||

| 6 | 2 | 2 | ||||||

| 7 | 356 | 1 | 1 | 75 |

The much larger number of site patterns in column 0 reflects the fact that column 0 corresponds to transition differences, which accumulate more rapidly than transversion differences in virtually all comparisons of genomic regions (and definitely accumulate more rapidly in the hemoglobin pseudogenes used for this worked example). If we consider the site pattern AAGG, it would map to binary pattern 0000 for the second element of the Klein group bit pair and 0011 for the first element. In this case the binary pattern based on the first element corresponds to index 3 (so row 3 in column 0; indicated with a single asterisk in the table). The site patterns GGAA, CCTT, and TTCC would be encoded in exactly the same way. The site pattern AACT would be encoded with binary pattern 0011 based on the second element and 0001 based on the first element; this yields index 1 for the first element and index 3 for the second. The index based on the second element of the Klein group bit pair is multiplied by 8 to yield the column index (in this case it would be column 24). The cell that would include the count of AACT site patterns is indicated with two asterisks; however, the absence of a number in the example indicates that the sequence alignment includes no AACT site patterns (likewise, CCAG, GGTC, and TTGA site patterns, which would be encoded in the same way, are absent).
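The encoding of four-taxon site patterns into a row and column of the 8 × 8 matrix can be sketched as follows. The sketch assumes that each nucleotide is assigned a fixed bit pair (A = (0,0), G = (1,0), C = (0,1), T = (1,1)) and that the pair relative to the first taxon is obtained by a bitwise XOR, which reproduces every row of the Klein four-group table above. The name `site_pattern_cell` is hypothetical.

```python
# Fixed bit pairs; XOR with the first taxon's pair gives the relative encoding.
KLEIN = {"A": (0, 0), "G": (1, 0), "C": (0, 1), "T": (1, 1)}

def site_pattern_cell(pattern):
    """Return (row, column) of the 8 x 8 matrix for a four-taxon site pattern."""
    if len(pattern) != 4:
        raise ValueError("this sketch handles four-taxon patterns only")
    first = KLEIN[pattern[0]]
    row_bits = 0   # built from the first element of each bit pair
    col_bits = 0   # built from the second element of each bit pair
    for base in pattern:
        pair = KLEIN[base]
        row_bits = (row_bits << 1) | (pair[0] ^ first[0])
        col_bits = (col_bits << 1) | (pair[1] ^ first[1])
    # The index from the second element is multiplied by 8 to give the column.
    return row_bits, 8 * col_bits

print(site_pattern_cell("AAGG"))   # (3, 0)
print(site_pattern_cell("AACT"))   # (1, 24)
print(site_pattern_cell("TTGA"))   # (1, 24), same cell as AACT
```

Because the encoding depends only on differences from the first taxon, patterns such as AAGG, GGAA, CCTT and TTCC all fall into the same cell.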

Other applications

The Hadamard transform is also used in data encryption, as well as many signal processing and data compression algorithms, such as JPEG XR and MPEG-4 AVC. In video compression applications, it is usually used in the form of the sum of absolute transformed differences. It is also a crucial part of a significant number of algorithms in quantum computing. The Hadamard transform is also applied in experimental techniques such as NMR, mass spectrometry and crystallography. It is additionally used in some versions of locality-sensitive hashing, to obtain pseudo-random matrix rotations.


External links

- Ritter, Terry (August 1996). "Walsh–Hadamard Transforms: A Literature Survey".

- Akansu, Ali N.; Poluri, R. (July 2007). "Walsh-Like Nonlinear Phase Orthogonal Codes for Direct Sequence CDMA Communications" (PDF). IEEE Transactions on Signal Processing. 55 (7): 3800–6. Bibcode:2007ITSP...55.3800A. doi:10.1109/TSP.2007.894229. S2CID 6830633.

- Pan, Jeng-shyang Data Encryption Method Using Discrete Fractional Hadamard Transformation (May 28, 2009)

- Lachowicz, Dr. Pawel. Walsh–Hadamard Transform and Tests for Randomness of Financial Return-Series (April 7, 2015)

- Beddard, Godfrey; Yorke, Briony A. (January 2011). "Pump-probe Spectroscopy using Hadamard Transforms" (PDF). Archived from the original (PDF) on 2014-10-18. Retrieved 2012-04-28.

- Yorke, Briony A.; Beddard, Godfrey; Owen, Robin L.; Pearson, Arwen R. (September 2014). "Time-resolved crystallography using the Hadamard transform". Nature Methods. 11 (11): 1131–1134. doi:10.1038/nmeth.3139. PMC 4216935. PMID 25282611.

References

- Compare Figure 1 in Townsend, W.J.; Thornton, M.A. "Walsh spectrum computations using Cayley graphs". Proceedings of the 44th IEEE 2001 Midwest Symposium on Circuits and Systems (MWSCAS 2001). MWSCAS-01. IEEE. doi:10.1109/mwscas.2001.986127.

- Kunz, H.O. (1979). "On the Equivalence Between One-Dimensional Discrete Walsh–Hadamard and Multidimensional Discrete Fourier Transforms". IEEE Transactions on Computers. 28 (3): 267–8. doi:10.1109/TC.1979.1675334. S2CID 206621901.

- Fourier Analysis of Boolean Maps: A Tutorial, pp. 12–13

- Lecture 5: Basic quantum algorithms, Rajat Mittal, pp. 4–5

- Nielsen, Michael A.; Chuang, Isaac (2010). Quantum Computation and Quantum Information. Cambridge: Cambridge University Press. ISBN 978-1-10700-217-3. OCLC 43641333.

- Alok Shukla and Prakash Vedula (2024). "An efficient quantum algorithm for preparation of uniform quantum superposition states". Quantum Information Processing. 23:38 (1): 38. arXiv:2306.11747. Bibcode:2024QuIP...23...38S. doi:10.1007/s11128-024-04258-4.

- Hendy, Michael D.; Penny, David (December 1989). "A Framework for the Quantitative Study of Evolutionary Trees". Systematic Zoology. 38 (4): 297. doi:10.2307/2992396. JSTOR 2992396.

- Hendy, Michael D.; Penny, David (January 1993). "Spectral analysis of phylogenetic data". Journal of Classification. 10 (1): 5–24. doi:10.1007/BF02638451. ISSN 0176-4268. S2CID 122466038.

- Székely, L. A., Erdős, P. L., Steel, M. A., & Penny, D. (1993). A Fourier inversion formula for evolutionary trees. Applied mathematics letters, 6(2), 13–16.

- Felsenstein, Joseph (November 1981). "Evolutionary trees from DNA sequences: A maximum likelihood approach". Journal of Molecular Evolution. 17 (6): 368–376. Bibcode:1981JMolE..17..368F. doi:10.1007/BF01734359. ISSN 0022-2844. PMID 7288891. S2CID 8024924.

- Stamatakis, Alexandros (2019), Warnow, Tandy (ed.), "A Review of Approaches for Optimizing Phylogenetic Likelihood Calculations", Bioinformatics and Phylogenetics, Computational Biology, vol. 29, Cham: Springer International Publishing, pp. 1–19, doi:10.1007/978-3-030-10837-3_1, ISBN 978-3-030-10836-6, S2CID 145834168, retrieved 2020-10-10

- Chor, Benny; Hendy, Michael D.; Holland, Barbara R.; Penny, David (2000-10-01). "Multiple Maxima of Likelihood in Phylogenetic Trees: An Analytic Approach". Molecular Biology and Evolution. 17 (10): 1529–1541. doi:10.1093/oxfordjournals.molbev.a026252. ISSN 1537-1719. PMID 11018159.

- Matsen, Frederick A.; Steel, Mike (2007-10-01). Ané, Cécile; Sullivan, Jack (eds.). "Phylogenetic Mixtures on a Single Tree Can Mimic a Tree of Another Topology". Systematic Biology. 56 (5): 767–775. arXiv:0704.2260. doi:10.1080/10635150701627304. ISSN 1076-836X. PMID 17886146.

- Waddell, Peter J; Steel, M.A (December 1997). "General Time-Reversible Distances with Unequal Rates across Sites: Mixing Γ and Inverse Gaussian Distributions with Invariant Sites". Molecular Phylogenetics and Evolution. 8 (3): 398–414. doi:10.1006/mpev.1997.0452. PMID 9417897.

- Steel, M. A.; Hendy, M. D.; Penny, D. (1993-12-01). "Parsimony Can Be Consistent!". Systematic Biology. 42 (4): 581–587. doi:10.1093/sysbio/42.4.581. ISSN 1063-5157.

- Hendy, M. D.; Penny, D.; Steel, M. A. (1994-04-12). "A discrete Fourier analysis for evolutionary trees". Proceedings of the National Academy of Sciences. 91 (8): 3339–3343. Bibcode:1994PNAS...91.3339H. doi:10.1073/pnas.91.8.3339. ISSN 0027-8424. PMC 43572. PMID 8159749.

- Miyamoto, M. M.; Koop, B. F.; Slightom, J. L.; Goodman, M.; Tennant, M. R. (1988-10-01). "Molecular systematics of higher primates: genealogical relations and classification". Proceedings of the National Academy of Sciences. 85 (20): 7627–7631. Bibcode:1988PNAS...85.7627M. doi:10.1073/pnas.85.20.7627. ISSN 0027-8424. PMC 282245. PMID 3174657.
