
The Number One Electronic Switching System (1ESS) was the first large-scale stored program control (SPC) telephone exchange or electronic switching system in the Bell System. It was manufactured by Western Electric and first placed into service in Succasunna, New Jersey, in May 1965. The switching fabric was composed of a reed relay matrix controlled by wire spring relays which in turn were controlled by a central processing unit (CPU).

The 1AESS central office switch was a plug-compatible, higher-capacity upgrade from 1ESS. Its faster 1A processor incorporated the existing instruction set for programming compatibility, and the system used smaller remreed switches and fewer relays, and featured disk storage. It was in service from 1976 to 2017.

Switching fabric


The voice switching fabric plan was similar to that of the earlier 5XB switch in being bidirectional and in using the call-back principle. However, the largest full-access matrix switches in the system were 8x8 rather than 10x10 or 20x16 (the 12A line grids had only partial access). Eight stages, rather than four, were therefore required to achieve large enough junctor groups in a large office. Because crosspoints were more expensive in the new system but switches were cheaper, system cost was minimized by organizing fewer crosspoints into more switches. The fabric was divided into Line Networks and Trunk Networks of four stages each, partially folded to allow line-to-line or trunk-to-trunk connections without exceeding eight stages of switching.

In the traditional implementation of a nonblocking minimal spanning switch, able to connect $N$ input customers to $N$ output customers simultaneously with the connections initiated in any order, the connection matrix scales as $N^2$. Because this is impractical, statistical theory is used to design hardware that can connect most calls and blocks others when traffic exceeds the design capacity. Such blocking switches are the most common type in modern telephone exchanges, and are generally implemented as smaller switch fabrics in cascade. In many of them, a randomizer selects the start of a path through the multistage fabric so that the statistical properties predicted by the theory are actually obtained. In addition, if the control system is able to rearrange the routing of existing connections when a new connection arrives, a fully non-blocking fabric requires fewer switch points.
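To make the scaling argument concrete, the sketch below counts the crosspoints of a single square matrix, which grow as $N^2$, and evaluates the Erlang B blocking probability for a group of circuits as one example of the statistical theory used for blocking designs. The choice of the Erlang B model (random arrivals, blocked calls cleared) is an assumption for illustration; the text does not say which traffic model was used to engineer the 1ESS fabric.

```python
def square_matrix_crosspoints(n: int) -> int:
    """Crosspoints in a single-stage, full-access n x n matrix: grows as n**2."""
    return n * n


def erlang_b(traffic_erlangs: float, servers: int) -> float:
    """Probability that a call is blocked when `servers` circuits carry
    `traffic_erlangs` of offered traffic (Erlang B, blocked calls cleared).

    Uses the numerically stable recurrence
    B(E, 0) = 1,  B(E, k) = E*B(E, k-1) / (k + E*B(E, k-1)).
    """
    if traffic_erlangs < 0 or servers < 0:
        raise ValueError("traffic and server count must be non-negative")
    blocking = 1.0
    for k in range(1, servers + 1):
        blocking = traffic_erlangs * blocking / (k + traffic_erlangs * blocking)
    return blocking


if __name__ == "__main__":
    for n in (64, 1024, 65536):
        print(n, "customers ->", square_matrix_crosspoints(n), "crosspoints")
    # Hypothetical group of 16 circuits offered 8 erlangs of traffic.
    print(f"blocking: {erlang_b(8.0, 16):.4f}")
```

Doubling the number of circuits in a group sharply lowers blocking for the same offered traffic, which is why larger junctor groups were more efficient.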

Line and trunk networks

Each four-stage Line Network (LN) or Trunk Network (TN) was divided into Junctor Switch Frames (JSF) and either Line Switch Frames (LSF), in the case of a Line Network, or Trunk Switch Frames (TSF), in the case of a Trunk Network. Links were designated A, B, C, and J (for Junctor). A links were internal to the LSF or TSF; B links connected the LSF or TSF to the JSF; C links were internal to the JSF; and J links, or junctors, connected to another network in the exchange.

All JSFs had a unity concentration ratio; that is, the number of B links within the network equalled the number of junctors to other networks. Most LSFs had a 4:1 Line Concentration Ratio (LCR), meaning the lines were four times as numerous as the B links. In some urban areas, 2:1 LSFs were used. The B links were often multipled to make a higher LCR, such as 3:1 or (especially in suburban 1ESS offices) 5:1. Line Networks always had 1024 junctors, arranged in 16 grids that each switched 64 junctors to 64 B links. Four grids were grouped for control purposes in each of four LJFs.
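The figures in this paragraph fix the size of a Line Network: with 1024 junctors and unity-ratio JSFs there are 1024 B links, so the number of lines is the B-link count multiplied by the LCR. The short sketch below does that arithmetic; the function names are illustrative, and applying one uniform LCR to a whole LN is a simplifying assumption, since real offices could mix grid types.

```python
# Figures taken from the text; a single uniform LCR per LN is an assumption.
JUNCTORS_PER_LN = 1024  # Line Networks always had 1024 junctors
GRIDS_PER_LN = 16       # arranged in 16 junctor grids
FRAMES_PER_LN = 4       # grids grouped four per frame for control


def junctors_per_grid() -> int:
    """Junctors (and B links) switched by one junctor grid."""
    return JUNCTORS_PER_LN // GRIDS_PER_LN


def lines_per_ln(lcr: int) -> int:
    """Lines on one LN whose LSFs all use an lcr:1 line concentration ratio."""
    b_links = JUNCTORS_PER_LN  # unity ratio in the JSFs: B links == junctors
    return b_links * lcr


if __name__ == "__main__":
    print(junctors_per_grid(), "junctors per grid,",
          GRIDS_PER_LN // FRAMES_PER_LN, "grids per frame")
    for lcr in (2, 3, 4, 5):
        print(f"{lcr}:1 LCR ->", lines_per_ln(lcr), "lines")
```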

TSFs had a unity concentration ratio, but a TN could have more TSFs than JSFs. Their B links were therefore usually multipled to make a Trunk Concentration Ratio (TCR) of 1.25:1 or 1.5:1, the latter being especially common in 1A offices. TSFs and JSFs were identical except for their position in the fabric and the presence in the JSF of a ninth level for test access or no-test access. Each JSF or TSF was divided into four two-stage grids.

Early TNs had four JSF, for a total of 16 grids, 1024 J links and the same number of B links, with four B links from each Trunk Junctor grid to each Trunk Switch grid. Starting in the mid-1970s, larger offices had their B links wired differently, with only two B links from each Trunk Junctor Grid to each Trunk Switch Grid. This allowed a larger TN, with 8 JSF containing 32 grids, connecting 2048 junctors and 2048 B links. Thus the junctor groups could be larger and more efficient. These TN had eight TSF, giving the TN a unity trunk concentration ratio.
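Both wiring schemes are consistent with spreading each grid's 64 B links evenly over the grids on the other side: 64 / 16 = 4 links per grid pair in the early 16-grid TNs, and 64 / 32 = 2 in the later 32-grid ones. The sketch below checks that arithmetic and builds a wiring table. The even spreading is an inference from the figures in the text, and the link numbering in the table is hypothetical.

```python
B_LINKS_PER_GRID = 64  # each two-stage grid terminates 64 B links


def b_links_per_grid_pair(grids_per_side: int) -> int:
    """B links between one trunk junctor grid and one trunk switch grid,
    assuming each grid's 64 B links are spread evenly over the far side."""
    if grids_per_side <= 0 or B_LINKS_PER_GRID % grids_per_side:
        raise ValueError("64 B links cannot be spread evenly over that many grids")
    return B_LINKS_PER_GRID // grids_per_side


def b_link_wiring(grids_per_side: int) -> dict[tuple[int, int], list[int]]:
    """Hypothetical table: (junctor grid, switch grid) -> B-link numbers
    on the junctor grid. The numbering scheme is illustrative only."""
    per_pair = b_links_per_grid_pair(grids_per_side)
    table = {}
    for junctor_grid in range(grids_per_side):
        for switch_grid in range(grids_per_side):
            first = switch_grid * per_pair
            table[(junctor_grid, switch_grid)] = list(range(first, first + per_pair))
    return table


if __name__ == "__main__":
    for grids in (16, 32):
        table = b_link_wiring(grids)
        total = sum(len(links) for links in table.values())
        print(grids, "grids:", b_links_per_grid_pair(grids), "links per pair,",
              total, "B links in the TN")
```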

Within each LN or TN, the A, B, C and J links were counted from the outer termination to the inner. That is, for a trunk, the trunk Stage 0 switch could connect each trunk to any of eight A links, which in turn were wired to Stage 1 switches to connect them to B links. Trunk Junctor grids also had Stage 0 and Stage 1 switches, the former to connect B links to C links, and the latter to connect C to J links also called Junctors. Junctors were gathered into cables, 16 twisted pairs per cable constituting a Junctor Subgroup, running to the Junctor Grouping Frame where they were plugged into cables to other networks. Each network had 64 or 128 subgroups, and was connected to each other network by one or (usually) several subgroups.
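A two-stage grid built from sixteen 8x8 switches, as in the 10A and 11A grids, can be modeled as eight Stage 0 switches each sending one link to every one of eight Stage 1 switches. That "one link per switch pair" wiring is an assumption consistent with the 64-in, 64-out grids in the text, not a documented Western Electric wiring list. Under it, each inlet reaches each outlet through exactly one internal link, two calls can block each other inside the grid, and the grid uses 1024 crosspoints instead of the 4096 of a 64x64 square matrix.

```python
SWITCH_SIZE = 8  # 8x8 switches, as in the text


def grid_path(inlet: int, outlet: int) -> tuple[int, int, int]:
    """Return (stage0_switch, internal_link, stage1_switch) joining inlet to outlet.

    Assumed wiring: output k of Stage 0 switch s goes to input s of
    Stage 1 switch k, so each pair of switches shares exactly one link.
    """
    terminals = SWITCH_SIZE * SWITCH_SIZE
    if not (0 <= inlet < terminals and 0 <= outlet < terminals):
        raise ValueError("terminal out of range for a 64x64 grid")
    stage0 = inlet // SWITCH_SIZE
    stage1 = outlet // SWITCH_SIZE
    link = stage0 * SWITCH_SIZE + stage1  # unique link between the two switches
    return stage0, link, stage1


def paths_collide(call_a: tuple[int, int], call_b: tuple[int, int]) -> bool:
    """True if two (inlet, outlet) calls would need the same internal link."""
    return grid_path(*call_a)[1] == grid_path(*call_b)[1]


def grid_crosspoints() -> int:
    """Crosspoints in the grid: two stages of eight 8x8 switches."""
    return 2 * SWITCH_SIZE * (SWITCH_SIZE * SWITCH_SIZE)
```

The collision check shows why the control software had to hunt for an idle path: inlets on the same Stage 0 switch that want outlets on the same Stage 1 switch compete for a single link.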

The original 1ESS Ferreed switching fabric was packaged as separate 8x8 switches or other sizes, tied into the rest of the speech fabric and control circuitry by wire-wrap connections. The transmit/receive path of the analog voice signal was through a series of magnetic-latching reed switches (very similar to latching relays).

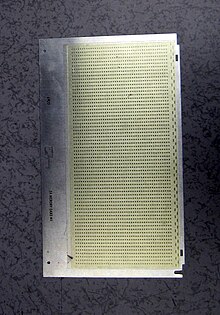

The much smaller Remreed crosspoints, introduced at about the same time as 1AESS, were packaged as grid boxes of four principal types. Type 10A Junctor Grids and 11A Trunk Grids were a box about 16x16x5 inches (40x40x12 cm) with sixteen 8x8 switches inside. Type 12A Line Grids with 2:1 LCR were only about 5 inches (12 cm) wide, with eight 4x4 Stage 0 line switches with ferrods and cutoff contacts for 32 lines, connected internally to four 4x8 Stage 1 switches connecting to B-links. Type 14A Line Grids with 4:1 LCR were about 16x12x5 inches (40x30x12 cm) with 64 lines, 32 A-links and 16 B-links. The boxes were connected to the rest of the fabric and control circuitry by slide-in connectors. Thus the worker had to handle a much bigger, heavier piece of equipment, but did not have to unwrap and rewrap dozens of wires.

Fabric error

The two controllers in each Junctor Frame had no-test access to their Junctors via their F-switch, a ninth level in the Stage 1 switches which could be opened or closed independently of the crosspoints in the grid. When setting up each call through the fabric, but before connecting the fabric to the line and/or trunk, the controller could connect a test scan point to the talk wires in order to detect potentials. Current flowing through the scan point would be reported to the maintenance software, resulting in a "False Cross and Ground" (FCG) teleprinter message listing the path. Then the maintenance software would tell the call completion software to try again with a different junctor.

With a clean FCG test, the call completion software told the "A" relay in the trunk circuit to operate, connecting the trunk's transmission and test hardware to the switching fabric and thus to the line. Then, for an outgoing call, the trunk's scan point would scan for the presence of an off-hook line. If the off-hook loop (a short across the line) was not detected, the software would command the printing of a "Supervision Failure" (SUPF) message and try again with a different junctor. A similar supervision check was performed when an incoming call was answered. Any of these tests could reveal the presence of a bad crosspoint.
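The test-and-retry sequence described in the two paragraphs above can be sketched as a loop over candidate junctors. Everything here is hypothetical: `has_foreign_potential` stands in for the test scan point connected through the F-switch, `supervision_ok` stands in for the trunk scan point check after the "A" relay operates, and the report strings only imitate the FCG and SUPF messages. The real call-processing code was 1ESS machine code.

```python
from typing import Callable, Iterable, Optional


def set_up_call(
    candidate_junctors: Iterable[int],
    has_foreign_potential: Callable[[int], bool],
    supervision_ok: Callable[[int], bool],
    report: Callable[[str], None] = print,
) -> Optional[int]:
    """Try junctors in order and return the first that passes both checks.

    has_foreign_potential(j): hypothetical test-scan-point check made before
        the fabric path is connected to the line and/or trunk.
    supervision_ok(j): hypothetical check that the trunk scan point sees the
        expected off-hook loop once the trunk is connected to the fabric.
    """
    for junctor in candidate_junctors:
        if has_foreign_potential(junctor):
            report(f"FCG junctor {junctor}")   # false cross and ground
            continue                          # try a different junctor
        if not supervision_ok(junctor):
            report(f"SUPF junctor {junctor}")  # supervision failure
            continue
        return junctor
    return None  # no usable path: the call would be blocked
```

Each failed attempt leaves a printed trace naming the path, which is the raw material for the failure analysis described next.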

Staff could study a mass of printouts to find which links and crosspoints (out of, in some offices, a million crosspoints) were causing calls to fail on first tries. In the late 1970s, teleprinter channels were gathered together in Switching Control Centers (SCC), later the Switching Control Center System, each serving a dozen or more 1ESS exchanges and using its own computers to analyze these and other kinds of failure reports. These computers generated a so-called histogram (actually a scatterplot) of the parts of the fabric where failures were particularly numerous, usually pointing to a particular bad crosspoint, even if it failed sporadically rather than consistently. Local workers could then busy out the appropriate switch or grid and replace it.
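The core of this analysis is a tally of which fabric elements appear on failed paths. A minimal sketch, assuming each FCG or SUPF report has already been parsed into the list of crosspoints on its path (the crosspoint labels below are hypothetical): a crosspoint that lies on many failed paths stands out even when it fails only intermittently.

```python
from collections import Counter
from typing import Iterable, Sequence


def suspect_crosspoints(
    failed_paths: Iterable[Sequence[str]], top: int = 3
) -> list[tuple[str, int]]:
    """Count how often each crosspoint lies on a failed path, most frequent first."""
    counts: Counter = Counter()
    for path in failed_paths:
        counts.update(set(path))  # count each crosspoint once per report
    return counts.most_common(top)


if __name__ == "__main__":
    reports = [
        ["A1", "B7", "C3"],
        ["A2", "B7", "C5"],
        ["A4", "B7", "C3"],
        ["A1", "B2", "C6"],
    ]
    print(suspect_crosspoints(reports, top=1))
```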

When a test access crosspoint itself was stuck closed, it would cause sporadic FCG failures all over both grids that were tested by that controller. Since the J links were externally connected, switchroom staff discovered that such failures could be found by making busy both grids, grounding the controller's test leads, and then testing all 128 J links, 256 wires, for a ground.

Given the limitations of 1960s hardware, some failures were unavoidable. Even when such a failure was detected, the system was designed to connect the calling party to the wrong person rather than to disconnect the call, route it to intercept, or react in some other disruptive way.

Scan and distribute

The computer received input from peripherals via magnetic scanners composed of ferrod sensors. These were similar in principle to magnetic core memory, except that the output was controlled by control windings analogous to the windings of a relay. Specifically, the ferrod was a transformer with four windings. Two small windings ran through holes in the center of a rod of ferrite. A pulse on the Interrogate winding was induced into the Readout winding if the ferrite was not magnetically saturated. When current flowed through the larger control windings, it saturated the ferrite, decoupling the Interrogate winding from the Readout winding, which would then return a zero signal. The Interrogate windings of the 16 ferrods in a row were wired in series to a driver, and the Readout windings of the 64 ferrods in a column were wired to a sense amplifier. Check circuits ensured that an Interrogate current was indeed flowing.
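Reading a scanner can be modeled as a bit-parallel read: interrogating one row returns one bit per column, and, as described above, a ferrod whose control winding carries current is saturated and reads as zero. The 64-row by 16-column geometry follows the winding counts in the text; the `FerrodScanner` class and the choice to pack each row into a 16-bit integer are assumptions made for illustration.

```python
ROWS = 64     # a column's sense amplifier reads 64 ferrods, so 64 rows
COLUMNS = 16  # a row's interrogate driver pulses 16 ferrods in series


class FerrodScanner:
    """Toy model of one ferrod scanner matrix (hypothetical class)."""

    def __init__(self) -> None:
        # True means current is flowing in that ferrod's control windings.
        self.control_current = [[False] * COLUMNS for _ in range(ROWS)]

    def interrogate(self, row: int) -> int:
        """Pulse one row driver and return its 16 readout bits as an int.

        An unsaturated ferrod couples the pulse to its readout winding (bit 1);
        a ferrod saturated by control current returns nothing (bit 0).
        """
        word = 0
        for col in range(COLUMNS):
            if not self.control_current[row][col]:
                word |= 1 << col
        return word
```

Software can then find state changes by comparing each row with the previous scan, for example with `previous ^ current`, and look only at the bits that differ.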

Scanners were Line Scanners (LSC), Universal Trunk Scanners (USC), Junctor Scanners (JSC) and Master Scanners (MS). The first three only scanned for supervision, while Master Scanners did all other scan jobs. For example, a DTMF Receiver, mounted in a Miscellaneous Trunk frame, had eight demand scan points, one for each frequency, and two supervisory scan points, one to signal the presence of a valid DTMF combination so the software knew when to look at the frequency scan points, and the other to supervise the loop. The supervisory scan point also detected Dial Pulses, with software counting the pulses as they arrived. Each digit when it became valid was stored in a software hopper to be given to the Originating Register.
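Counting dial pulses in software can be sketched from successive samples of the supervisory scan point: each break in loop current is one pulse, and a sufficiently long unbroken closure ends the digit, which is then placed in the hopper. The boolean sample representation, the sampling rate implied by `interdigit_samples`, and its default value are assumptions; the text does not give the 1ESS timing values.

```python
def count_dial_pulses(samples, interdigit_samples=10):
    """Convert supervisory scan-point samples into dialed digits.

    samples: sequence of booleans, True while loop current flows (loop closed).
    A closed-to-open transition counts one pulse; a closed run of at least
    `interdigit_samples` samples after one or more pulses completes a digit.
    Ten pulses represent the digit 0, as on a rotary dial.
    """
    hopper = []        # completed digits, in the order dialed
    pulses = 0
    closed_run = 0
    previous = True    # assume the line is already off hook before dialing
    for closed in samples:
        if previous and not closed:
            pulses += 1                      # a break in the loop: one pulse
        if closed:
            closed_run += 1
            if pulses and closed_run >= interdigit_samples:
                hopper.append(pulses % 10)   # 10 pulses -> digit 0
                pulses = 0
        else:
            closed_run = 0
        previous = closed
    return hopper
```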

Ferrods were mounted in pairs, usually with different control windings, so that one could supervise the switchward side of a trunk and the other the distant office. Components inside the trunk pack, including diodes, determined, for example, whether it performed reverse battery signaling as an incoming trunk or detected reverse battery from a distant trunk, i.e., acted as an outgoing trunk.

Line ferrods were also provided in pairs, of which the even numbered one had contacts brought out to the front of the package in lugs suitable for wire wrap so the windings could be strapped for loop start or ground start signaling. The original 1ESS packaging had all the ferrods of an LSF together, and separate from the line switches, while the later 1AESS had each ferrod at the front of the steel box containing its line switch. Odd numbered line equipment could not be made ground start, their ferrods being inaccessible.

The computer controlled the magnetic latching relays by Signal Distributors (SD) packaged in the Universal Trunk frames, Junctor frames, or Miscellaneous Trunk frames, according to which they were numbered as USD, JSD, or MSD. SDs were originally contact trees of 30-contact wire spring relays, each driven by a flip-flop. Each magnetic latching relay had one transfer contact dedicated to sending a pulse back to the SD on each operate and release. The pulser in the SD detected this pulse to determine that the action had occurred, or else alerted the maintenance software to print an FSCAN report. In later 1AESS versions, SDs were solid state, with several SD points per circuit pack, generally on the same shelf as the trunk pack or an adjacent one.
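The operate-and-verify handshake can be sketched as follows: software orders a relay to operate or release through an SD point, then checks for the confirming pulse from the relay's dedicated transfer contact and reports a failure if none arrives. The function names, the callback style, and the relay label format are hypothetical; only the FSCAN report name comes from the text.

```python
from typing import Callable


def distribute(
    relay: str,
    operate: bool,
    drive_relay: Callable[[str, bool], None],
    pulse_returned: Callable[[str], bool],
    report: Callable[[str], None] = print,
) -> bool:
    """Order a magnetic latching relay to operate or release, then verify it.

    drive_relay: hypothetical hook that energizes the SD point.
    pulse_returned: hypothetical hook telling whether the relay's dedicated
        transfer contact sent the confirming pulse back to the SD pulser.
    """
    drive_relay(relay, operate)
    if pulse_returned(relay):
        return True
    action = "operate" if operate else "release"
    report(f"FSCAN {relay} failed to {action}")  # alert maintenance software
    return False
```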

A few peripherals that needed quicker response time, such as Dial Pulse Transmitters, were controlled via Central Pulse Distributors, which otherwise were mainly used for enabling (alerting) a peripheral circuit controller to accept orders from the Peripheral Unit Address Bus.

1ESS computer

The duplicate Harvard architecture central processor or CC (Central Control) for the 1ESS operated at approximately 200 kHz. It comprised five bays, each two meters high and totaling about four meters in length per CC. Packaging was in cards approximately 4x10 inches (10x25 centimeters) with an edge connector in the back. Backplane wiring was cotton covered wire-wrap wires, not ribbons or other cables. CPU logic was implemented using discrete diode–transistor logic. One hard plastic card commonly held the components necessary to implement, for example, two gates or a flipflop.

A great deal of logic was given over to diagnostic circuitry. CPU diagnostics could be run that would attempt to identify failing cards. For single-card failures, first-attempt repair success rates of 90% or better were common. Multiple-card failures were not uncommon, however, and for them the first-attempt repair success rate dropped rapidly.

The CPU design was quite complex, using three-way interleaving of instruction execution (later called an instruction pipeline) to improve throughput. Each instruction would go through an indexing phase, an actual instruction execution phase, and an output phase. While an instruction was going through the indexing phase, the previous instruction was in its execution phase and the instruction before it was in its output phase.
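The three-way overlap can be illustrated with a schedule: at each step one instruction is being indexed, the previous one executed, and the one before that is in its output phase. This sketch shows only the overlap; it does not model hazards, branches, or the actual 1ESS phase timing.

```python
PHASES = ("index", "execute", "output")


def pipeline_schedule(instructions):
    """Return, for each step, which instruction occupies each phase (or None)."""
    schedule = []
    total_steps = len(instructions) + len(PHASES) - 1
    for step in range(total_steps):
        row = {}
        for depth, phase in enumerate(PHASES):
            i = step - depth  # the instruction that entered `depth` steps ago
            row[phase] = instructions[i] if 0 <= i < len(instructions) else None
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    for step, row in enumerate(pipeline_schedule(["I1", "I2", "I3", "I4"])):
        print(step, row)
```

Four instructions finish in six steps instead of the twelve that strictly sequential three-phase execution would take.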

In many instructions of the instruction set, data could be optionally masked and/or rotated. Single instructions existed for such esoteric functions as "find first set bit (the rightmost bit that is set) in a data word, optionally reset the bit and tell me the position of the bit". Having this function as an atomic instruction (rather than implementing as a subroutine) dramatically sped scanning for service requests or idle circuits. The central processor was implemented as a hierarchical state machine.
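In a language without such an instruction, the operation has to be composed from bit manipulations. The sketch below emulates it on a non-negative Python integer using the two's-complement identity `x & -x`, which isolates the rightmost set bit. The function names and the `reset` flag mirror the description above; they are not actual 1ESS mnemonics.

```python
from typing import Optional, Tuple


def find_first_set(word: int, reset: bool = False) -> Tuple[Optional[int], int]:
    """Return (position of the rightmost set bit or None, possibly updated word).

    Positions count from 0 at the least significant end. If reset is True,
    the found bit is cleared in the returned word.
    """
    if word < 0:
        raise ValueError("expects a non-negative machine word")
    if word == 0:
        return None, word
    lowest = word & -word              # isolates the rightmost 1 bit
    position = lowest.bit_length() - 1
    if reset:
        word &= ~lowest                # clear it once the request is taken
    return position, word


def service_requests(word: int) -> list[int]:
    """Hypothetical scan loop: list every set bit, lowest first."""
    positions = []
    while True:
        pos, word = find_first_set(word, reset=True)
        if pos is None:
            return positions
        positions.append(pos)
```

In a scan loop, each call yields the next pending request and clears it, so the loop runs once per request rather than once per bit.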

Memory had a 44-bit word length for program stores, of which six bits were for Hamming error correction and one was used for an additional parity check. This left 37 bits for the instruction, of which usually 22 bits were used for the address. This was an unusually wide instruction word for the time.
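Six check bits are exactly what a single-error-correcting Hamming code needs for 37 data bits, since $2^6 = 64 \ge 37 + 6 + 1$ while $2^5 = 32$ is not; the additional parity bit then lets double errors be detected, the usual SEC-DED arrangement. The sketch below builds such a 44-bit word with check bits at power-of-two positions and the overall parity bit at position 0. This textbook bit layout is an assumption; the actual 1ESS Program Store bit assignment may differ.

```python
DATA_BITS = 37
CHECK_BITS = 6
HAMMING_LEN = DATA_BITS + CHECK_BITS  # Hamming positions 1..43; position 0 = parity


def encode(data: int) -> int:
    """Return a 44-bit SEC-DED codeword for a 37-bit data value."""
    if not 0 <= data < (1 << DATA_BITS):
        raise ValueError("data must fit in 37 bits")
    code, d = 0, 0
    for pos in range(1, HAMMING_LEN + 1):
        if pos & (pos - 1):              # not a power of two: a data position
            if (data >> d) & 1:
                code |= 1 << pos
            d += 1
    for k in range(CHECK_BITS):          # check bit p covers positions with bit p set
        p = 1 << k
        parity = 0
        for pos in range(1, HAMMING_LEN + 1):
            if pos & p and (code >> pos) & 1:
                parity ^= 1
        if parity:
            code |= 1 << p
    if bin(code).count("1") % 2:
        code |= 1                        # overall parity bit: make total parity even
    return code


def decode(code: int) -> tuple[int, str]:
    """Return (data, status), status being 'ok', 'corrected', or 'double'."""
    syndrome = 0
    for pos in range(1, HAMMING_LEN + 1):
        if (code >> pos) & 1:
            syndrome ^= pos              # XOR of positions holding a 1
    overall_odd = bin(code).count("1") % 2 == 1
    status = "ok"
    if syndrome and overall_odd:
        code ^= 1 << syndrome            # single error at position `syndrome`
        status = "corrected"
    elif syndrome:
        return 0, "double"               # two errors: detected, not correctable
    elif overall_odd:
        code ^= 1                        # only the overall parity bit flipped
        status = "corrected"
    data, d = 0, 0
    for pos in range(1, HAMMING_LEN + 1):
        if pos & (pos - 1):
            data |= ((code >> pos) & 1) << d
            d += 1
    return data, status
```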

Program stores also contained permanent data and could not be written online. Instead, the aluminum memory cards, also called twistor planes, had to be removed in groups of 128 so that their permanent magnets could be written offline by a motorized writer, an improvement over the non-motorized single-card writer used in Project Nike. All memory frames, all busses, and all software and data were fully dual modular redundant. The dual CCs operated in lockstep, and the detection of a mismatch triggered an automatic sequencer to change the combination of CC, busses, and memory modules until a configuration was reached that could pass a sanity check. Busses were twisted pairs, one pair for each address, data, or control bit, connected at the CC and at each store frame by coupling transformers, and ending in terminating resistors at the last frame.
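The sequencer's search can be sketched as trying combinations of the duplicated units until one passes a sanity check. Which units are combined, the order in which combinations are tried, and the `passes_sanity_check` callback are all assumptions here; the real sequencer was hardware and its search order is not described in the text.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


def find_sane_configuration(
    units: Sequence[str],
    passes_sanity_check: Callable[[Tuple[int, ...]], bool],
) -> Optional[Tuple[int, ...]]:
    """Try copy 0 or copy 1 of each duplicated unit until a combination passes.

    units: names of the duplicated units, e.g. ("CC", "bus", "store").
    Returns the first passing tuple of copy numbers (one per unit), or None.
    """
    for config in product((0, 1), repeat=len(units)):
        if passes_sanity_check(config):
            return config
    return None


if __name__ == "__main__":
    # Hypothetical fault: copy 0 of the CC and copy 0 of the store are bad.
    units = ("CC", "bus", "store")
    chosen = find_sane_configuration(units, lambda c: c[0] == 1 and c[2] == 1)
    print(dict(zip(units, chosen)) if chosen else "no sane configuration")
```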

Call Stores were the system's read/write memory, containing the data for calls in progress and other temporary data. They had a 24-bit word, of which one bit was for parity check. They operated similarly to magnetic core memory, except that the ferrite was in sheets with a hole for each bit, and the coincident-current address and readout wires passed through that hole. The first Call Stores held 8 kilowords, in a frame approximately a meter wide and two meters tall.

The separate program memory and data memory were operated in antiphase, with the addressing phase of Program Store coinciding with the data fetch phase of Call Store and vice versa. This resulted in further overlapping, thus higher program execution speed than might be expected from the slow clock rate.

Programs were mostly written in machine code. Bugs that previously went unnoticed became prominent when 1ESS was brought to big cities with heavy telephone traffic, and delayed the full adoption of the system for a few years. Temporary fixes included the Service Link Network (SLN), which did approximately the job of the Incoming Register Link and Ringing Selection Switch of the 5XB switch, thus diminishing CPU load and decreasing response times for incoming calls, and a Signal Processor (SP) or peripheral computer of only one bay, to handle simple but time-consuming tasks such as the timing and counting of Dial Pulses. 1AESS eliminated the need for SLN and SP.

The half-inch tape drive was write-only, being used only for Automatic Message Accounting. Program updates were executed by shipping a load of Program Store cards with the new code written on them.

The Basic Generic program included constant "audits" to correct errors in the call registers and other data. When a critical hardware failure occurred in the processor or peripheral units, such as both controllers of a line switch frame failing and being unable to receive orders, the machine would stop connecting calls and go into a "phase of memory regeneration", "phase of reinitialization", or "Phase" for short. The phases were known as Phase 1, 2, 4, or 5. Lesser phases cleared only the call registers of calls that were in an unstable state, that is, not yet connected, and took less time.

During a Phase, the system, normally roaring with the sound of relays operating and releasing, would go quiet as no relays were getting orders. The Teletype Model 35 would ring its bell and print a series of P's while the phase lasted. For central office staff this could be a scary time, as seconds and then perhaps minutes passed while they knew subscribers who picked up their phones would get dead silence until the phase was over and the processor regained "sanity" and resumed connecting calls. Greater phases took longer, clearing all call registers, thus disconnecting all calls and treating any off-hook line as a request for dial tone. If the automated phases failed to restore system sanity, there were manual procedures to identify and isolate bad hardware or buses.
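The difference between lesser and greater phases can be sketched as a filter over the call registers. Treating "stable" as "connected" follows the text; the `CallRegister` structure is hypothetical, and because the text does not say which phase numbers count as lesser, the sketch takes a simple `clear_all` flag instead of a phase number.

```python
from dataclasses import dataclass


@dataclass
class CallRegister:
    """Hypothetical call register: the line it serves and whether it is connected."""
    line: int
    connected: bool


def run_phase(registers: list[CallRegister], clear_all: bool) -> list[CallRegister]:
    """Return the call registers that survive a phase.

    Lesser phase (clear_all=False): drop only unstable, not-yet-connected calls.
    Greater phase (clear_all=True): drop every register, disconnecting all calls;
    any line still off hook afterwards is treated as a new dial-tone request.
    """
    if clear_all:
        return []
    return [r for r in registers if r.connected]
```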

1AESS

Most of the thousands of 1ESS and 1AESS offices in the USA were replaced in the 1990s by DMS-100, 5ESS Switch and other digital switches, and since 2010 also by packet switches. As of late 2014, just over 20 1AESS installations remained in the North American network, which were located mostly in AT&T's legacy BellSouth and AT&T's legacy Southwestern Bell states, especially in the Atlanta GA metro area, the Saint Louis MO metro area, and in the Dallas/Fort Worth TX metro area. In 2015, AT&T did not renew a support contract with Alcatel-Lucent (now Nokia) for the 1AESS systems still in operation and notified Alcatel-Lucent of its intent to remove them all from service by 2017. As a result, Alcatel-Lucent dismantled the last 1AESS lab at the Naperville Bell Labs location in 2015, and announced the discontinuation of support for the 1AESS. In 2017, AT&T completed the removal of remaining 1AESS systems by moving customers to other newer technology switches, typically with Genband switches with TDM trunking only.

The last known 1AESS switch was in Odessa, TX (Odessa Lincoln Federal wirecenter ODSSTXLI). It was disconnected from service around June 3, 2017 and cut over to a Genband G5/G6 packet switch.

Other electronic switching systems

The No. 1 Electronic Switching System Arranged with Data Features (No. 1 ESS ADF) was an adaptation of the Number One Electronic Switching System to create a store and forward message switching system. It used both single and multi-station lines for transmitting teletypewriter and data messages. It was created to respond to a growing need for rapid and economical delivery of data and printed copy.

Features

The No. 1 ESS ADF had a large number of features, including:

- Mnemonic addresses: Alphanumeric codes used to address stations

- Group code addresses: Mnemonic codes used to address a specific combination of stations

- Precedence: Message delivery according to four levels of precedence

- Date and time services: Optional date and time of message origination and delivery

- Multiline hunting groups: Distribution of messages to the next available station in a group

- Alternate delivery: Optional routing of all messages addressed to one station to another station


References

- Ketchledge, R.: “The No. 1 Electronic Switching System” IEEE Transactions on Communications, Volume 13, Issue 1, Mar 1965, pp 38–41

- 1A Processor, Bell System Technical Journal, 56(2), 119 (February 1977)

- "No. 1 Electronic Switching System"

- D. Danielsen, K. S. Dunlap, and H. R. Hofmann. "No. 1 ESS Switching Network Frames and Circuits". 1964.

- J. G. Ferguson, W. E. Grutzner, D. C. Koehler, R. S. Skinner, M. T. Skubiak, and D. H. Wetherell. "No. 1 ESS Apparatus and Equipment". Bell System Technical Journal. 1964.

- Al L Varney. "Questions About The No. 1 ESS Switch". 1991.

- Adar, Eytan; Tan, Desney; Teevan, Jaime (April 2013). "Benevolent deception in human computer interaction" (PDF). CHI '13: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. SIGCHI Conference on Human Factors in Computing Systems. Paris: Association for Computing Machinery. p. 1. doi:10.1145/2470654.2466246. ISBN 978-1-4503-1899-0. p. 1:

Although the 1ESS knew when it failed, it was designed to connect the caller to the wrong person rather than react to the error in a more disruptive way ... caller, thinking that she had simply misdialed, would hang up and try again ... illusion of an infallible phone system preserved.

- Organization of no. 1 ESS central processor

- "Product Information and Downloads: 1AESS". Nokia Support Portal. Archived from the original on 2016-09-16.

- Mike, Jersey (2017-04-05). "The Position Light: Now For Something Completely The Same (#1AESS Retirement)". The Position Light. Retrieved 2021-11-24.

- No. 1 ESS ADF: System Organization and Objectives, Bell System Technical Journal, 49(10), 2733 (1970)

- No. 1 ESS ADF: System Organization and Objectives, Bell System Technical Journal, 49(10), 2747–2751 (1970)

